Given the current macro-economic environment, where should investors focus their search for sources of alpha in the year ahead? Ask enough economists or investment managers and you will find as many different opinions on the subject as you care to hear, no doubt many of them conflicting. These are some thoughts on the subject from my perspective as a quantitative hedge fund manager.

Global Market Performance in 2017

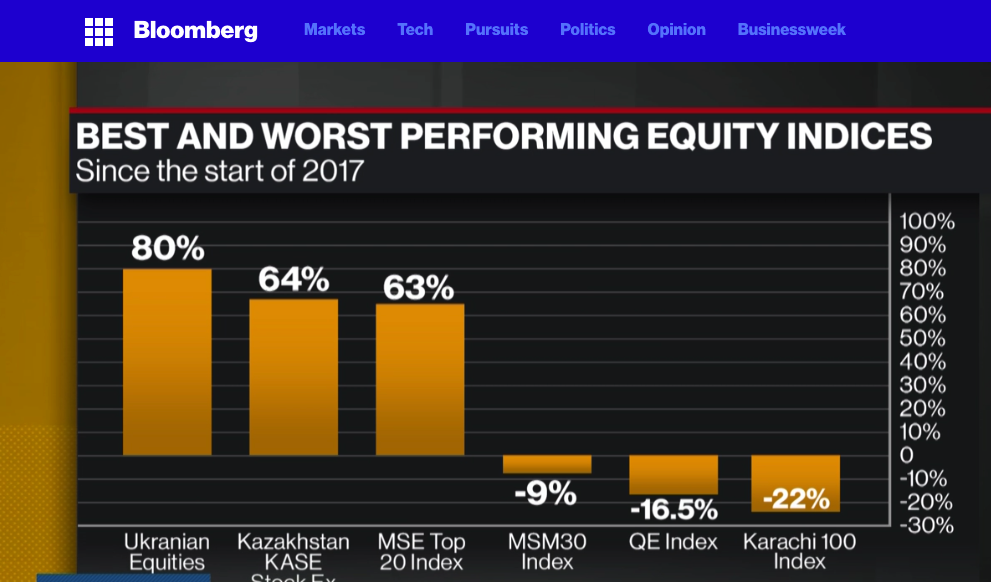

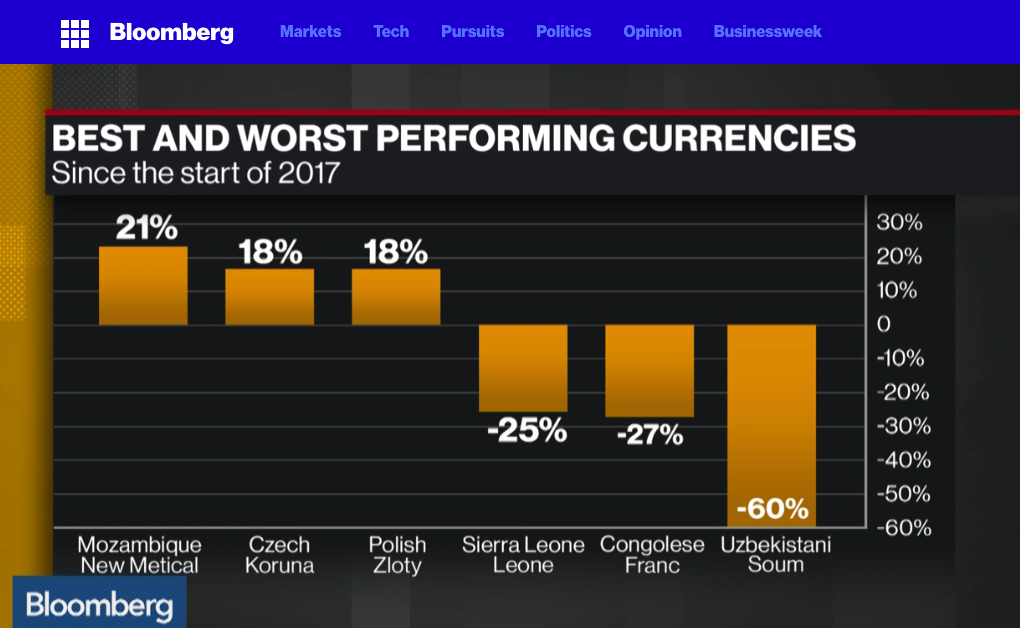

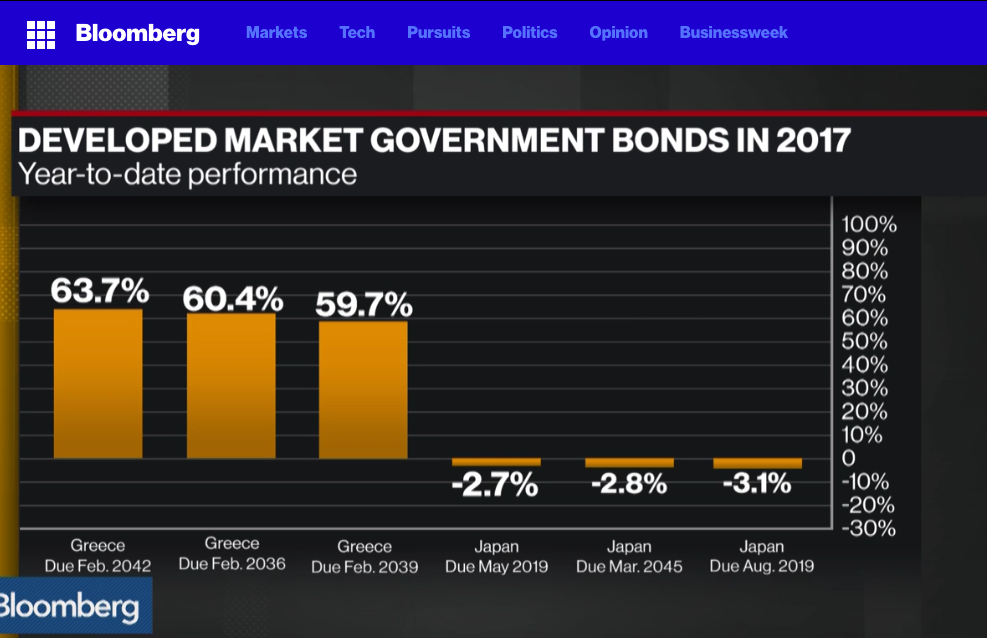

Let’s begin by reviewing some of the best and worst performing assets of 2017 (I am going to exclude cryptocurrencies from the ensuing discussion). Broadly speaking, the story across the piste has been one of strong appreciation in emerging markets, both in equities and currencies, especially in several of the Eastern European economies. In government bond markets, Greece has been the star of the show, having stepped back from the brink of the economic abyss. Overall, international diversification has been a key to investment success in 2017 and I believe that pattern will hold in 2018.

US Yield Curve and Its Implications

Another key development that investors need to take account of is the extraordinary degree of flattening of the yield curve in US fixed income over the course of 2017:

This process has now likely reached its end point and will begin to reverse as the Fed and other central banks in developed economies start raising rates. In 2018 investors should seek to protect their fixed income portfolios by shortening duration, moving towards the front end of the curve.
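To illustrate why shortening duration offers protection when rates rise, here is a minimal sketch comparing a short-dated and a long-dated bond under a parallel one percentage point rise in yields. The 2% coupon, 2% yield, the maturities and the function names are illustrative assumptions, not actual market levels or anything taken from our own models.

```python
def bond_price(face, coupon_rate, ytm, years, freq=2):
    """Price of a fixed-coupon bond paying `freq` coupons per year."""
    n = years * freq
    coupon = face * coupon_rate / freq
    y = ytm / freq
    pv_coupons = sum(coupon / (1 + y) ** t for t in range(1, n + 1))
    return pv_coupons + face / (1 + y) ** n


def modified_duration(face, coupon_rate, ytm, years, freq=2):
    """Modified duration in years (Macaulay duration / (1 + y/freq))."""
    n = years * freq
    coupon = face * coupon_rate / freq
    y = ytm / freq
    price = bond_price(face, coupon_rate, ytm, years, freq)
    # Time-weighted present value of each cash flow, with time in years.
    weighted = sum((t / freq) * coupon / (1 + y) ** t for t in range(1, n + 1))
    weighted += years * face / (1 + y) ** n
    macaulay = weighted / price
    return macaulay / (1 + y)


def price_change_pct(face, coupon_rate, ytm, years, shock, freq=2):
    """Percentage price change for a parallel yield shock (e.g. 0.01 = +1%)."""
    p0 = bond_price(face, coupon_rate, ytm, years, freq)
    p1 = bond_price(face, coupon_rate, ytm + shock, years, freq)
    return (p1 / p0 - 1) * 100


# Hypothetical bonds: 2% coupon, priced at a 2% yield (assumed values).
for years in (2, 10):
    dur = modified_duration(100, 0.02, 0.02, years)
    chg = price_change_pct(100, 0.02, 0.02, years, shock=0.01)
    print(f"{years:>2}y bond: modified duration {dur:.2f}, "
          f"price change for +1% in yields {chg:.2f}%")
```

Under these assumptions the ten-year bond loses several times as much as the two-year bond for the same rise in yields, which is the rationale for moving towards the front end of the curve.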

US Volatility and Equity Markets

A prominent feature of US markets during 2017 has been the continuing collapse of equity index volatility, specifically the VIX Index, which reached an all-time low of 9.14 in November and continues to languish at less than half the average level of the last decade:

Source: Wolfram Alpha

One consequence of the long-term decline in volatility has been to drastically reduce the profitability of derivatives markets, for both traders and market makers. Firms have struggled to keep up with the high cost of technology and the expense of being connected to the fragmented U.S. options market, which is spread across 15 exchanges. Earlier in 2017, Interactive Brokers Group Inc. sold its Timber Hill options market-making unit, a pioneer of electronic trading, to Two Sigma Securities. Then, in November, Goldman Sachs announced it was shuttering its option market-making business on US exchanges, citing high costs, sluggish volume and low volatility.

The impact has likewise been felt by volatility strategies, which performed well in 2015 and 2016, only to see returns decline substantially in 2017. Our own Systematic Volatility strategy, for example, finished the year up only 8.08%, having produced over 28% in the prior year.

One side-effect of low levels of index volatility has been a fall in stock return correlations, and, conversely, a rise in the dispersion of stock returns. It turns out that index volatility and stock correlation are themselves correlated and indeed, cointegrated:

http://jonathankinlay.com/2017/08/correlation-cointegration/

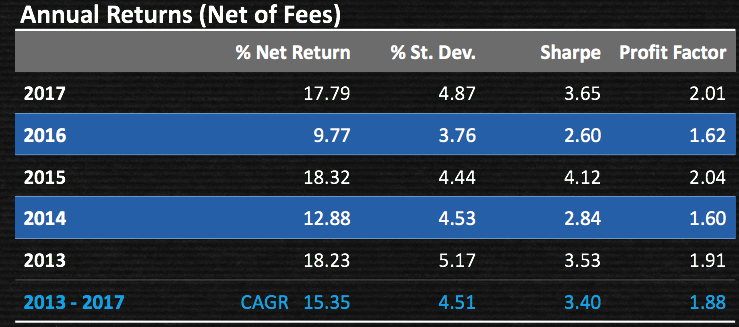

In simple terms, stocks have a tendency to disperse more widely around an increasingly sluggish index. The “kinetic energy” of markets has to disperse somewhere and if movements in the index are muted then relative movement in individual equity returns will become more accentuated. This is an environment that ought to favor stock picking and both equity long/short and market neutral strategies should outperform. This certainly proved to be the case for our Quantitative Equity long/short strategy, which produced a net return of 17.79% in 2017, but with an annual volatility of under 5%:

Looking ahead to 2018, I expect index volatility and equity correlations to rise as the yield curve begins to steepen, producing better opportunities for volatility strategies. Returns from equity long/short and market neutral strategies may moderate a little as dispersion diminishes.
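The trade-off between index volatility and dispersion described in this section can be made concrete with a stylized calculation. The sketch below assumes an equal-weighted index of n stocks that all share the same volatility and the same pairwise correlation; these are simplifying assumptions for illustration, not a description of any real index or of our own models, and the function names and input values are hypothetical. Under those assumptions the index variance is σ²(1/n + (1 − 1/n)ρ) and the expected cross-sectional variance of returns is σ²(1 − 1/n)(1 − ρ), so the two always sum to σ².

```python
import math


def index_vol(stock_vol, avg_corr, n):
    """Volatility of an equal-weighted index of n stocks with a common
    volatility and a common pairwise correlation (stylized assumption)."""
    return stock_vol * math.sqrt(1 / n + (1 - 1 / n) * avg_corr)


def dispersion(stock_vol, avg_corr, n):
    """Square root of the expected cross-sectional variance of stock
    returns around the index return, under the same assumptions."""
    return stock_vol * math.sqrt((1 - 1 / n) * (1 - avg_corr))


# Hypothetical inputs: 500 stocks, each with 25% annual volatility.
for rho in (0.6, 0.4, 0.2):
    iv = index_vol(0.25, rho, 500)
    disp = dispersion(0.25, rho, 500)
    print(f"avg correlation {rho:.1f}: index vol {iv:.1%}, dispersion {disp:.1%}")
```

With stock-level volatility held fixed, a lower average correlation produces a quieter index and wider dispersion, and a rise in correlation does the opposite, which is the sense in which the "kinetic energy" has to go somewhere.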

Futures Markets

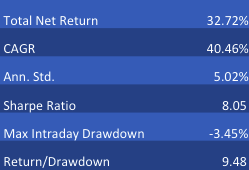

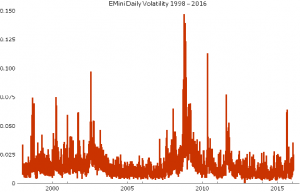

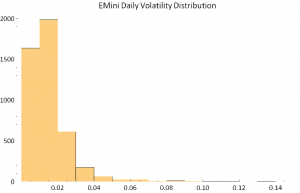

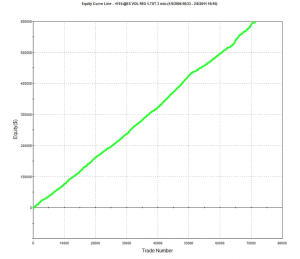

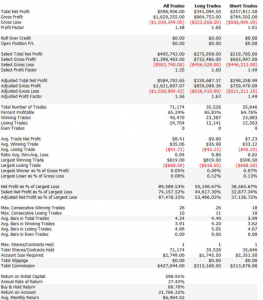

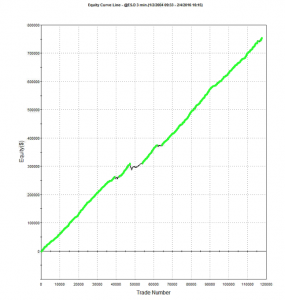

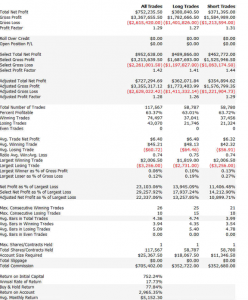

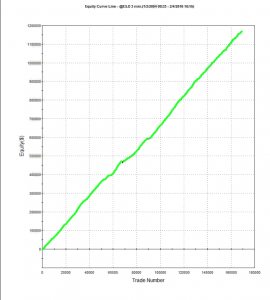

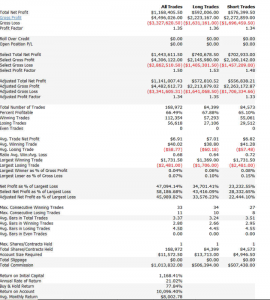

Big increases in commodity prices and dispersion levels also led to improvements in the performance of many CTA strategies in 2017. In the low frequency space our Futures WealthBuilder strategy produced a net return of 13.02% in 2017, with a Sharpe Ratio above 3 (CAGR from inception in 2013 is now at 20.53%, with an average annual standard deviation of 6.36%). The star performer, however, was our High Frequency Futures strategy. Since launch in March 2017 this has produced a net return of 32.72%, with an annual standard deviation of 5.02%, on track to generate an annual Sharpe Ratio above 8:

Looking ahead, the World Bank has forecast an increase of around 4% in energy prices during 2018, with smaller increases in the price of agricultural products. This should be helpful to many CTA strategies, which are likely to see further improvements in performance over the course of the year. Higher frequency strategies are more dependent on commodity market volatility, which seems more likely to rise than fall in the year ahead.
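For readers who want to check the arithmetic behind the Sharpe Ratio figures quoted for the futures strategies, here is a rough sketch. It assumes a zero risk-free rate, compounding of the part-year return to a full year, and roughly ten months elapsed since the March 2017 launch; none of these assumptions is stated above, and the function names are hypothetical.

```python
def annualized_return(cumulative_return, months_elapsed):
    """Compound a part-year cumulative return up to a 12-month rate."""
    return (1 + cumulative_return) ** (12 / months_elapsed) - 1


def sharpe_ratio(annual_return, annual_vol, risk_free=0.0):
    """Annual excess return per unit of annual volatility."""
    return (annual_return - risk_free) / annual_vol


# High Frequency Futures: 32.72% since launch, 5.02% annual standard deviation.
# months_elapsed=10 is an assumption (March to year end).
hf_annual = annualized_return(0.3272, months_elapsed=10)
hf_sharpe = sharpe_ratio(hf_annual, 0.0502)

# Futures WealthBuilder since inception: CAGR 20.53%, standard deviation 6.36%.
# With a zero risk-free rate this is a return-to-volatility ratio.
wb_ratio = sharpe_ratio(0.2053, 0.0636)

print(f"High Frequency Futures: annualized {hf_annual:.2%}, Sharpe {hf_sharpe:.2f}")
print(f"Futures WealthBuilder: return/volatility {wb_ratio:.2f}")
```

On these assumptions the High Frequency Futures ratio comes out close to 8. The result is sensitive to the number of months assumed: a shorter elapsed period gives a higher annualized figure.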

Conclusion

US fixed income investors are likely to want to shorten duration as the yield curve begins to steepen in 2018, bringing with it higher levels of index volatility that will favor equity high frequency and volatility strategies. As in 2017, there is likely to be much benefit in diversifying across international equity and currency markets. Strengthening energy prices are likely to sustain higher rates of return in futures strategies during the coming year.