What is a Meta-Strategy?

In my previous post on identifying drivers of strategy performance I mentioned the possibility of developing a meta-strategy.

A meta-strategy is a trading system that trades trading systems. The idea is to develop a strategy that will make sensible decisions about when to trade a specific system, in a way that yields superior performance compared to simply following the underlying trading system. Put another way, the simplest kind of meta-strategy is a long-only strategy that takes positions in some underlying trading system. At times, it will follow the underlying system exactly; at other times it will stay out of the market and ignore the trading system’s recommendations.

More generally, a meta-strategy can determine the size in which one, or several, systems should be traded at any point in time, including periods when the size is zero (i.e. the system is not currently traded). Typically, a meta-strategy is long-only: in theory there is nothing to stop you developing a meta-strategy that shorts your underlying strategy from time to time, but that is a little counter-intuitive to say the least!

A meta-strategy is something that could be very useful for a fund-of-funds, as a way of deciding how to allocate capital amongst managers.

Caissa Capital operated a meta-strategy in its option arbitrage hedge fund back in the early 2000s. The meta-strategy (we called it a “model management system”) selected from a half dozen different volatility models to be used for option pricing, depending on their performance, as measured by around 30 different criteria. The criteria included both statistical metrics, such as the mean absolute percentage error in the forward volatility forecasts, and trading performance criteria, such as the moving average of the trade PNL. The model management system probably added 100 to 200 basis points per annum to the performance of the underlying strategy, so it was a valuable add-on.

Illustration of a Meta-Strategy in US Bond Futures

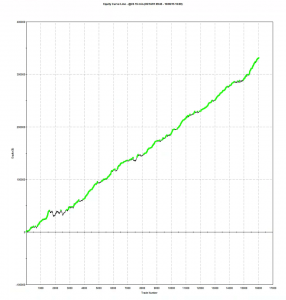

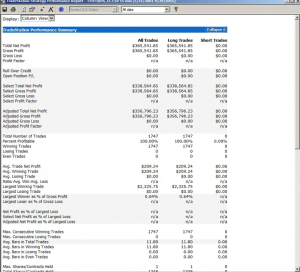

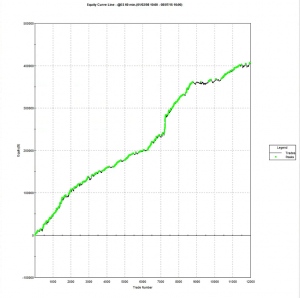

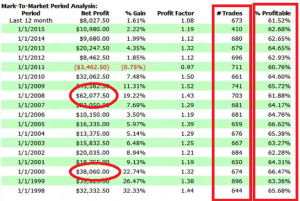

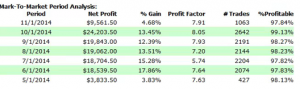

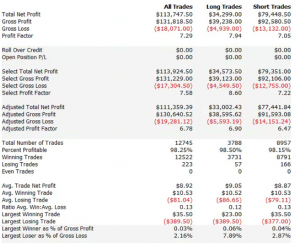

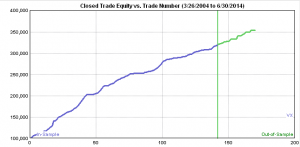

To illustrate the concept we will use an underlying system that trades US Bond futures at 15-minute bar intervals. The performance of the system is summarized in the chart and table below.

Strategy performance has been very consistent over the last seven years, in terms of the annual returns, number of trades and % win rate. Can it be improved further?

To assess this possibility we create a new data series comprising the points of the equity curve illustrated above. More specifically, we form a series comprising the open, high, low and close values of the strategy equity, for each trade. We will proceed to treat this as a new data series and apply a range of different modeling techniques to see if we can develop a trading strategy, in exactly the same way as we would if the underlying was a price series for a stock.
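As a rough sketch of how such a series might be assembled in Python, suppose that for each trade we have the running mark-to-market PnL at every bar while the trade is open. The names `EquityBar` and `equity_bars`, the input layout and the sample numbers are all hypothetical, chosen for illustration; they are not taken from the bond futures system.

```python
from typing import NamedTuple, Sequence


class EquityBar(NamedTuple):
    """Open/high/low/close of the strategy equity over one trade."""
    open: float
    high: float
    low: float
    close: float


def equity_bars(start_equity: float,
                trade_paths: Sequence[Sequence[float]]) -> list[EquityBar]:
    """Build one equity bar per trade.

    trade_paths[i] is assumed to hold the running PnL of trade i at each
    bar while the trade is open; its last value is the realized PnL.
    """
    bars = []
    equity = start_equity
    for path in trade_paths:
        # Equity marks during the trade, starting from the pre-trade equity.
        marks = [equity] + [equity + pnl for pnl in path]
        bars.append(EquityBar(open=marks[0], high=max(marks),
                              low=min(marks), close=marks[-1]))
        equity = marks[-1]  # the next trade opens where this one closed
    return bars


# Two made-up trades: a winner, then a loser.
bars = equity_bars(100_000.0, [[-200.0, 150.0, 400.0],
                               [50.0, -300.0, -250.0]])
for number, bar in enumerate(bars, start=1):
    print(number, bar)
```

Each bar opens at the previous bar's close, so the series is indexed by trade number rather than by date.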

It is important to note here that, for the meta-strategy at least, we are working in trade-time, not calendar time. The x-axis will measure the trade number of the underlying strategy, rather than the date of entry (or exit) of the underlying trade. Thus equally spaced points on the x-axis represent different lengths of calendar time, depending on the duration of each trade.

It is necessary to work in trade time rather than calendar time because, unlike a stock, it isn’t possible to trade the underlying strategy whenever we want to: we can only enter or exit the strategy at points in time when it is about to take a trade, by accepting that trade or passing on it (for now, we ignore the other possibility, which is to vary the size of the underlying trade).

Another question is what kinds of trading ideas do we want to consider for the meta-strategy? In principle one could incorporate almost any trading concept, including the usual range of technical indicators such as RSI, or Bollinger bands. One can go further and use machine learning techniques, including Neural Networks, Random Forest, or SVM.

In practice, one tends to gravitate towards the simpler kinds of trading algorithm, such as moving averages (or MA crossover techniques), although there is nothing to say that more complex trading rules should not be considered. The development process follows a familiar path: you create a hypothesis, for example, that the equity curve of the underlying bond futures strategy tends to be mean-reverting, and then proceed to test it using various signals – perhaps a moving average, in this case. If the signal results in a potential improvement in the performance of the default meta-strategy (which is to take every trade in the underlying system), one includes it in the library of signals that may ultimately be combined to create the finished meta-strategy.
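To make the moving-average example concrete, here is a minimal sketch of one such signal in Python. It assumes a mean-reversion hypothesis: accept the next trade only when the underlying equity curve sits below its own moving average, measured in trade time. The function names, the window length and the PnL figures are hypothetical and purely illustrative; this is not the signal set used for the bond futures system, and the toy numbers say nothing about real performance.

```python
def ma_filter(trade_pnls, window, take_when_below=True):
    """Decide, trade by trade, whether to accept the underlying trade.

    The decision for trade i uses only the underlying (unfiltered) equity
    curve up to trade i - 1, so there is no look-ahead.
    """
    equity = []   # cumulative PnL of the underlying system, per trade
    total = 0.0
    take = []
    for pnl in trade_pnls:
        if len(equity) < window:
            # Not enough history yet: fall back to the default
            # meta-strategy, which takes every trade.
            take.append(True)
        else:
            moving_avg = sum(equity[-window:]) / window
            below = equity[-1] < moving_avg
            take.append(below if take_when_below else not below)
        total += pnl
        equity.append(total)
    return take


def filtered_pnls(trade_pnls, take):
    """PnL stream of the meta-strategy: skipped trades contribute zero."""
    return [pnl if accepted else 0.0
            for pnl, accepted in zip(trade_pnls, take)]


# Made-up per-trade PnL for an underlying system.
pnls = [1.0, 1.0, -3.0, 2.0, -1.0, 1.0]
take = ma_filter(pnls, window=2)
meta = filtered_pnls(pnls, take)
print(take)                  # which trades the meta-strategy accepts
print(sum(pnls), sum(meta))  # underlying total vs. meta-strategy total
```

Setting `take_when_below=False` flips the rule into a trend-following one, which is a convenient way to test the opposite hypothesis on the same data.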

As with any strategy development you should follow the usual procedure of separating the trade data into one set used for in-sample modeling and another used for out-of-sample performance testing.
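A simple way to make that split, sketched in Python, is to cut the trade history chronologically so that the most recent trades are held back for testing. The helper name `split_trades` is hypothetical, and the 20% default is an assumption for illustration, chosen to match the out-of-sample proportion used in the bond futures example.

```python
def split_trades(trades, oos_fraction=0.2):
    """Split a chronologically ordered trade list into in-sample and
    out-of-sample parts, keeping the most recent trades out-of-sample."""
    if not 0.0 < oos_fraction < 1.0:
        raise ValueError("oos_fraction must be strictly between 0 and 1")
    n_oos = int(round(len(trades) * oos_fraction))
    cut = len(trades) - n_oos
    return trades[:cut], trades[cut:]


# Ten made-up trade PnLs, oldest first.
history = [0.5, -0.2, 0.3, 0.1, -0.4, 0.6, 0.2, -0.1, 0.4, 0.3]
in_sample, out_of_sample = split_trades(history)
print(len(in_sample), len(out_of_sample))
```

The split is by position rather than at random, because shuffling would leak information from later trades into the modeling set.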

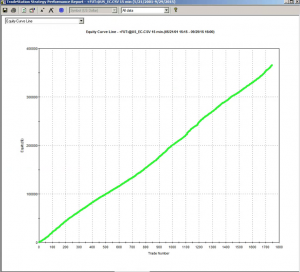

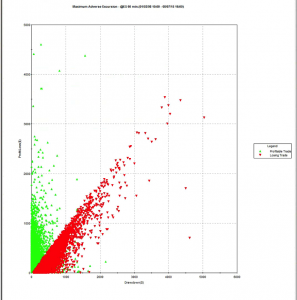

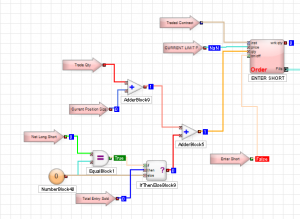

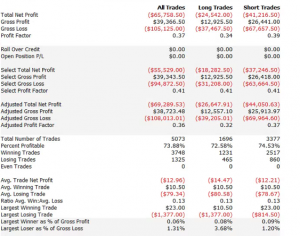

Following this general procedure I arrived at the following meta-strategy for the bond futures trading system.

The modeling procedure for the meta-strategy has succeeded in eliminating all of the losing trades in the underlying bond futures system, during both the in-sample period and the out-of-sample period (the latter comprising the most recent 20% of trades).

In general, it is unlikely that one can hope to improve the performance of the underlying strategy quite as much as this, of course. But it may well be possible to eliminate a sufficient proportion of losing trades to reduce the equity curve drawdown and/or increase the overall Sharpe ratio by a significant amount.
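One way to quantify such an improvement is to compute the maximum drawdown and a Sharpe ratio for the underlying and the filtered trade streams side by side. The sketch below assumes per-trade PnL as input and reports an unannualized, per-trade Sharpe ratio with skipped trades counted as zero; both choices are assumptions made for illustration, as are the function names and the made-up numbers.

```python
import math


def max_drawdown(pnls):
    """Largest peak-to-trough fall in cumulative PnL, in PnL units."""
    equity = peak = worst = 0.0
    for pnl in pnls:
        equity += pnl
        peak = max(peak, equity)
        worst = max(worst, peak - equity)
    return worst


def per_trade_sharpe(pnls):
    """Mean trade PnL divided by its sample standard deviation.

    This is a per-trade figure, not annualized.
    """
    n = len(pnls)
    if n < 2:
        raise ValueError("need at least two trades")
    mean = sum(pnls) / n
    variance = sum((p - mean) ** 2 for p in pnls) / (n - 1)
    if variance == 0.0:
        raise ValueError("PnL series has zero variance")
    return mean / math.sqrt(variance)


# Made-up PnL streams: every trade taken vs. two losing trades skipped.
underlying = [1.0, 1.0, -3.0, 2.0, -1.0, 1.0]
filtered = [1.0, 1.0, 0.0, 2.0, 0.0, 1.0]

for name, series in (("underlying", underlying), ("filtered", filtered)):
    print(name, max_drawdown(series), round(per_trade_sharpe(series), 3))
```

Counting skipped trades as zeros keeps both series the same length in trade time, so the two Sharpe figures are measured over the same set of opportunities.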

A Challenge / Opportunity

If you like the meta-strategy concept, but are unsure how to proceed, I may be able to help.

Send me the data for your existing strategy (see details below) and I will attempt to model a meta-strategy and send you the results. Together we can evaluate to what extent I have been successful in improving the performance of the underlying strategy.

Here are the details of what you need to do:

1. You must have an existing, profitable strategy, with sufficient performance history (either real, simulated, or a mixture of the two). I don’t need to know the details of the underlying strategy, or even what it is trading, although it would be helpful to have that information.

2. You must send the complete history of the equity curve of the underlying strategy, in Excel format, with column headings Date, Open, High, Low, Close. Each row represents consecutive trades of the underlying system and the O/H/L/C refers to the value of the equity curve for each trade.

3. The history must comprise at least 500 trades as an absolute minimum and preferably 1000 trades, or more.

4. At this stage I can only consider a single underlying strategy (i.e. a single equity curve).

5. You should not include any software or algorithms of any kind. Nothing proprietary, in other words.

6. I will give preference to strategies that have a (partial) live track record.

As my time is very limited these days I will not be able to deal with any submissions that fail to meet these specifications, or to enter into general discussions about the trading strategy with you.

You can reach me at jkinlay@systematic-strategies.com