Volatility Forecasting in Emerging Markets

The great majority of empirical studies have focused on asset markets in the US and other developed economies. The purpose of this research is to determine to what extent the findings of other researchers on the characteristics of asset volatility in developed economies apply also to emerging markets. The important characteristics of asset volatility that we wish to identify and examine in emerging markets include clustering (the tendency for periodic regimes of high or low volatility), long memory, asymmetry, and correlation with the underlying returns process. The extent to which such behaviors are present in emerging markets will serve to confirm or refute the conjecture that they are universal and not just the product of factors specific to the intensely scrutinized and widely traded developed markets.

The ten emerging markets we consider comprise equity markets in Australia, Hong Kong, Indonesia, Malaysia, New Zealand, the Philippines, Singapore, South Korea, Sri Lanka and Taiwan, focusing on the major market index in each. After analyzing the characteristics of index volatility for these indices, the research goes on to develop single- and two-factor REGARCH models in the form proposed by Alizadeh, Brandt and Diebold (2002).

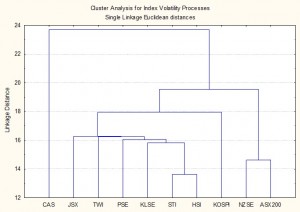

Cluster Analysis of Volatility Processes for Ten Emerging Market Indices

The research confirms the presence of a number of typical characteristics of volatility processes for emerging markets that have previously been identified in empirical research conducted in developed markets. These characteristics include volatility clustering, long memory, and asymmetry. There appears to be strong evidence of a region-wide regime shift in volatility processes during the Asian crisis of 1997, and a less prevalent regime shift in September 2001. We find evidence from multivariate analysis that the sample separates into two distinct groups: a lower volatility group comprising the Australian and New Zealand indices and a higher volatility group comprising the majority of the other indices.

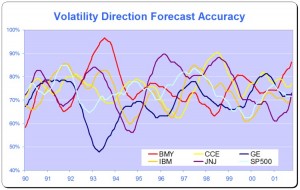

Models developed within the single- and two-factor REGARCH framework of Alizadeh, Brandt and Diebold (2002) provide a good fit for many of the volatility series and in many cases have performance characteristics that compare favorably with other classes of models, with high R-squares, low MAPE and direction prediction accuracy of 70% or more. On the debit side, many of the models demonstrate considerable variation in explanatory power over time, often associated with regime shifts or major market events, and this is typically accompanied by some model parameter drift and/or instability.
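For readers who want to reproduce this kind of evaluation, the two less familiar metrics can be sketched as follows. The definitions below are common conventions and are assumptions here, since the paper's exact formulas are not reproduced in this post; the function names are hypothetical.

```python
import numpy as np

def mape(actual, forecast):
    """Mean absolute percentage error, in percent (assumes no zeros in actual)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return 100.0 * np.mean(np.abs((actual - forecast) / actual))

def direction_accuracy(actual, forecast):
    """Share of periods in which the forecast move has the same sign as the
    realized move. Both moves are measured from the previous actual value
    (an assumed convention)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    actual_move = np.sign(actual[1:] - actual[:-1])
    forecast_move = np.sign(forecast[1:] - actual[:-1])
    return float(np.mean(actual_move == forecast_move))
```

Both functions take aligned arrays of realized and forecast volatility, where `forecast[t]` is the prediction made for period `t`.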

Single-equation ARFIMA-GARCH models appear to be a robust and reliable framework for modeling asset volatility processes, as they are capable of capturing both the short- and long-memory effects in the volatility processes, as well as GARCH effects in the kurtosis process. The available procedures for estimating the degree of fractional integration in the volatility processes produce estimates that appear to vary widely for processes which include both short- and long-memory effects, but the overall conclusion is that long memory effects are at least as important as they are for volatility processes in developed markets. Simple extensions to the single-equation models, which include regressor lags of related volatility series, add significant explanatory power to the models and suggest the existence of Granger-causality relationships between processes.

Extending the modeling procedures to systems of equations provides evidence of two-way Granger causality between certain of the volatility processes and suggests that they are fractionally cointegrated, a finding shared with parallel studies of volatility processes in developed markets.

Download paper here.

Long Memory and Regime Shifts in Asset Volatility

This post covers quite a wide range of concepts in volatility modeling relating to long memory and regime shifts and is based on an article that was published in Wilmott magazine and republished in The Best of Wilmott Vol 1 in 2005. A copy of the article can be downloaded here.

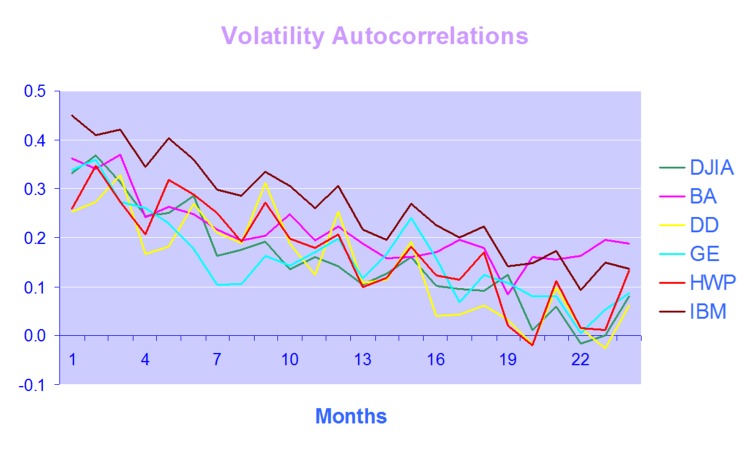

One of the defining characteristics of volatility processes in general (not just financial assets) is the tendency for the serial autocorrelations to decline very slowly. This effect is illustrated quite clearly in the chart below, which maps the autocorrelations in the volatility processes of several financial assets.

Thus we can say that events in the volatility process for IBM, for instance, continue to exert influence on the process almost two years later.
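As a rough illustration of how such autocorrelations are computed, the sketch below estimates the sample autocorrelation function of absolute returns, a common volatility proxy. The data are simulated with a persistent but short-memory volatility process (an assumption made purely for illustration, not real IBM data), so the example shows the mechanics rather than the very slow decay described above.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations of x at lags 0..max_lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[:len(x) - k], x[k:]) / denom
                     for k in range(max_lag + 1)])

# Hypothetical data: returns whose log-volatility follows a persistent AR(1).
rng = np.random.default_rng(0)
n = 5000
log_vol = np.zeros(n)
for t in range(1, n):
    log_vol[t] = 0.98 * log_vol[t - 1] + 0.2 * rng.standard_normal()
returns = np.exp(log_vol) * rng.standard_normal(n)

acf_returns = sample_acf(returns, 50)              # returns themselves
acf_abs_returns = sample_acf(np.abs(returns), 50)  # volatility proxy
```

Plotting `acf_abs_returns` against `acf_returns` should show the contrast between a persistent volatility proxy and an approximately uncorrelated return series.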

This feature is one that is typical of a black noise process, not some kind of rap music variant, but rather:

“a process with a 1/f^β spectrum, where β > 2 (Manfred Schroeder, ‘Fractals, Chaos, Power Laws’). Used in modeling various environmental processes. Is said to be a characteristic of ‘natural and unnatural catastrophes like floods, droughts, bear markets, and various outrageous outages, such as those of electrical power.’ Further, ‘because of their black spectra, such disasters often come in clusters.’” [Wikipedia].

Because of these autocorrelations, black noise processes tend to reinforce or trend, and hence (to some degree) may be forecastable. This contrasts with a white noise process, such as an asset return process, which has a uniform power spectrum, insignificant serial autocorrelations and no discernible trending behavior:

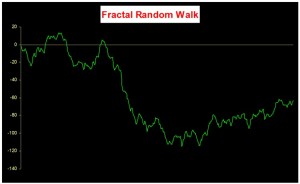

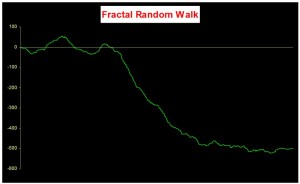

An econometrician might describe this situation by saying that a black noise process is fractionally integrated of order d, where d = H - 1/2, H being the Hurst Exponent. A way to appreciate the difference in behavior between a black noise process and a white noise process is to compare two fractionally integrated random walks generated from the same set of quasi-random numbers using Feder’s (1988) algorithm (see p 32 of the presentation on Modeling Asset Volatility).

As you can see, both random walks follow a similar pattern, but the black noise random walk is much smoother, and the downward trend is more clearly discernible. You can play around with the Feder algorithm, which is coded in the accompanying Excel Workbook on Volatility and Nonlinear Dynamics. Changing the Hurst Exponent parameter H in the worksheet will rerun the algorithm and illustrate a fractal random walk for a black noise (H > 0.5), white noise (H = 0.5) or mean-reverting pink noise (H < 0.5) process.
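For readers without the workbook, a similar experiment can be sketched in Python. Note that this is not Feder's algorithm: it swaps in a truncated moving-average representation of fractionally integrated noise, and it assumes the standard mapping d = H - 0.5 between the fractional integration order and the Hurst exponent. The function names are hypothetical.

```python
import numpy as np

def frac_integration_weights(d, n):
    """First n moving-average weights of the operator (1 - L)^(-d)."""
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        w[k] = w[k - 1] * (k - 1 + d) / k
    return w

def fractal_walk(shocks, hurst):
    """Cumulate fractionally integrated noise built from the given shocks.
    Assumes d = H - 0.5; the filter is truncated at the sample length."""
    d = hurst - 0.5
    w = frac_integration_weights(d, len(shocks))
    noise = np.convolve(shocks, w)[:len(shocks)]
    return np.cumsum(noise)

# The same shocks drive every walk, so only H differs between them.
rng = np.random.default_rng(42)
shocks = rng.standard_normal(1000)
walks = {h: fractal_walk(shocks, h) for h in (0.3, 0.5, 0.8)}
```

With H = 0.5 the weights collapse to a single 1 and the walk is an ordinary random walk; plotting the three paths together should show the H = 0.8 path as the smoothest.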

One way of modeling the kind of behavior demonstrated by volatility processes is by using long memory models such as ARFIMA and FIGARCH (see pp 47-62 of the Modeling Asset Volatility presentation for a discussion and comparison of various long memory models). The article reviews research into long memory behavior and various techniques for estimating long memory models and the coefficient of fractional integration d for a process.

But long memory is not the only possible cause of long term serial correlation. The same effect can result from structural breaks in the process, which can produce spurious autocorrelations. The article goes on to review some of the statistical procedures that have been developed to detect regime shifts, due to Bai (1997), Bai and Perron (1998) and the Iterative Cumulative Sums of Squares methodology due to Aggarwal, Inclan and Leal (1999). The article illustrates how the ICSS technique accurately identifies two changes of regimes in a synthetic GBM process.
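To make the idea concrete, here is a minimal sketch of the centered cumulative-sum-of-squares statistic on which the ICSS approach is built, applied by simple recursive splitting. This is a simplification under stated assumptions: the full ICSS procedure includes additional refinement steps, 1.358 is the usual asymptotic 95% critical value, and the minimum segment length and the simulated volatilities are hypothetical choices.

```python
import numpy as np

CRITICAL_95 = 1.358  # asymptotic 95% critical value for sqrt(T/2) * max|D_k|

def centered_cusum_of_squares(x):
    """D_k = C_k / C_T - k / T, where C_k is the cumulative sum of squares."""
    x = np.asarray(x, dtype=float)
    c = np.cumsum(x ** 2)
    k = np.arange(1, len(x) + 1)
    return c / c[-1] - k / len(x)

def find_break(x):
    """Return (k, stat): k observations precede the break, or k is None."""
    d = centered_cusum_of_squares(x)
    i = int(np.argmax(np.abs(d)))
    stat = float(np.sqrt(len(x) / 2.0) * abs(d[i]))
    return (i + 1 if stat > CRITICAL_95 else None), stat

def segment(x, offset=0, min_len=50):
    """Recursive splitting at significant breaks (simplified ICSS)."""
    if len(x) < 2 * min_len:
        return []
    k, _ = find_break(x)
    if k is None or k < min_len or len(x) - k < min_len:
        return []
    return (segment(x[:k], offset, min_len) + [offset + k]
            + segment(x[k:], offset + k, min_len))

# Synthetic returns with two volatility regime changes (at 500 and 1000).
rng = np.random.default_rng(1)
sigmas = np.repeat([0.01, 0.03, 0.01], 500)
returns = sigmas * rng.standard_normal(1500)
breaks = segment(returns)
```

Once break dates are in hand, each sub-series between consecutive entries of `breaks` can be examined separately for long memory.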

In general, I have found the ICSS test to be a simple and highly informative means of gaining insight about a process representing an individual asset, or indeed an entire market. For example, ICSS detects regime shifts in the process for IBM around 1984 (the time of the introduction of the IBM PC), the automotive industry in the early 1980’s (Chrysler bailout), the banking sector in the late 1980’s (Latin American debt crisis), Asian sector indices in Q3 1997, the S&P 500 index in April 2000 and just about every market imaginable during the 2008 credit crisis. By splitting a series into pre- and post-regime shift sub-series and examining each segment for long memory effects, one can determine the cause of autocorrelations in the process. In some cases, Asian equity indices being one example, long memory effects disappear from the series, indicating that spurious autocorrelations were induced by a major regime shift during the 1997 Asian crisis. In most cases, however, long memory effects persist.

Excel Workbook on Volatility and Nonlinear Dynamics

There are several other topics from chaos theory and nonlinear dynamics covered in the workbook, including:

- Generation of the Sierpinski triangle

- Estimation of the Hurst Exponent in various series (Industrial Production, DJIA, S&P500)

- Logistic and Henon attractors

- Estimation of the fractal dimension and correlation integral for the S&P500 index
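As a flavor of what the Hurst Exponent estimation involves, here is a minimal rescaled-range (R/S) sketch. It is an assumption that R/S analysis is a fair stand-in for the workbook's method, which is not described here; the window sizes are hypothetical, and the estimator is known to be biased upward in small samples.

```python
import numpy as np

def rescaled_range(x):
    """Range of cumulative deviations from the mean, scaled by the std dev."""
    x = np.asarray(x, dtype=float)
    cumdev = np.cumsum(x - x.mean())
    return (cumdev.max() - cumdev.min()) / x.std()

def hurst_rs(x, window_sizes=(16, 32, 64, 128, 256, 512)):
    """Slope of log(mean R/S) against log(window size)."""
    x = np.asarray(x, dtype=float)
    log_n, log_rs = [], []
    for n in window_sizes:
        # Non-overlapping windows of length n.
        chunks = [x[i:i + n] for i in range(0, len(x) - n + 1, n)]
        log_n.append(np.log(n))
        log_rs.append(np.log(np.mean([rescaled_range(c) for c in chunks])))
    slope, _intercept = np.polyfit(log_n, log_rs, 1)
    return slope

# White noise should give an estimate in the neighborhood of 0.5.
rng = np.random.default_rng(7)
white = rng.standard_normal(4096)
h_white = hurst_rs(white)
```

The function should be applied to increments (for example, returns or changes in volatility) rather than to price levels.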

More on these issues in due course.

Modeling Asset Volatility

I am planning a series of posts on the subject of asset volatility and option pricing and thought I would begin with a survey of some of the central ideas. The attached presentation on Modeling Asset Volatility sets out the foundation for a number of key concepts and the basis for the research to follow.

Perhaps the most important feature of volatility is that it is stochastic rather than constant, as envisioned in the Black Scholes framework. The presentation addresses this issue by identifying some of the chief stylized facts about volatility processes and how they can be modelled. Certain characteristics of volatility are well known to most analysts, such as, for instance, its tendency to “cluster” in periods of higher and lower volatility. However, there are many other typical features that are less often rehearsed and these too are examined in the presentation.

Long Memory

For example, while it is true that GARCH models do a fine job of modeling the clustering effect, they typically fail to capture one of the most important features of volatility processes: long-term serial autocorrelation. In the typical GARCH model autocorrelations die away approximately exponentially, and historical events are seen to have little influence on the behavior of the process very far into the future. In volatility processes that is typically not the case, however: autocorrelations die away very slowly and historical events may continue to affect the process many weeks, months or even years ahead.
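The difference between the two decay patterns can be seen in a small numerical comparison. The sketch below contrasts the geometric decay implied for squared GARCH(1,1) residuals with the hyperbolic decay of fractionally integrated noise, ARFIMA(0, d, 0). The parameter values (persistence 0.97, d = 0.4) are hypothetical and chosen only to make the contrast visible.

```python
import numpy as np

def geometric_acf(rho1, persistence, max_lag):
    """Geometrically decaying ACF, as for squared GARCH(1,1) residuals:
    rho_k = rho1 * persistence**(k - 1) for k >= 1."""
    lags = np.arange(1, max_lag + 1)
    return np.concatenate([[1.0], rho1 * persistence ** (lags - 1)])

def arfima_0d0_acf(d, max_lag):
    """ACF of ARFIMA(0, d, 0) with 0 < d < 0.5, via the recursion
    rho_k = rho_{k-1} * (k - 1 + d) / (k - d)."""
    rho = np.empty(max_lag + 1)
    rho[0] = 1.0
    for k in range(1, max_lag + 1):
        rho[k] = rho[k - 1] * (k - 1 + d) / (k - d)
    return rho

d = 0.4
long_memory = arfima_0d0_acf(d, 250)
short_memory = geometric_acf(long_memory[1], 0.97, 250)  # same lag-1 value

for lag in (1, 20, 250):
    print(lag, round(long_memory[lag], 4), round(short_memory[lag], 4))
```

Both series start from the same lag-1 autocorrelation, but at a lag of roughly one trading year the geometric series has all but vanished while the long memory series remains material.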

There are two immediate and very important consequences of this feature. The first is that volatility processes will tend to trend over long periods, a characteristic of Black Noise or Fractionally Integrated processes, compared to the White Noise behavior that typically characterizes asset return processes. Secondly, and again in contrast with asset return processes, volatility processes are inherently predictable, being conditioned to a significant degree on past behavior. The presentation considers the fractional integration framework as a basis for modeling and forecasting volatility.

Mean Reversion vs. Momentum

A puzzling feature of much of the literature on volatility is that it tends to stress the mean-reverting behavior of volatility processes. This appears to contradict the finding that volatility behaves as a reinforcing process, whose long-term serial autocorrelations create a tendency to trend. This leads to one of the most important findings about asset processes in general, and volatility processes in particular: they are simultaneously trending and mean-reverting. One way to understand this is to think of volatility not as a single process, but as the superposition of two processes: a long-term process in the mean, which tends to reinforce and trend, around which there operates a second, transient process that tends to produce short-term spikes in volatility that decay very quickly. In other words, a transient, mean-reverting process interlinked with a momentum process in the mean. The presentation discusses two-factor modeling concepts along these lines, about which I will have more to say later.
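The superposition idea can be illustrated with a toy simulation. The sketch below is not the two-factor specification from the presentation: it assumes, purely for illustration, that log-volatility is the sum of a very persistent AR(1) component (a stand-in for the slowly moving mean) and a rapidly decaying AR(1) component, with hypothetical parameter values.

```python
import numpy as np

def half_life(phi):
    """Number of periods for an AR(1) shock with coefficient phi to halve."""
    return np.log(0.5) / np.log(phi)

def simulate_two_factor_log_vol(n, phi_slow=0.995, phi_fast=0.5,
                                sd_slow=0.03, sd_fast=0.3, seed=0):
    """Return (slow, fast, total) log-volatility paths of length n."""
    rng = np.random.default_rng(seed)
    slow = np.zeros(n)
    fast = np.zeros(n)
    for t in range(1, n):
        slow[t] = phi_slow * slow[t - 1] + sd_slow * rng.standard_normal()
        fast[t] = phi_fast * fast[t - 1] + sd_fast * rng.standard_normal()
    return slow, fast, slow + fast

slow, fast, log_vol = simulate_two_factor_log_vol(2000)
print(half_life(0.995), half_life(0.5))
```

Shocks to the slow component take months of daily observations to halve, while shocks to the fast component halve within a single period, which is how one series can appear both trending and mean-reverting.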

Cointegration

One of the most striking developments in econometrics over the last thirty years, cointegration is now a principal weapon of choice routinely used by quantitative analysts to address research issues ranging from statistical arbitrage to portfolio construction and asset allocation. Back in the late 1990’s I and a handful of other researchers realized that volatility processes exhibited very powerful cointegration tendencies that could be harnessed to create long-short volatility strategies, mirroring the approach much beloved by equity hedge fund managers. In fact, this modeling technique provided the basis for the Caissa Capital volatility fund, which I founded in 2002. The presentation examines characteristics of multivariate volatility processes and some of the ideas that have been proposed to model them, such as FIGARCH (fractionally-integrated GARCH).

Dispersion Dynamics

Finally, one topic that is not considered in the presentation, but on which I have spent much research effort in recent years, is the behavior of cross-sectional volatility processes, which I like to term dispersion. It turns out that, like its univariate cousin, dispersion displays certain characteristics that in principle make it highly forecastable. Given an appropriate model of dispersion dynamics, the question then becomes how to monetize efficiently the insight that such a model offers. Again, I will have much more to say on this subject in future.
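For concreteness, one simple way to measure dispersion is the equal-weighted cross-sectional standard deviation of returns in each period. This particular definition is an assumption made for illustration (weighted or index-relative versions are also used), and the return figures are made up.

```python
import numpy as np

def cross_sectional_dispersion(returns):
    """Equal-weighted cross-sectional standard deviation per period.
    returns: array of shape (n_periods, n_assets)."""
    returns = np.asarray(returns, dtype=float)
    return returns.std(axis=1)

# Hypothetical returns: three periods (rows) by three assets (columns).
example = np.array([[0.01, 0.03, 0.02],
                    [0.00, 0.00, 0.00],
                    [-0.02, 0.02, 0.00]])
dispersion = cross_sectional_dispersion(example)
```

The resulting series has one value per period and can be analyzed with the same time series tools as any univariate volatility process.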