Metal Logic

Precious metals have been in free-fall for several years, as a consequence of the Fed’s actions to stimulate the economy, which also had the effect of goosing the equity and fixed income markets. All that changed towards the end of 2015, as the Fed moved to a tightening posture. So far, 2016 has been a banner year for metals, with spot prices for gold, platinum and silver up 26%, 28% and 44% respectively.

So what are the prospects for metals through the end of the year? We take a shot at predicting the outcome, from a quantitative perspective.

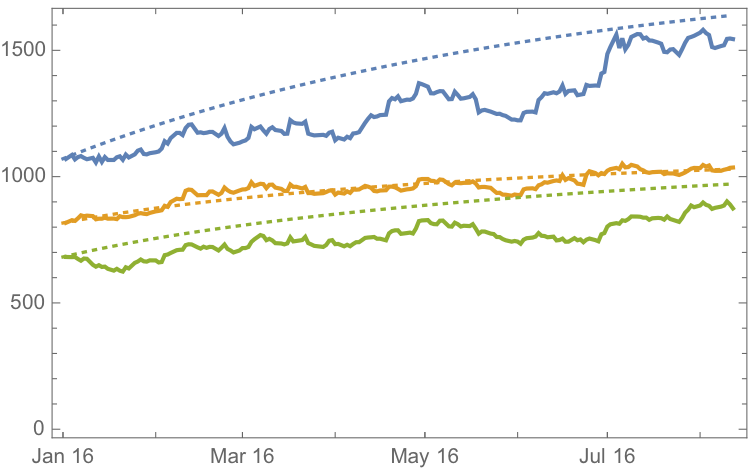

Source: Wolfram Alpha. Spot silver prices are scaled x100

Metals as Correlated Processes

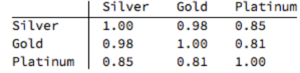

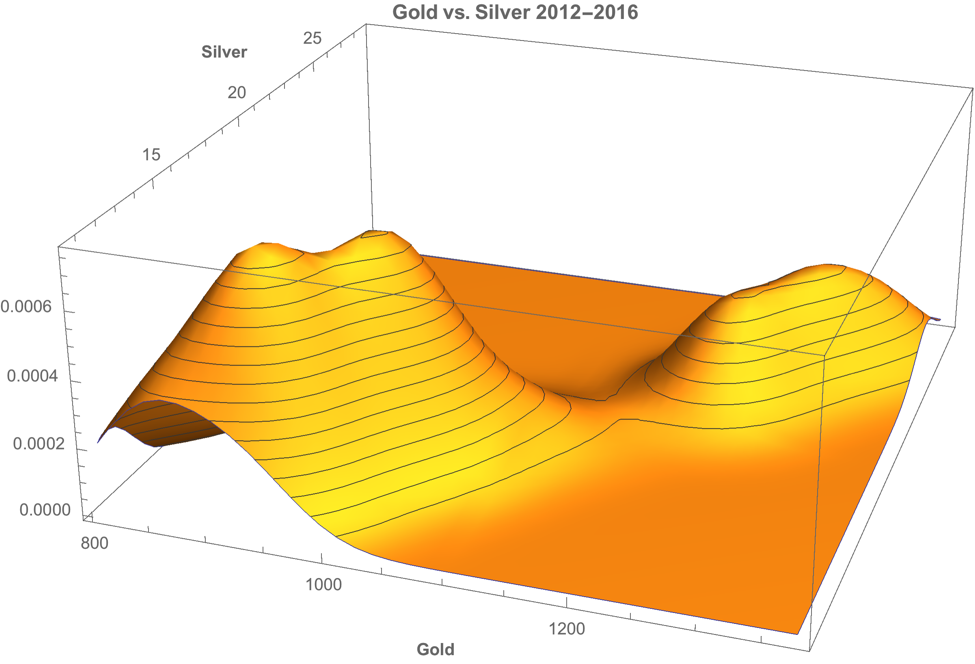

One of the key characteristics of metals is the very high levels of price-correlation between them. Over the period under investigation, Jan 2012 to Aug 2016, the estimated correlation coefficients are as follows:

A plot of the joint density of spot gold and silver prices indicates low- and high-price regimes in which the metals display similar levels of linear correlation.

Simple Metal Trading Models

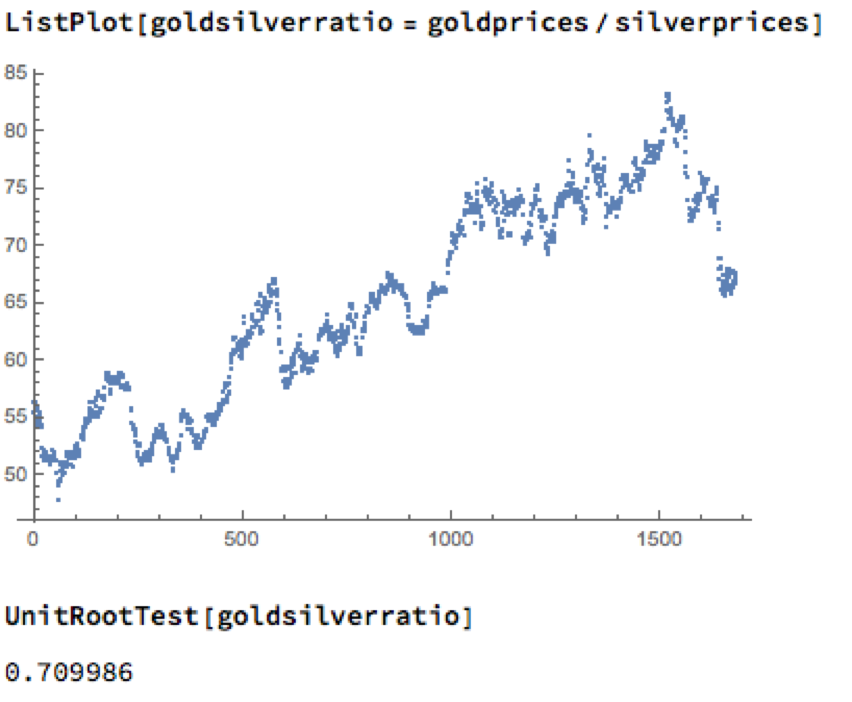

Levels of correlation that are consistently as high as this over extended periods of time are fairly unusual in financial markets and this presents a potential trading opportunity. One common approach is to use the ratios of metal prices as a trading signal. However, taking the ratio of gold to silver spot prices as an example, a plot of the series demonstrates that it is highly unstable and susceptible to long term trends.

A more formal statistical test fails to reject the null hypothesis of a unit root. In simple terms, this means we cannot reliably distinguish between the gold/silver price ratio and a random walk.

Along similar lines, we might consider the difference in log prices of the series. If this proved to be stationary then the log-price series would be cointegrated of order 1 and we could build a standard pairs trading model to buy or sell the spread when prices move too far out of line. However, we find once again that the log-price difference can wander arbitrarily far from its mean, and we are unable to reject the null hypothesis that the series contains a unit root.
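To make the idea of a unit root test concrete, here is a minimal sketch of the basic Dickey-Fuller regression in plain Python. It is an illustration only: it omits the lagged difference terms of the augmented test, the function name is hypothetical, the critical value of -2.86 is the approximate asymptotic 5% value for the constant-only case, and the data is simulated rather than taken from the metals series. It is not necessarily the test used to produce the results above.

```python
import math
import random


def dickey_fuller_t(series):
    """t-statistic on rho in the regression diff(y)_t = alpha + rho * y_{t-1} + e_t."""
    x = series[:-1]                                         # lagged levels y_{t-1}
    dy = [b - a for a, b in zip(series[:-1], series[1:])]   # first differences
    n = len(x)
    mean_x = sum(x) / n
    mean_dy = sum(dy) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (di - mean_dy) for xi, di in zip(x, dy))
    rho = sxy / sxx
    alpha = mean_dy - rho * mean_x
    resid = [di - alpha - rho * xi for xi, di in zip(x, dy)]
    s2 = sum(e * e for e in resid) / (n - 2)                # residual variance
    return rho / math.sqrt(s2 / sxx)


CRITICAL_5PCT = -2.86  # approximate asymptotic critical value (assumption)

# Simulated, strongly mean-reverting AR(1) series, for illustration only.
rng = random.Random(0)
stationary = [0.0]
for _ in range(500):
    stationary.append(0.5 * stationary[-1] + rng.gauss(0, 1))

t_stat = dickey_fuller_t(stationary)
reject_unit_root = t_stat < CRITICAL_5PCT
```

A statistic well below the critical value rejects the unit root, as it does for this simulated stationary series. For the gold/silver ratio and the log-price difference, the corresponding statistic fails to clear that hurdle.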

Linear Models

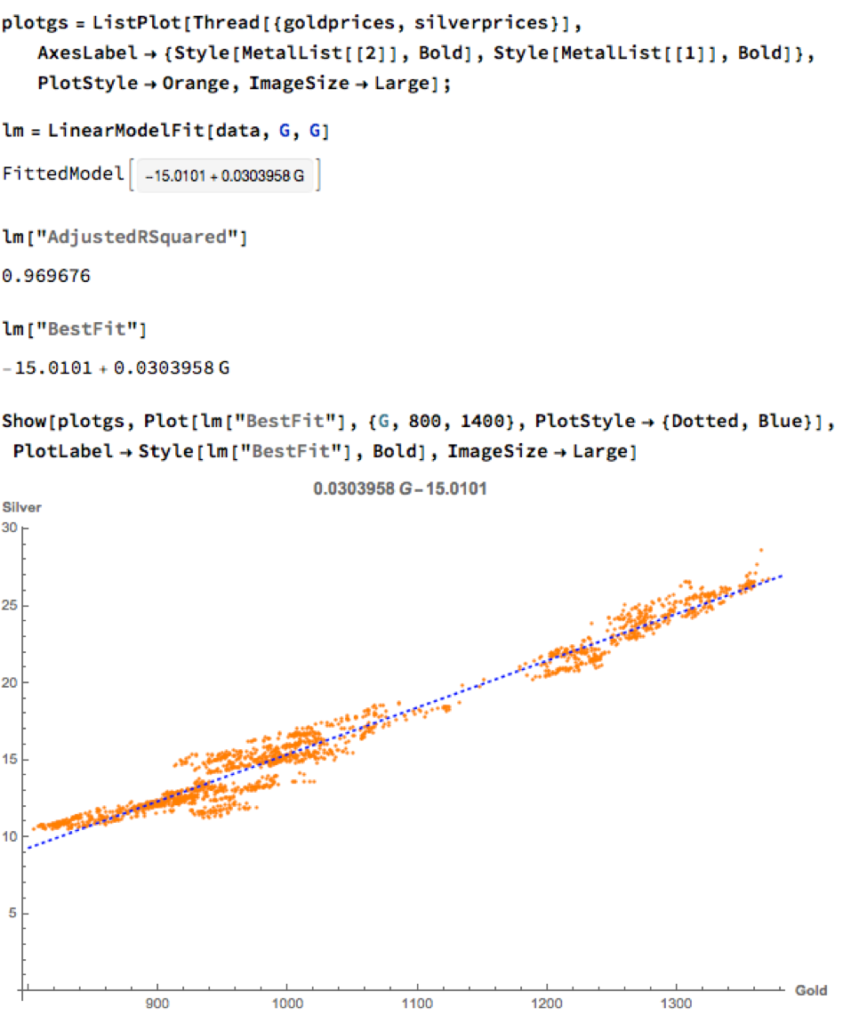

We can hope to do better with a standard linear model, regressing spot silver prices against spot gold prices. The fit of the best linear model is very good, with an R-sq of over 96%:

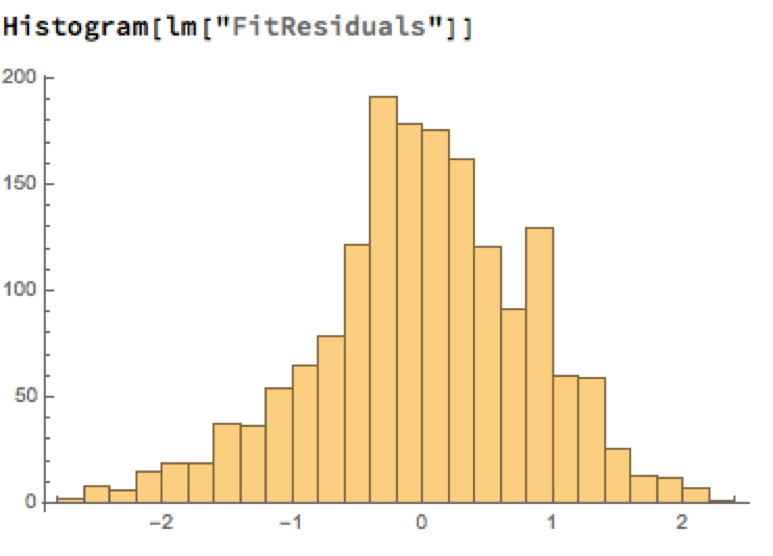

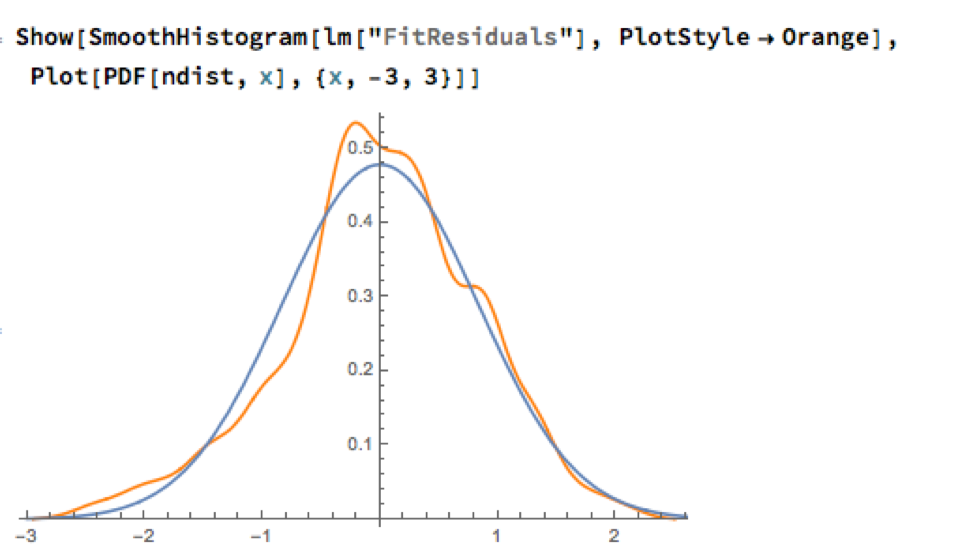

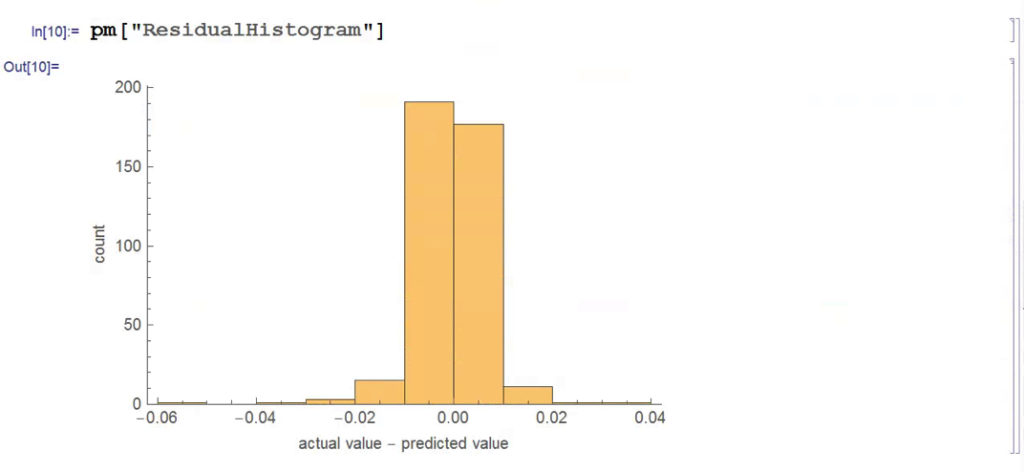

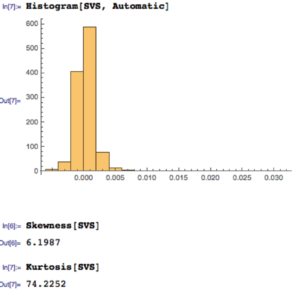

A trader might look to exploit the correlation relationship by selling silver when its market price is greater than the value estimated by the model (and buying when the model price exceeds the market price). Typically the spread is bought or sold when the log-price differential exceeds a threshold level that is set at twice the standard deviation of the price-difference series. The threshold levels derive from the assumption of Normality, which in fact does not apply here, as we can see from an examination of the residuals of the linear model:
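The threshold rule can be sketched in a few lines of Python. This is a simplified illustration, not the model estimated above: it fits the regression and measures the residuals over the same sample (so it looks ahead), it applies the two-standard-deviation band to the model residuals, and the prices are made up. The function names are hypothetical.

```python
import math


def ols_fit(x, y):
    """Least-squares intercept and slope for y = a + b * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def spread_signals(gold, silver, n_sigma=2.0):
    """Return -1 (sell silver), +1 (buy silver) or 0 (no trade) per observation."""
    intercept, slope = ols_fit(gold, silver)
    resid = [s - (intercept + slope * g) for g, s in zip(gold, silver)]
    sd = math.sqrt(sum(r * r for r in resid) / (len(resid) - 1))
    band = n_sigma * sd
    signals = []
    for r in resid:
        if r > band:
            signals.append(-1)   # market price above model price: sell silver
        elif r < -band:
            signals.append(1)    # market price below model price: buy silver
        else:
            signals.append(0)
    return signals


# Made-up prices: silver sits on a straight line in gold, except one rich print.
gold = [1200.0 + 10 * i for i in range(11)]
silver = [0.015 * g for g in gold]
silver[5] += 1.0
signals = spread_signals(gold, silver)
```

Only the single rich observation triggers a sell signal; every other residual stays inside the band.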

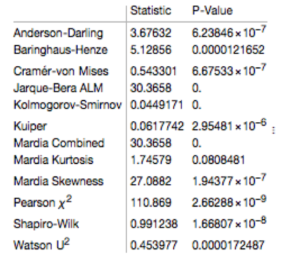

Given the evident lack of fit, especially in the left tail of the distribution, it is unsurprising that all of the formal statistical tests for Normality easily reject the null hypothesis:

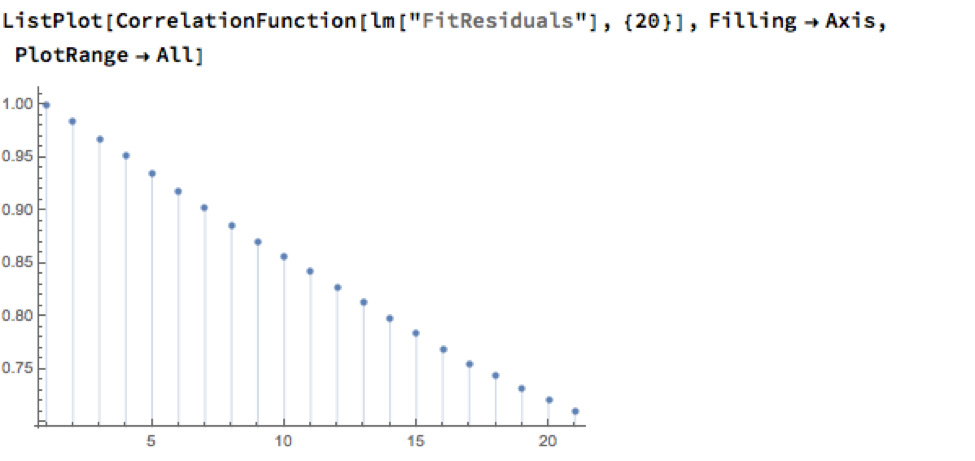

However, Normality, or the lack of it, is not the issue here: one could just as easily set the 2.5% and 97.5% percentiles of the empirical distribution as trade entry points. The real problem with the linear model is that it fails to take into account the time dependency in the price series. An examination of the residual autocorrelations reveals significant patterning, indicating that the model tends to under- or over-estimate the spot price of silver for long periods of time:

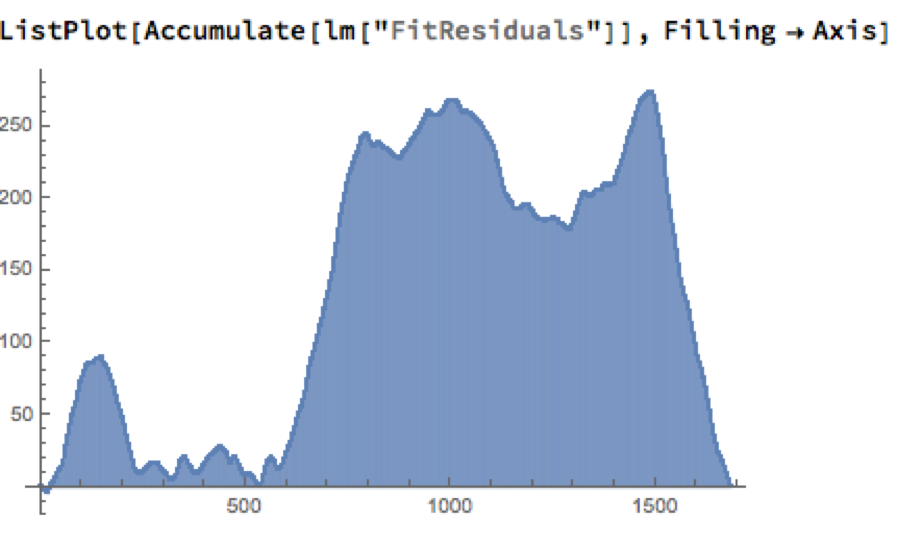

As the following chart shows, the cumulative difference between model and market prices can become very large indeed. A trader risks going bust waiting for the market to revert to model prices.

How does one remedy this? The shortcoming of the simple linear model is that, while it captures the interdependency between the price series very well, it fails to factor in the time dependency of the series. What is required is a model that will account for both features.

Multivariate Vector Autoregression Model

Rather than modeling the metal prices individually, or in pairs, we instead adopt a multivariate vector autoregression approach, modeling all three spot price processes together. The essence of the idea is that spot prices in each metal may be influenced, not only by historical values of the series, but also potentially by current and lagged prices of the other two metals.

Before proceeding we divide the data into two parts: an in-sample data set comprising data from 2012 to the end of 2015 and an out-of-sample period running from Jan-Aug 2016, which we use for model testing purposes. In what follows, I make the simplifying assumption that a vector autoregressive moving average process of order (1, 1) will suffice for modeling purposes, although in practice one would go through a procedure to test a wide spectrum of possible models incorporating moving average and autoregressive terms of varying dimensions.

In any event, our simplified VAR model is estimated as follows:

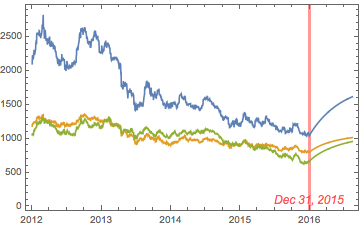
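For readers unfamiliar with vector autoregression, the forecasting recursion is easy to sketch. The Python snippet below iterates a first-order VAR, x(t+1) = c + A x(t), in which each metal's next price depends on the current prices of all three. It is a sketch only: the moving average terms are omitted, and the intercepts, coefficient matrix and starting values are invented placeholders, not the estimates shown above.

```python
def var1_forecast(last, intercept, coef, steps):
    """Iterate x_{t+1} = c + A x_t for `steps` periods and return the path."""
    path = []
    x = list(last)
    for _ in range(steps):
        x = [c_i + sum(a_ij * x_j for a_ij, x_j in zip(row, x))
             for c_i, row in zip(intercept, coef)]
        path.append(x)
    return path


# Hypothetical index levels for (gold, silver, platinum); not real data.
last_obs = [90.0, 100.0, 110.0]
c = [5.0, 5.0, 5.0]
A = [
    [0.90, 0.05, 0.00],   # gold depends on lagged gold and silver
    [0.10, 0.85, 0.00],   # silver depends on lagged gold and silver
    [0.00, 0.10, 0.85],   # platinum depends on lagged silver and platinum
]
path = var1_forecast(last_obs, c, A, steps=8)
```

The off-diagonal entries of `A` are what distinguish this from three separate univariate models: they let a move in one metal feed into the forecasts for the others.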

The chart below combines the actual, in-sample data from 2012-2015, together with the out-of-sample forecasts for each spot metal from January 2016.

It is clear that the model projects a recovery in spot metal prices from the end of 2015. How did the forecasts turn out? In the chart below we compare the actual spot prices with the model forecasts, over the period from Jan to Aug 2016.

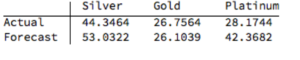

The actual and forecast percentage change in the spot metal prices over the out-of-sample period are as follows:

The VAR model does a good job of forecasting the strong upward trend in metal prices over the first eight months of 2016. It performs exceptionally well in its forecast of gold prices, although its forecasts for silver and platinum are somewhat over-optimistic. Nevertheless, investors would have made money taking a long position in any of the metals on the basis of the model projections.

Relative Value Trade

Another way to apply the model would be to implement a relative value trade, based on the model’s forecast that silver would outperform gold and platinum. Indeed, despite the model’s forecast of silver prices turning out to be over-optimistic, a relative value trade in silver vs. gold or platinum would have performed well: silver gained 44% in the period from Jan-Aug 2016, compared to only 26% for gold and 28% for platinum. A relative value trade entailing a purchase of silver and simultaneous sale of gold or platinum would have produced a gross return of 17% and 15% respectively.

A second relative value trade indicated by the model forecasts, buying platinum and selling gold, would have turned out less successfully, producing a gross return of less than 2%. We will examine the reasons for this in the next section.

Forecasts and Trading Opportunities Through 2016

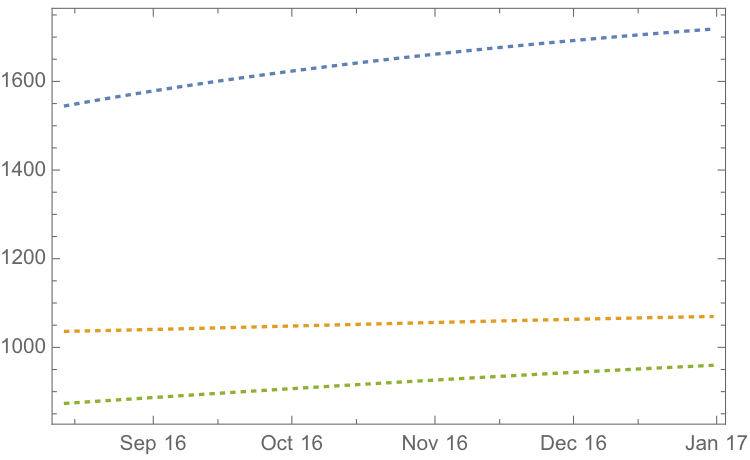

If we re-estimate the VAR model using all of the available data through mid-Aug 2016 and project metal prices through the end of the year, the outcome is as follows:

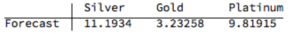

While the positive trend in all three metals is forecast to continue, the new model (which incorporates the latest data) anticipates lower percentage rates of appreciation going forward:

Once again, the model predicts higher rates of appreciation for both silver and platinum relative to gold. So investors have the option to take a relative value trade, hedging a long position in silver or platinum with a short position in gold. While the forecasts for all three metals appear reasonable, the projections for platinum strike me as the least plausible.

The reason is that the major applications of platinum are industrial, most often as a catalyst: the metal is used in catalytic converters in automobiles and in the chemical process of converting naphthas into higher-octane gasolines. Although gold is also used in some industrial applications, its demand is not so driven by industrial uses. Consequently, during periods of sustained economic stability and growth, the price of platinum tends to be as much as twice the price of gold, whereas during periods of economic uncertainty, the price of platinum tends to decrease due to reduced industrial demand, falling below the price of gold. Gold prices are more stable in slow economic times, as gold is considered a safe haven.

This is the most likely explanation of why the gold-platinum relative value trade has not worked out as expected hitherto and is perhaps unlikely to do so in the months ahead, as the slowdown in the global economy continues.

Conclusion

We have shown that simple models of the ratio or differential in the prices of precious metals are unlikely to provide a sound basis for forecasting or trading, due to non-stationarity and/or temporal dependencies in the residuals from such models.

On the other hand, a vector autoregression model that models all three price processes simultaneously, allowing both cross correlations and autocorrelations to be captured, performs extremely well in terms of forecast accuracy in out-of-sample tests over the period from Jan-Aug 2016.

Looking ahead over the remainder of the year, our updated VAR model predicts a continuation of the price appreciation, albeit at a slower rate, with silver and platinum expected to continue outpacing gold. There are reasons to doubt whether the appreciation of platinum relative to gold will materialize, however, due to falling industrial demand as the global economy cools.

Machine Learning Trading Systems

The SPDR S&P 500 ETF (SPY) is one of the most widely traded ETF products on the market, with around $200Bn in assets and average turnover of just under 200M shares daily. The likelihood of being able to develop a money-making trading system using publicly available information might therefore appear to be slim-to-none. So, to give ourselves a fighting chance, we will focus on an attempt to predict the overnight movement in SPY, using data from the prior day’s session.

In addition to the open/high/low and close prices of the preceding day session, we have selected a number of other plausible variables to build out the feature vector we are going to use in our machine learning model:

- The daily volume

- The previous day’s closing price

- The 200-day, 50-day and 10-day moving averages of the closing price

- The 252-day high and low prices of the SPY series

We will attempt to build a model that forecasts the overnight return in the ETF, i.e. [O(t+1)-C(t)] / C(t)
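The feature vector and target variable described above can be assembled along the following lines. This is a Python sketch rather than the Mathematica code used for the study; the function names and the `(open, high, low, close, volume)` tuple layout are assumptions, and the tiny price series is invented so that the example can be checked by hand.

```python
def overnight_returns(opens, closes):
    """[O(t+1) - C(t)] / C(t) for every day t that has a following session."""
    return [(opens[t + 1] - closes[t]) / closes[t] for t in range(len(closes) - 1)]


def moving_average(values, window, t):
    """Mean of the `window` values ending at index t (inclusive)."""
    chunk = values[t - window + 1 : t + 1]
    return sum(chunk) / window


def feature_vector(bars, t, ma_windows=(200, 50, 10), lookback=252):
    """Features for day t from a list of (open, high, low, close, volume) bars.

    Requires t >= 1 and t >= max(lookback, *ma_windows) - 1.
    """
    o, h, l, c, v = bars[t]
    closes = [b[3] for b in bars]
    recent = bars[t - lookback + 1 : t + 1]
    averages = [moving_average(closes, w, t) for w in ma_windows]
    period_high = max(b[1] for b in recent)
    period_low = min(b[2] for b in recent)
    return [o, h, l, c, v, closes[t - 1]] + averages + [period_high, period_low]


# Invented bars, with short windows so the numbers are easy to verify.
bars = [
    (10.0, 11.0, 9.0, 10.0, 100),
    (10.5, 12.0, 10.0, 11.0, 110),
    (11.2, 13.0, 11.0, 12.0, 120),
    (12.1, 14.0, 12.0, 13.0, 130),
]
targets = overnight_returns([b[0] for b in bars], [b[3] for b in bars])
features = feature_vector(bars, 3, ma_windows=(3, 2), lookback=3)
```

With the default windows the first usable observation is the 252nd trading day, since the longest lookback determines how much history is needed.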

In this exercise we use daily data from the beginning of the SPY series up until the end of 2014 to build the model, which we will then test on out-of-sample data running from Jan 2015-Aug 2016. In a high frequency context a considerable amount of time would be spent evaluating, cleaning and normalizing the data. Here we face far fewer problems of that kind. Typically one would standardize the input data to equalize the influence of variables that may be measured on scales of very different orders of magnitude. But in this example all of the input variables, with the exception of volume, are measured on the same scale and so standardization is arguably unnecessary.

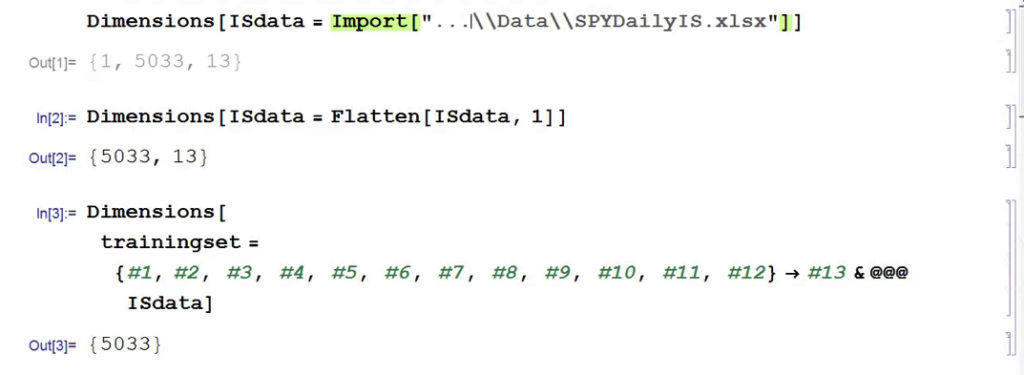

First, the in-sample data is loaded and used to create a training set of rules that map the feature vector to the variable of interest, the overnight return:

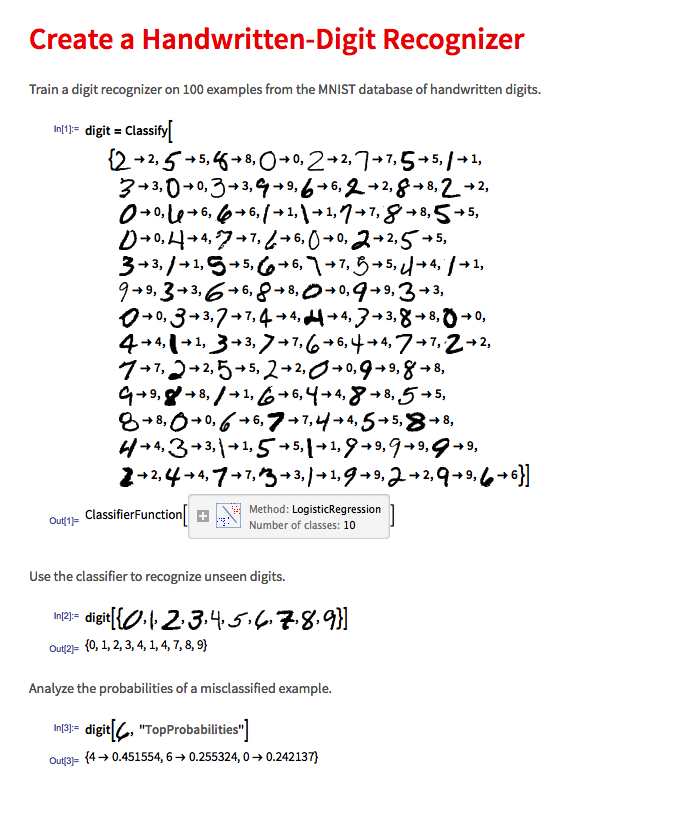

In Mathematica 10 Wolfram introduced a suite of machine learning algorithms that include regression, nearest neighbor, neural networks and random forests, together with functionality to evaluate and select the best performing machine learning technique. These facilities make it very straightforward to create a classifier or prediction model using machine learning algorithms, such as this handwriting recognition example:

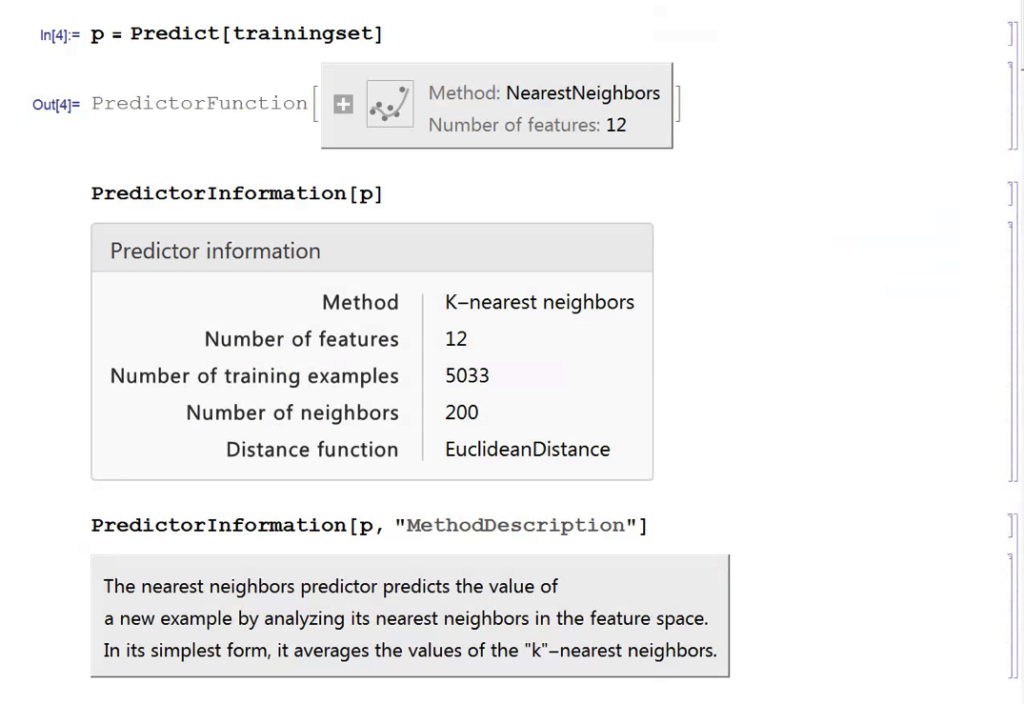

We create a predictive model on the SPY training set, allowing Mathematica to pick the best machine learning algorithm:

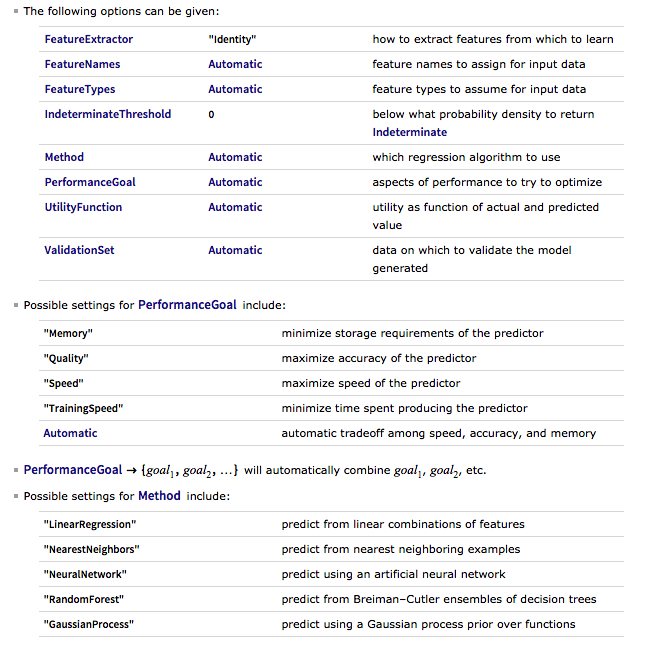

There are a number of options for the Predict function that can be used to control the feature selection, algorithm type, performance type and goal, rather than simply accepting the defaults, as we have done here:

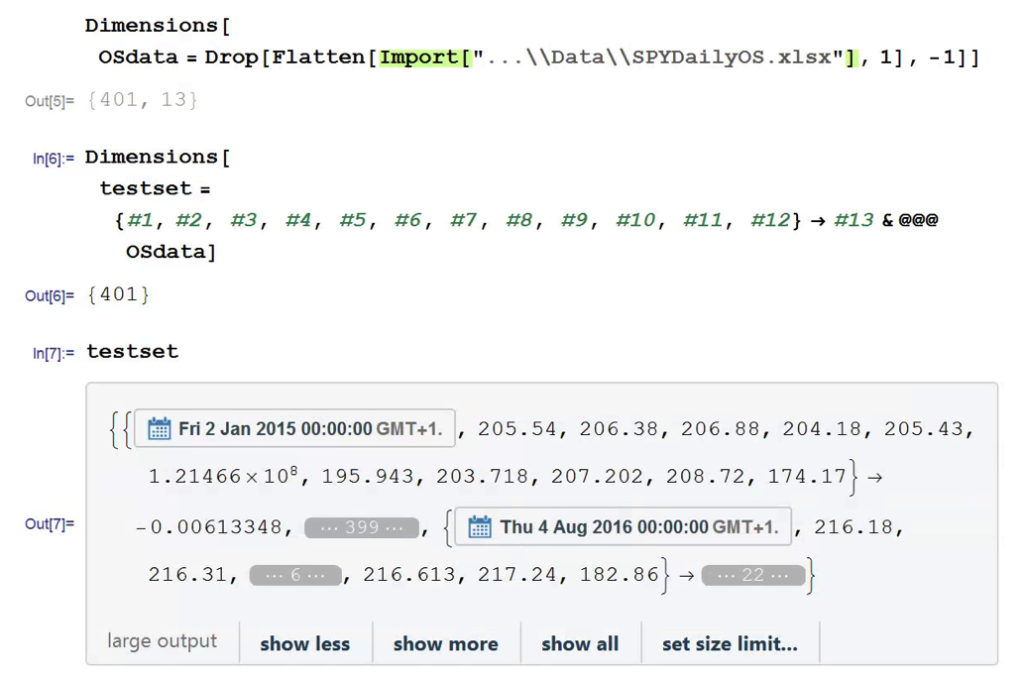

Having built our machine learning model, we load the out-of-sample data from Jan 2015 to Aug 2016, and create a test set:

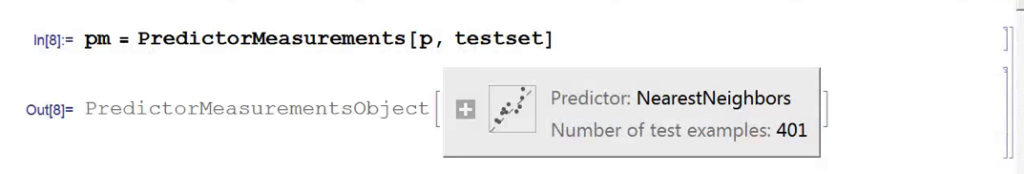

We next create a PredictionMeasurement object, using the Nearest Neighbor model, that can be used for further analysis:

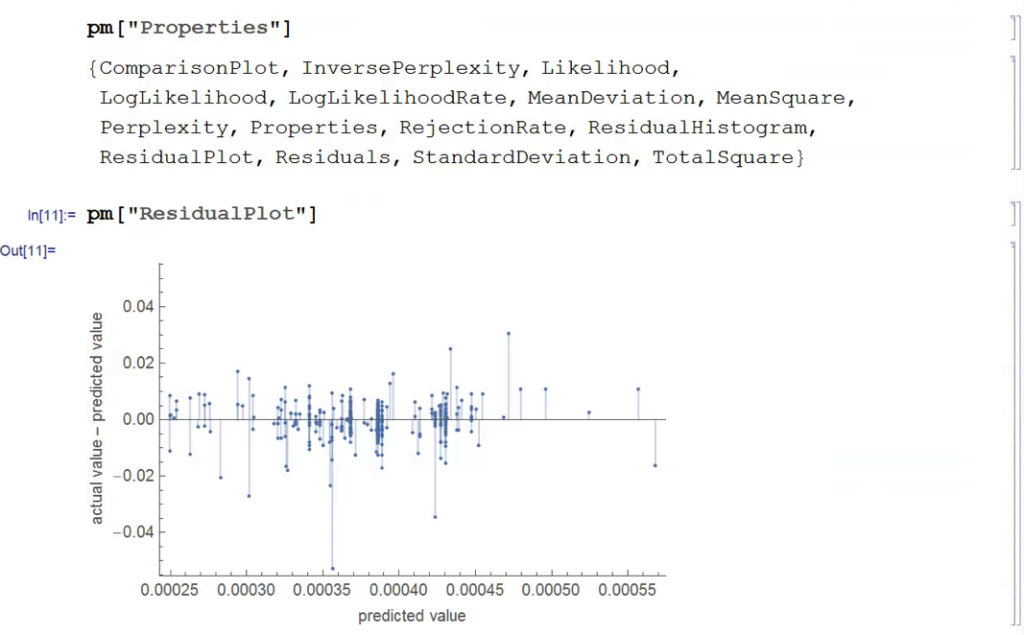

There isn’t much dispersion in the model forecasts, which all have positive value. A common technique in such cases is to subtract the mean from each of the forecasts (and we may also standardize them by dividing by the standard deviation).

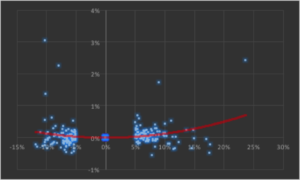
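The de-meaning step, with optional standardization, amounts to the following. This is a Python sketch with a hypothetical function name and invented forecast values, not the Mathematica code used above.

```python
import statistics


def center_forecasts(forecasts, scale=False):
    """Subtract the mean; optionally also divide by the standard deviation."""
    mu = statistics.mean(forecasts)
    centered = [f - mu for f in forecasts]
    if not scale:
        return centered
    sd = statistics.pstdev(forecasts)   # population standard deviation
    return [c / sd for c in centered]


# Invented forecasts that are all positive and tightly clustered.
raw = [0.0010, 0.0012, 0.0014]
demeaned = center_forecasts(raw)
zscores = center_forecasts(raw, scale=True)
```

After centering, the forecasts straddle zero, so their sign can be read as a directional view rather than a uniformly positive drift.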

The scatterplot of actual vs. forecast overnight returns in SPY now looks like this:

There’s still an obvious lack of dispersion in the forecast values, compared to the actual overnight returns, which we could rectify by standardization. In any event, there appears to be a small, nonlinear relationship between forecast and actual values, which holds out some hope that the model may yet prove useful.

From Forecasting to Trading

There are various methods of deploying a forecasting model in the context of creating a trading system. The simplest route, which we will take here, is to apply a threshold gate and convert the filtered forecasts directly into a trading signal. But other approaches are possible, for example:

- Combining the forecasts from multiple models to create a prediction ensemble

- Using the forecasts as inputs to a genetic programming model

- Feeding the forecasts into the input layer of a neural network model designed specifically to generate trading signals, rather than forecasts

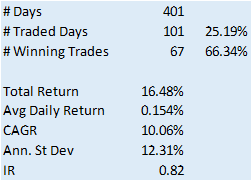

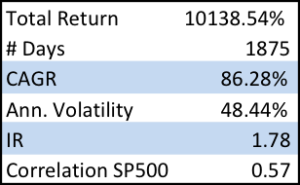

In this example we will create a trading model by applying a simple filter to the forecasts, picking out only those values that exceed a specified threshold. This is a standard trick used to isolate the signal in the model from the background noise. We will accept only the positive signals that exceed the threshold level, creating a long-only trading system, i.e. we ignore forecasts that fall below the threshold level. We buy SPY at the close when the forecast exceeds the threshold and exit any long position at the next day’s open. This strategy produces the following pro-forma results:
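The trading rule just described reduces to a few lines of code. The Python sketch below uses hypothetical function names and invented forecasts and prices, and it ignores commissions and slippage; it shows the mechanics of the threshold gate, not the results of the actual system.

```python
def long_only_overnight(forecasts, closes, next_opens, threshold):
    """Buy at the close when the forecast exceeds the threshold; sell at the next open.

    Returns the list of per-trade overnight returns.
    """
    trades = []
    for f, c, o in zip(forecasts, closes, next_opens):
        if f > threshold:
            trades.append((o - c) / c)
    return trades


def win_rate(trades):
    """Fraction of trades with a positive return (0.0 if there are no trades)."""
    if not trades:
        return 0.0
    return sum(1 for r in trades if r > 0) / len(trades)


# Invented standardized forecasts and prices, for illustration only.
forecasts = [0.5, -0.2, 1.3, 0.9, 0.1]
closes = [100.0, 101.0, 102.0, 103.0, 104.0]
next_opens = [100.5, 100.8, 101.5, 103.6, 104.2]
trades = long_only_overnight(forecasts, closes, next_opens, threshold=0.4)
rate = win_rate(trades)
```

Raising the threshold trades less often but, if the model has any skill, concentrates the trades in the forecasts with the strongest signal.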

Conclusion

The system has some quite attractive features, including a win rate of over 66% and a CAGR of over 10% for the out-of-sample period.

Obviously, this is a very basic illustration: we would want to factor in trading commissions, and the slippage incurred entering and exiting positions in the post- and pre-market periods, which will negatively impact performance, of course. On the other hand, we have barely begun to scratch the surface in terms of the variables that could be considered for inclusion in the feature vector, and which may increase the explanatory power of the model.

In other words, in reality, this is only the beginning of a lengthy and arduous research process. Nonetheless, this simple example should be enough to give the reader a taste of what’s involved in building a predictive trading model using machine learning algorithms.

The Swan of Deadwood

Spending 12-14 hours a day managing investors’ money doesn’t leave me a whole lot of time to sit around watching TV. And since I have probably less than 10% of the ad-tolerance of a typical American audience member, I inevitably turn to TiVo, Netflix, or similar, to watch a commercial-free show. Which means that I am inevitably several years behind the cognoscenti of the au-courant. This has its pluses: I avoid a lot of drivel that way.

So it was that I recently tuned in to watch Deadwood, a masterpiece of modern drama written by the talented David Milch, of NYPD Blue fame. The setting of the show is unpromising: a mud-caked camp in South Dakota in the late 19th century that appears to portend yet another formulaic Western featuring liquor, guns, gals and gold and not much else. The first episode appeared at first to confirm my lowest expectations. I struggled through the second. But by the third I was hooked.

What makes Deadwood such a triumph are its finely crafted plots and intricate sub-plots; the many varied and often complex characters, superbly played by Ian McShane (with outstanding performances by Brad Dourif and Powers Boothe, amongst an abundance of others, no less gifted); and, of course, the dialogue.

Yes, the dialogue: hardly the crowning glory of the typical Hollywood Western. And here, to make matters worse, almost every sentence uttered by many of the characters is replete with such shocking profanity that one is eventually numbed into accepting it as normal. But once you get past that, something strange and rather wonderful overtakes you: a sense of being carried along on a river of creative wordsmith-ing that at times eddies, bubbles, plunges and roars its way through scenes that are as comedic, dramatic and action-packed as any I have seen on film. For those who have yet to enjoy the experience, I offer one small morsel:

https://www.youtube.com/watch?v=RdJ4TQ3TnNo

Milch as Shakespeare?

Around the start of Series 2 a rather strange idea occurred to me that, try as I might, I was increasingly unable to suppress as the show progressed: that the writing – some of it at least – was almost Shakespearian in its ingenuity and, at times, lyrical complexity.

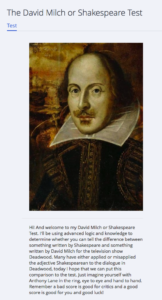

Convinced that I had taken leave of my senses I turned to Google and discovered, to my surprise, that there is a whole cottage industry of Deadwood fans who had made the same connection. There is even – if you can imagine it – an online quiz that tests if you are able to identify the source of a number of quotes that might come from the show, or one of the Bard’s many plays. I kid you not:

Intrigued, I took the test and scored around 85%. Not too bad for a science graduate, although I expect most English majors would top 90%-95%. That gave me an idea: could one develop a machine learning algorithm to do the job?

Here’s how it went.

Milch or Shakespeare? – A Machine Learning Classification Algorithm

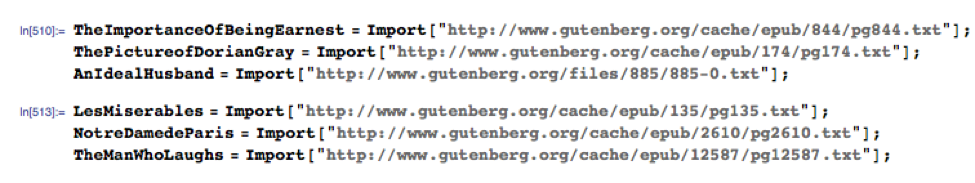

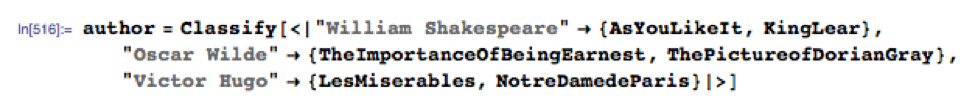

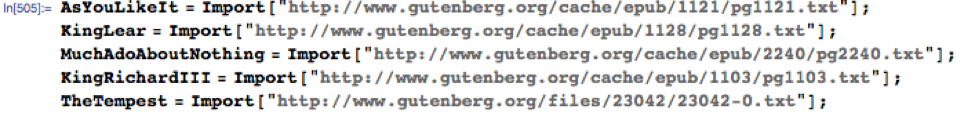

We start by downloading the text of a representative selection of Shakespeare’s plays, avoiding several of the better-known works from which many of the quotations derive:

For testing purposes, let’s download a sample of classic works by other authors:

Let’s build an initial test classifier, as follows:

It seems to work ok:

So far so good. Let’s import the script for Deadwood series 1-3:

DeadWood = Import["……../Dropbox/Documents/Deadwood-Seasons-1-3-script.txt"];

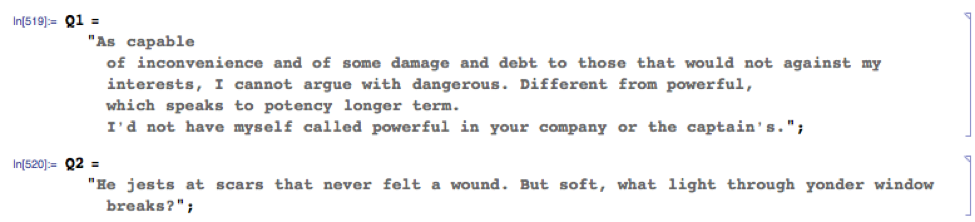

Next, let’s import the quotations used in the online test:

etc

We need to remove the relevant quotes from the Deadwood script file used to train the classifier, of course (otherwise it’s cheating!). We will strip an additional 200 characters before the start of each quotation, and 500 characters after each quotation, just for good measure:

and so on….
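The stripping step is straightforward to reproduce in any language. Here is a Python sketch of the idea, with a hypothetical function name and a synthetic string standing in for the script; the 200- and 500-character margins are the ones described above.

```python
def strip_quotation(script, quote, before=200, after=500):
    """Remove every occurrence of `quote`, plus surrounding context, from `script`."""
    if not quote:
        return script
    pos = script.find(quote)
    while pos != -1:
        start = max(0, pos - before)
        end = min(len(script), pos + len(quote) + after)
        script = script[:start] + script[end:]
        pos = script.find(quote)
    return script


# Synthetic stand-in for the script text.
script = "a" * 300 + "QUOTE" + "b" * 600
cleaned = strip_quotation(script, "QUOTE")
```

Removing the surrounding context as well guards against the classifier recognizing a test quotation from the lines immediately around it.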

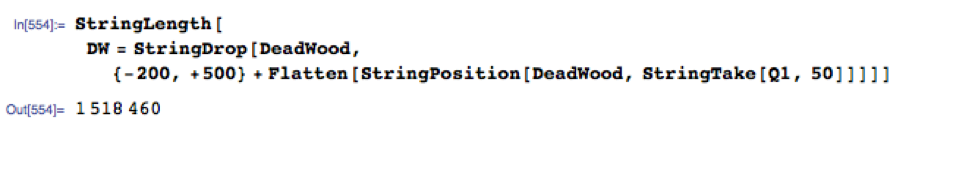

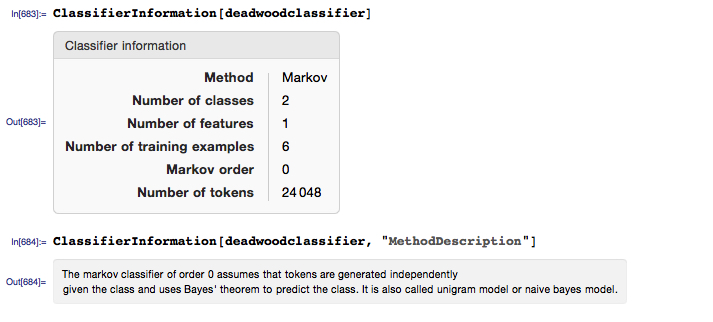

Now we are ready to build our classifier:

And we can obtain some information about the classifier, as follows:

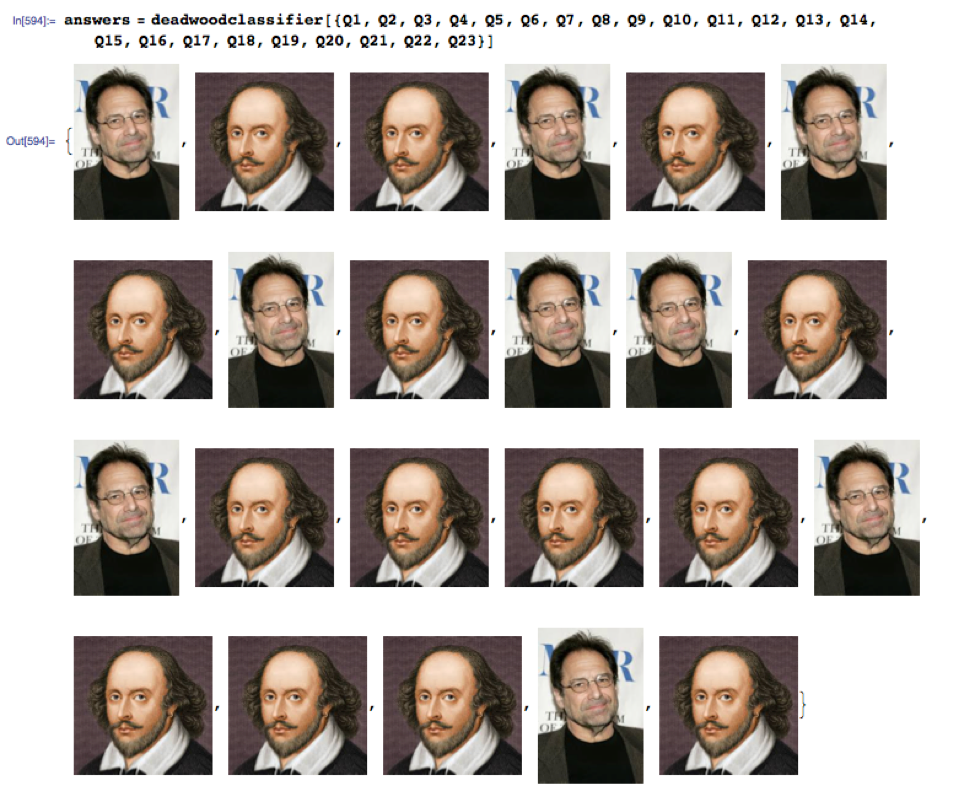

Let’s see how it performs:

Or, if you prefer tabular form:

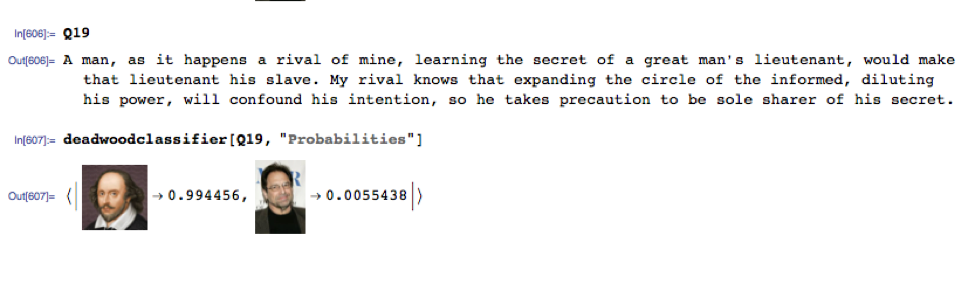

The machine learning model scored a total of 19 correct answers out of 23, or 82%.
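For readers without Mathematica, a comparable experiment can be sketched with a bag-of-words Naive Bayes classifier. To be clear, this is a stand-in technique written in Python, not the algorithm that Mathematica selected, and the two training snippets are invented toy text rather than real lines from either author.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesAuthor:
    """Multinomial Naive Bayes with add-one smoothing."""

    def __init__(self):
        self.word_counts = {}         # label -> Counter of tokens
        self.doc_counts = Counter()   # label -> number of training texts

    def train(self, text, label):
        self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.doc_counts[label] += 1

    def predict(self, text):
        vocab = set().union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        tokens = tokenize(text)
        scores = {}
        for label, counts in self.word_counts.items():
            total = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)
            for tok in tokens:
                score += math.log((counts[tok] + 1) / (total + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Invented toy training text, for illustration only.
model = NaiveBayesAuthor()
model.train("thou art a villain and thy tongue doth lie", "Shakespeare")
model.train("you gonna sell that claim or you gonna stand there", "Milch")
guess = model.predict("thou doth lie")
```

A classifier this simple keys almost entirely on vocabulary, which is exactly where a modern-sounding line from Shakespeare or an archaic-sounding line from Milch would be expected to trip it up.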

Fooling the Machine

Let’s take a look at some of the questions the algorithm got wrong.

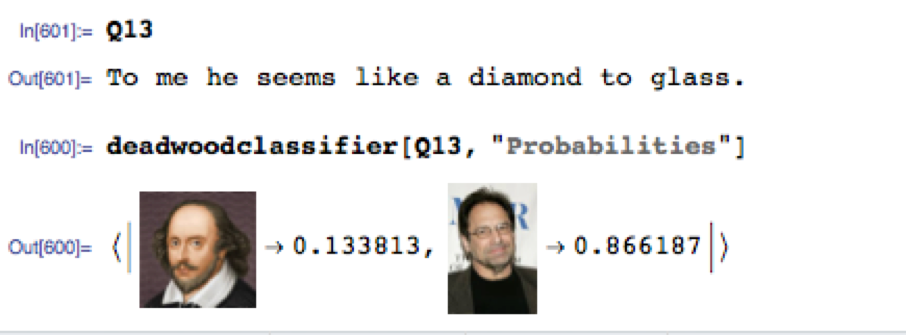

Quotation no. 13 is challenging, as it comes from Pericles, one of Shakespeare’s lesser-known plays and the idiom appears entirely modern. The classifier assigns an 87% probability of Milch being the author (I got it wrong too).

On Quotation no. 15 the algorithm was just as mistaken, but in the other direction (I got this one right, but only because I recalled the monologue from the episode):

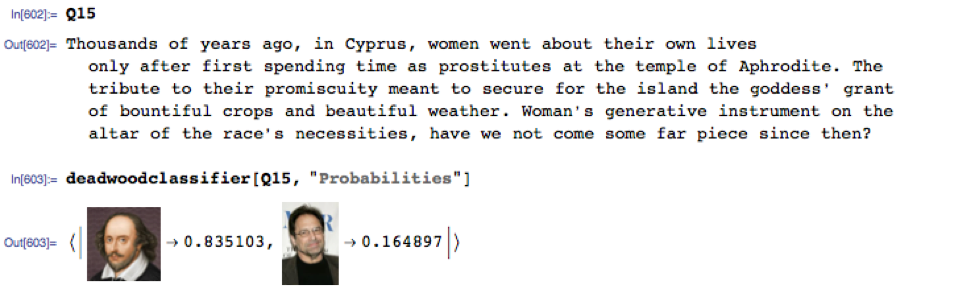

Quotation no.16 strikes me as entirely Shakespearian in form and expression and the classifier thought so too, favoring the Bard by 86% to only 14% for Milch:

Quotation no. 19 had the algorithm fooled completely. It’s a perfect illustration of a typical kind of circumlocution favored by Shakespeare that is imitated so well by Milch’s Deadwood characters:

Conclusion

The model clearly picked up distinguishing characteristics of the two authors’ writings that enabled it to correctly classify 82% of the quotations, quite a high percentage and much better than we would expect to do by tossing a coin, for example. It’s a respectable performance, but I might have hoped for greater accuracy from the model, which scored about the same as I did.

I guess those who see parallels in the writing of William Shakespeare and David Milch may be onto something.

Postscript

The Hollywood Reporter recently published a story entitled

“How the $100 Million ‘NYPD Blue’ Creator Gambled Away His Fortune”.

It’s a fascinating account of the trials and tribulations of this great author, one worthy of Deadwood itself.

A silver lining to this tragic tale, perhaps, is that Milch’s difficulties may prompt him into writing the much-desired Series 4.

One can hope.

Outperforming Winton Capital

Winton Capital Management is a renowned quant fund and one of the world’s largest, most successful CTAs. The firm’s flagship investment strategy, the Winton Diversified Program, follows a systematic investment process that is based on statistical research to invest globally long and short, using leverage, in a diversified range of liquid instruments, including exchange traded futures, forwards, currency forwards traded over the counter, equity securities and derivatives linked to such securities.

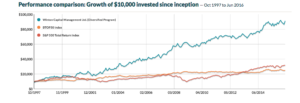

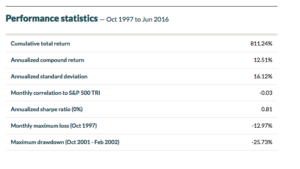

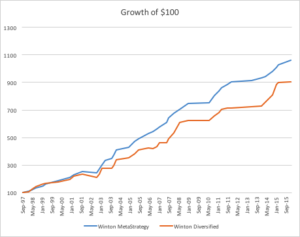

The performance of the program over the last 19 years has been impressive, especially considering its size, which now stands at around $13Bn in assets.

Source: CTA Performance

A Meta-Strategy to Beat Winton Capital

With that background, the idea of improving the exceptional results achieved by David Harding and his army of quants seems rather far-fetched, but I will take a shot. In what follows, I am assuming that we are permitted to invest and redeem an investment in the program at no additional cost, other than the stipulated fees. This is, of course, something of a stretch, but we will make that assumption based on the further stipulation that we will make no more than two such trades per year.

The procedure we will follow has been described in various earlier posts – in particular see this post, in which I discuss the process of developing a Meta-Strategy: Improving A Hedge Fund Investment – Cantab Capital’s Quantitative Aristarchus Fund

Using the performance data of the WDP from 1997-2012, we develop a meta-strategy that seeks to time an investment in the program, taking profits after reaching a specified profit target, which is based on the TrueRange, or after holding for a maximum of 8 months. The key part of the strategy code is as follows:

If MarketPosition = 1 then begin

TargPrL = EntryPrice + TargFr * TrueRange;

Sell("ExTarg-L") next bar at TargPrL limit;

If Time >= TimeEx or BarsSinceEntry >= NBarEx1 or (BarsSinceEntry >= NBarEx3 and C > EntryPrice)

or (BarsSinceEntry >= NBarEx2 and C < EntryPrice) then

Sell("ExMark-L") next bar at market;

end;
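For readers who don't use EasyLanguage, the exit logic above can be restated in Python as follows. The function and argument names are my own, and the parameter values in the example are hypothetical placeholders (apart from the eight-bar maximum holding period, which assumes monthly bars); the optimized values are not given in the post.

```python
def target_price(entry_price, targ_fr, true_range):
    """Profit target: entry price plus a multiple of the true range."""
    return entry_price + targ_fr * true_range


def should_exit_at_market(time_now, bars_since_entry, close, entry_price,
                          time_ex, n_bar_ex1, n_bar_ex2, n_bar_ex3):
    """Mirror of the market-exit condition for a long position."""
    return (
        time_now >= time_ex
        or bars_since_entry >= n_bar_ex1                              # maximum holding period
        or (bars_since_entry >= n_bar_ex3 and close > entry_price)    # in profit for long enough
        or (bars_since_entry >= n_bar_ex2 and close < entry_price)    # losing for long enough
    )


# Hypothetical parameter values, for illustration only.
params = dict(time_ex=1500, n_bar_ex1=8, n_bar_ex2=3, n_bar_ex3=6)
target = target_price(entry_price=100.0, targ_fr=2.0, true_range=5.0)
exit_losing = should_exit_at_market(1000, 4, 95.0, 100.0, **params)
exit_winning = should_exit_at_market(1000, 4, 105.0, 100.0, **params)
```

With these placeholder settings a losing position is cut after three bars, while a winning one is given six bars to reach the profit target before being closed at market.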

It appears that by timing an investment in the program we can improve the CAGR by around 0.86% per year, while reducing annual volatility by around 4.4%. As a consequence, the Sharpe ratio of the meta-strategy is considerably higher: 1.14 vs 0.78 for the WDP.

Like most trend-following CTA strategies, Winton’s WDP has positive skewness, an attractive feature that means that the strategy has a preponderance of returns in the positive right tail of the distribution. Also in common with most CTA strategies, on the other hand, the WDP suffers from periodic large drawdowns, in this case amounting to -25.73%.

The meta-strategy improves on the baseline profile of the WDP, increasing the positive skew, while substantially reducing downside risk, leading to a much lower maximum drawdown of -16.94%.
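The two risk measures quoted here, the Sharpe ratio and the maximum drawdown, can both be computed from a series of monthly returns. The Python sketch below uses hypothetical function names and an invented return series; it assumes simple annualization by the square root of 12 and a zero risk-free rate by default, which may differ from the conventions behind the figures above.

```python
import math
import statistics


def annualized_sharpe(monthly_returns, annual_rf=0.0):
    """Mean excess monthly return over its standard deviation, scaled by sqrt(12)."""
    excess = [r - annual_rf / 12 for r in monthly_returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(12)


def max_drawdown(monthly_returns):
    """Largest peak-to-trough decline of the compounded equity curve (a negative number)."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in monthly_returns:
        equity *= 1 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1)
    return worst


# Invented monthly returns, for illustration only.
returns = [0.10, -0.20, 0.05, -0.10, 0.30]
sharpe = annualized_sharpe(returns)
drawdown = max_drawdown(returns)
```

Because the drawdown is measured from the running peak of the equity curve, a timing overlay that sits out part of a losing streak can reduce it even when the underlying program's returns are unchanged.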

Conclusion

Despite its stellar reputation in the CTA world, investors could theoretically improve on the performance of Winton Capital’s flagship program by using a simple meta-strategy that times entry to and exit from the program using simple technical indicators. The meta-strategy produces higher returns and lower volatility, with higher positive skewness and lower downside risk.

Falling Water

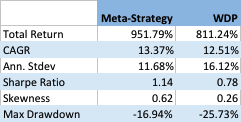

The current 15-year drought in the South West is the most severe since recordkeeping for the Colorado River began in 1906. Lake Mead, which supplies much of the water to Colorado Basin communities, is now more than half empty.

A 120 foot high band of rock, bleached white by the water, and known as the “bathtub ring” encircles the lake, a stark reminder of the water crisis that has enveloped the surrounding region. The Colorado River takes a 1,400 mile journey from the Rockies to Mexico, irrigating over 5 million acres of farmland in the Basin states of Wyoming, Utah, Colorado, New Mexico, Nevada, Arizona, and California.

The Colorado River Compact, signed in 1922, enshrined the States’ water rights in law, and Mexico was added to the roster in 1944, taking the total allocation to over 16.5 million acre-feet per year. But the average freshwater input to the lake over the century from 1906 to 2005 reached only 15 million acre-feet. The river can’t come close to meeting current demand and the problem is only likely to get worse. A 2009 study found that rainfall in the Colorado Basin could fall by as much as 15% over the next 50 years and that shortfalls in deliveries could occur 60% to 90% of the time.

Impact on Las Vegas

With an average of only 4 inches of rain a year, and daily high temperatures of 103 °F during the summer, Las Vegas is perhaps the most hard pressed to meet the demand of its 2 million residents and 40 million visitors annually.

Despite its conspicuous consumption, from the tumbling fountains of the Bellagio to the Venetian’s canals, since 2002, Las Vegas has been obliged to cut its water use by a third, from 314 gallons per capita a day to 212. The region recycles around half of its wastewater which is piped back into Lake Mead, after cleaning and treatment. Residents are allowed to water their gardens no more than one day a week in peak season, and there are stiff fines for noncompliance.

The Third Straw

Historically, two intake pipes carried water from Lake Mead to Las Vegas, about 25 miles to the west. In 2012, realizing that the highest of these, at 1,050 feet, would soon be sucking air, the Southern Nevada Water Authority began construction of a new pipeline. Known as the Third Straw, Intake No. 3 reaches 200 feet deeper into the lake, to keep water flowing for as long as there’s water to pump. The new pipeline, which commenced operations in 2015, doesn’t draw more water from the lake than before, or make the surface level drop any faster. But it will keep taps flowing in Las Vegas homes and casinos even if drought-stricken Lake Mead drops to its lowest levels.

Modeling Water Levels in Lake Mead

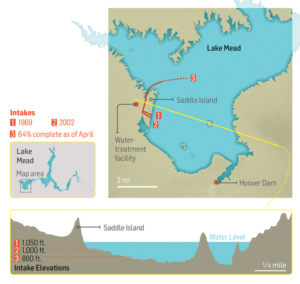

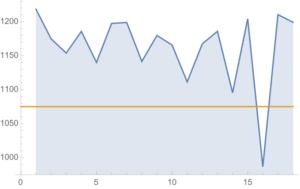

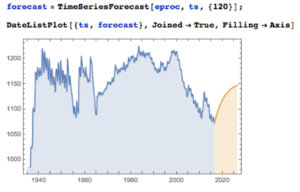

The monthly reported water levels in Lake Mead from Feb 1935 to June 2016 are shown in the chart below. The reference line is the drought level, historically defined as 1,125 feet.

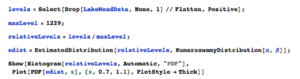

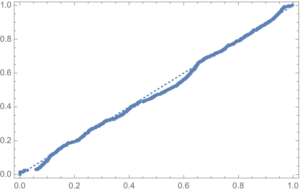

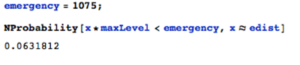

One statistical technique widely applied in hydrology involves fitting a Kumaraswamy distribution to the relative water level. According to the Arizona Game and Fish Department, the maximum lake level is 1,229 feet. We model the water level relative to the maximum level, as follows.

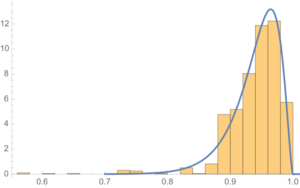
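The Kumaraswamy distribution is defined on the unit interval and has a simple closed-form cumulative distribution function, F(x) = 1 - (1 - x^a)^b, which is part of its appeal for bounded quantities such as relative reservoir levels. A Python sketch of how it would be used follows. The function names are hypothetical, and the shape parameters in the example are arbitrary placeholders, not the values fitted above.

```python
MAX_LEVEL_FT = 1229.0  # maximum lake level quoted in the text


def kumaraswamy_cdf(x, a, b):
    """P(X <= x) for a Kumaraswamy(a, b) variable on [0, 1]."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return 1.0 - (1.0 - x ** a) ** b


def prob_below(level_ft, a, b, max_level_ft=MAX_LEVEL_FT):
    """Probability that the lake level is at or below `level_ft`."""
    return kumaraswamy_cdf(level_ft / max_level_ft, a, b)


# Placeholder shape parameters, chosen only to exercise the formula.
p_half = kumaraswamy_cdf(0.5, a=2.0, b=3.0)
```

Given fitted values of `a` and `b`, a call such as `prob_below(1075.0, a, b)` would return the modeled probability of the lake standing at or below a given level.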

The fit of the distribution appears quite good, even in the tails:

ProbabilityPlot[relativeLevels, edist]

Since water levels have been below the drought level for some time, let’s instead consider the “emergency” level, 1,075 feet. According to this model, there is just over a 6% chance of Lake Mead hitting the emergency level and, consequently, a high probability of breaching the emergency threshold some time before the end of 2017.
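The step from a 6% chance to a "high probability" of a breach rests on compounding over many observations. As a rough check, assume the 6% applies to each monthly observation, that observations are independent, and that 18 months remain between mid-2016 and the end of 2017 (all three are simplifying assumptions on my part):

```python
def prob_at_least_one(p_single, n_periods):
    """Probability of at least one occurrence in n independent periods."""
    return 1.0 - (1.0 - p_single) ** n_periods


# Assumed inputs: 6% per monthly observation, 18 monthly observations.
p_breach = prob_at_least_one(0.06, 18)
```

Under those assumptions the chance of at least one breach comes out at roughly two in three.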

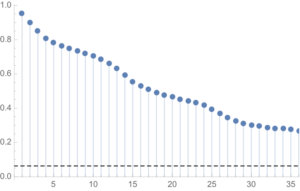

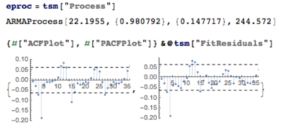

One problem with this approach is that it assumes that each observation is drawn independently from a random variable with the estimated distribution. In reality, there are high levels of autocorrelation in the series, as might be expected: lower levels last month typically increase the likelihood of lower levels this month. The chart of the autocorrelation coefficients makes this pattern clear, with statistically significant coefficients at lags of up to 36 months.

ts["ACFPlot"]
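For reference, the sample autocorrelation at a given lag is computed as follows. This is a plain Python sketch with a hypothetical function name, shown on a toy series; the usual approximate 95% significance band is plus or minus 1.96 divided by the square root of the number of observations.

```python
import math


def autocorrelation(series, lag):
    """Sample autocorrelation of `series` at the given lag."""
    n = len(series)
    mean = sum(series) / n
    denom = sum((x - mean) ** 2 for x in series)
    num = sum((series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n))
    return num / denom


def significance_band(n_obs):
    """Approximate 95% band for the autocorrelations of a white-noise series."""
    return 1.96 / math.sqrt(n_obs)


# Toy trending series, for illustration only.
toy = [1.0, 2.0, 3.0, 4.0, 5.0]
rho_1 = autocorrelation(toy, 1)
```

Coefficients that stay outside the band for many lags, as they do for the lake levels, indicate that the observations are far from independent.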

An alternative methodology that enables us to take account of the autocorrelation in the process is time series analysis. We proceed to fit an autoregressive moving average (ARMA) model as follows:

tsm = TimeSeriesModelFit[ts, "ARMA"]

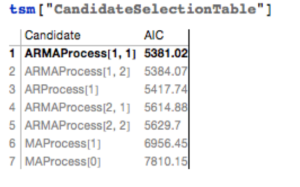

The best fitting model is an ARMA(1,1) model, according to the AIC criterion:

Applying the fitted ARMA model, we forecast the water level in Lake Mead over the next ten years as shown in the chart below. Given that a stationary ARMA model is mean-reverting, it is not surprising to see the model forecasting a return to normal levels.
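The mean reversion in the forecasts follows directly from the ARMA(1,1) recursion: beyond the first step, the forecast deviation from the long-run mean shrinks by a factor of the autoregressive coefficient each period. The Python sketch below illustrates this with a hypothetical function name and placeholder parameters; the fitted coefficients are not reproduced here.

```python
def arma11_forecast(last_value, last_shock, mu, phi, theta, steps):
    """Point forecasts from x_t - mu = phi * (x_{t-1} - mu) + e_t + theta * e_{t-1}."""
    path = []
    deviation = phi * (last_value - mu) + theta * last_shock   # one step ahead
    for _ in range(steps):
        path.append(mu + deviation)
        deviation *= phi   # future shocks have zero expectation
    return path


# Placeholder parameters, for illustration only (not the fitted values).
forecasts = arma11_forecast(last_value=1075.0, last_shock=0.0,
                            mu=1150.0, phi=0.5, theta=0.3, steps=3)
```

The closer the autoregressive coefficient is to one, the slower the forecasts converge on the mean; the moving average term only affects the first step.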

There is some evidence of lack of fit in the ARMA model, as shown in the autocorrelations of the model residuals:

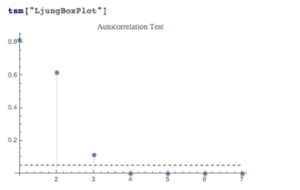

A formal test reveals that residual autocorrelations at lags 4 and higher are jointly statistically significant:

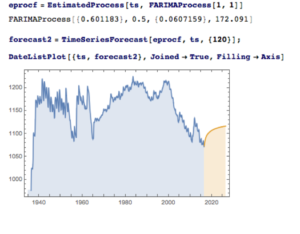

The slowly decaying pattern of autocorrelations in the water level series suggests a possible “long memory” effect, which can be better modelled as a fractionally integrated process. The forecasts from such a model, like the ARMA model forecasts, display a tendency to revert to a long term mean; but the reversion process is dampened by the reinforcing, long-memory effect captured in the FARIMA model.
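What makes a fractionally integrated model "long memory" is the slow decay of the weights in the fractional differencing operator (1 - L)^d. These follow a simple recursion, sketched below in Python with a hypothetical function name and an arbitrary illustrative value of d.

```python
def frac_diff_weights(d, n_terms):
    """Coefficients of (1 - L)^d: w_0 = 1 and w_k = w_{k-1} * (k - 1 - d) / k."""
    weights = [1.0]
    for k in range(1, n_terms):
        weights.append(weights[-1] * (k - 1 - d) / k)
    return weights


# d = 1 recovers ordinary first differencing; d = 0.4 is an arbitrary example.
first_difference = frac_diff_weights(1.0, 4)
long_memory = frac_diff_weights(0.4, 4)
```

With d = 1 the weights cut off after a single lag, whereas for fractional d they decay gradually, so observations from the distant past continue to influence the forecast.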

The Prospects for the Next Decade

Taking the view that the water level in Lake Mead forms a stationary statistical process, the likelihood is that water levels will rise to 1,125 feet or more over the next ten years, easing the current water shortage in the region.

On the other hand, there are good reasons to believe that there are exogenous (deterministic) factors in play, specifically the over-consumption of water at a rate greater than the replenishment rate from average rainfall levels. Added to this, plausible studies suggest that average rainfall in the Colorado Basin is expected to decline over the next fifty years. Under this scenario, the water level in Lake Mead will likely continue to deteriorate, unless more stringent measures are introduced to regulate consumption.

Economic Impact

The drought in the South West affects far more than just the water levels in Lake Mead, of course. One study found that California’s agriculture sector alone had lost $2.2Bn and some 17,000 seasonal and part-time jobs in 2014, due to drought. Agriculture uses more than 80% of the State’s water, according to Fortune magazine, which goes on to identify the key industries most affected, including agriculture, food processing, semiconductors, energy, utilities and tourism.

In the energy sector, for example, the loss of hydroelectric power cost CA around $1.4Bn in 2014, according to non-profit research group Pacific Institute. Although Intel pulled its last fabrication plant from California in 2009, semiconductor manufacturing is still a going concern in the state. Maxim Integrated, TowerJazz, and TSI Semiconductors all still have fabrication plants in the state. And they need a lot of water. A single semiconductor fabrication plant can use as much water as a small city. That means the current plants could represent three cities worth of consumption.

The drought is also bad news for water utilities, of course. The need to conserve water raises the priority on repair and maintenance, and that means higher costs and lower profit. Complicating the problem, California lacks any kind of management system for its water supply and can’t measure the inflows and outflows to ground water levels at any particular time.

The Bureau of Reclamation has studied more than two dozen options for conserving and increasing water supply, including importation, desalination and reuse. While some were disregarded for being too costly or difficult, the bureau found that the remaining options, if instituted, could yield 3.7 million acre feet per year in savings and new supplies, increasing to 7 million acre feet per year by 2060. Agriculture is the biggest user by far and has to be part of any solution. In the near term, the agriculture industry could reduce its use by 10 to 15 percent without changing the types of crops it grows by using new technology, such as using drip irrigation instead of flood irrigation and monitoring soil moisture to prevent overwatering, the Pacific Institute found.

Conclusion

We can anticipate that a series of short term fixes, like the “Third Straw”, will be employed to kick the can down the road as far as possible, but research now appears almost unanimous in finding that drought is having a deleterious, long term effect on the economics of the South Western states. Agriculture is likely to have to bear the brunt of the impact, but adverse consequences will also be felt in industries as disparate as food processing, semiconductors and utilities. California, with the largest agricultural industry, by far, is likely to be hardest hit. The Las Vegas region may be far less vulnerable, having already taken aggressive steps to conserve and reuse water supply and charge economic rents for water usage.

The Amazon Killer

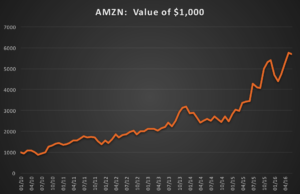

Amazon (NASDAQ:AMZN) has been on a tear over the last decade, especially since the financial crisis of 2008. If you had been smart (or lucky) enough to buy the stock at the beginning of 2010, each $1,000 you invested would now be worth over $5,700, giving a CAGR of over 31%.

Source: Yahoo! Finance

It’s hard to argue with success, but could you have done better? The answer, surprisingly, is yes – and by a wide margin.

Introducing AMZN+

I am going to reveal this mystery stock in due course and, I promise you, the investment recommendation is fully actionable. For now, let’s just refer to it as AMZN+.

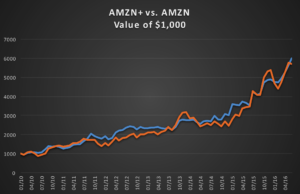

A comparison between investments made in AMZN and AMZN+ over the period from January 2010 to June 2016 is shown in the following chart.

Visually, there doesn’t appear to be much of a difference in overall performance. However, it is apparent that AMZN+ is a great deal less volatile than its counterpart.

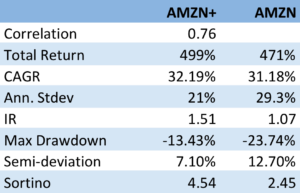

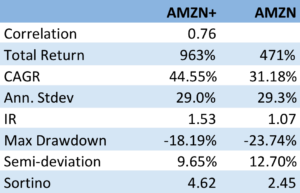

The table below gives a more telling account of the relative outperformance by AMZN+.

The two investments are very highly correlated, with AMZN+ producing an extra 1% per annum in CAGR over the 6 ½ year period.

The telling distinction, however, lies on the risk side of the equation. Here AMZN+ outperforms AMZN by a wide margin, with an annual standard deviation of only 21% compared to 29%. What this means is that AMZN+ produces roughly 40% more return than AMZN per unit of risk (information ratio 1.51 vs. 1.07).

The more conservative risk profile of AMZN+ is also reflected in lower maximum drawdown (-13.43% vs -23.74%), semi-deviation and higher Sortino Ratio (see this article for an explanation of these terms).

Bottom line: you can produce around the same rate of return with substantially less risk by investing in AMZN+, rather than AMZN.

Comparing Equally Risky Investments

There is another way to make the comparison, which some investors might find more appealing. Risk is, after all, an altogether more esoteric subject than return, which every investor understands.

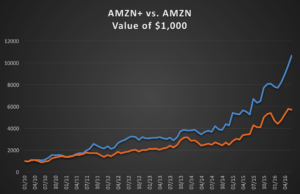

So let’s say the investor adopts the same risk budget as for his original investment in AMZN, i.e. an annual volatility of just over 29%. We can produce the same overall level of risk in AMZN+, equalizing the riskiness of the two investments, simply by leveraging the investment in AMZN+ by a factor of 1.36, using margin money: that is, we borrow $360 and invest a total of $1,360 in AMZN+ for each $1,000 we would have invested in AMZN. Look at the difference in performance:

The investor’s total return in AMZN+ would have been 963%, almost double the return in AMZN over the same period and with a CAGR of over 44.5%, more than 13% per annum higher than AMZN.

Note that, despite having an almost identical annual standard deviation, AMZN+ still enjoys a lower maximum drawdown and downside risk than AMZN.
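The leverage calculation behind this comparison can be sketched in a few lines of Python. The function names and the `borrow_rate` parameter are illustrative assumptions; the article does not state what financing cost, if any, was applied to the margin loan.

```python
def leverage_to_match_vol(target_vol, asset_vol):
    """Leverage at which an asset with volatility asset_vol reaches target_vol."""
    return target_vol / asset_vol


def leveraged_return(asset_return, leverage, borrow_rate=0.0):
    """One-period return on equity when (leverage - 1) is borrowed per unit of equity."""
    return leverage * asset_return - (leverage - 1.0) * borrow_rate
```

Using the rounded volatilities quoted above, `leverage_to_match_vol(0.29, 0.21)` gives about 1.38. The factor of 1.36 used in the text presumably reflects the unrounded figures.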

The Big Reveal

Ok, so what is this mystery stock, AMZN+? Actually it isn’t a stock: it’s a simple portfolio, rebalanced monthly, with 66% of the investment being made in AMZN and 34% in the Direxion Daily 20+ Yr Trsy Bull 3X ETF (NYSEArca: TMF).

Well, that’s a little bit of a cheat, although not much of one: it isn’t too much of a challenge to put $667 of every $1,000 in AMZN and the remaining $333 in TMF, rebalancing the portfolio at the end of every month.
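For readers who want to experiment, the monthly rebalancing can be sketched in Python as follows. The function name is hypothetical, the monthly return series are inputs you would supply yourself, and transaction costs are ignored.

```python
def rebalanced_growth(returns_a, returns_b, weight_a, initial=1000.0):
    """Value path of a two-asset portfolio that is reset to
    weight_a / (1 - weight_a) at the start of every month."""
    value = initial
    path = [value]
    for ra, rb in zip(returns_a, returns_b):
        # Because the weights are restored each month, the portfolio's
        # monthly return is the weighted average of the two asset returns.
        value *= 1.0 + weight_a * ra + (1.0 - weight_a) * rb
        path.append(value)
    return path
```

Passing the monthly returns of AMZN and TMF with `weight_a=0.66` would trace out the AMZN+ value path under these simplifying assumptions.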

The next question an investor might want to ask is: what other stocks could I apply this approach to? The answer is: a great many of them. And where you end up, ultimately, is with the discovery that you can eliminate a great deal of unnecessary risk with a portfolio of around 20-30 well-chosen assets.

The Fundamental Lesson from Portfolio Theory

We saw that AMZN incurred a risk of 29% in annual standard deviation, compared to only 21% for the AMZN+ portfolio. What does the investor gain by taking that extra 8% in annual risk? Nothing at all – in fact he would have achieved a slightly worse return.

The key take-away from this simple example is the fundamental law of modern portfolio theory:

The market will not compensate an investor for taking diversifiable risk

As they say, diversification is the only free lunch on Wall Street. So make the most of it.

Crash-Proof Investing

As markets continue to make new highs against a backdrop of ever diminishing participation and trading volume, investors have legitimate reasons for being concerned about prospects for the remainder of 2016 and beyond, even without considering the myriad economic and geopolitical risks that now confront the US and global economies. Against that backdrop, remaining fully invested is a test of nerves for those whose instinct is that they may be picking up pennies in front of an oncoming steamroller. On the other hand, there is a sense of frustration in cashing out, only to watch markets surge another several hundred points to new highs.

In this article I am going to outline some steps investors can take to match their investment portfolios to suit current market conditions in a way that allows them to remain fully invested, while safeguarding against downside risk. In what follows I will be using our own Strategic Volatility Strategy, which invests in volatility ETFs such as the iPath S&P 500 VIX ST Futures ETN (NYSEArca:VXX) and the VelocityShares Daily Inverse VIX ST ETN (NYSEArca:XIV), as an illustrative example, although the principles are no less valid for portfolios comprising other ETFs or equities.

Risk and Volatility

Risk may be defined as the uncertainty of outcome and the most common way of assessing it in the context of investment theory is by means of the standard deviation of returns. One difficulty here is that one may never ascertain the true rate of volatility – the second moment – of a returns process; one can only estimate it. Hence, while one can be certain what the closing price of a stock was at yesterday’s market close, one cannot say what the volatility of the stock was over the preceding week – it cannot be observed the way that a stock price can, only estimated. The most common estimator of asset volatility is, of course, the sample standard deviation. But there are many others that are arguably superior: Log-Range, Parkinson, Garman-Klass to name but a few (a starting point for those interested in such theoretical matters is a research paper entitled Estimating Historical Volatility, Brandt & Kinlay, 2005).

Leaving questions of estimation to one side, one issue with using standard deviation as a measure of risk is that it treats upside and downside risk equally – the “risk” that you might double your money in an investment is regarded no differently than the risk that you might see your investment capital cut in half. This is not, of course, how investors tend to look at things: they typically allocate a far higher cost to downside risk, compared to upside risk.

One way to address the issue is by using a measure of risk known as the semi-deviation. This is estimated in exactly the same way as the standard deviation, except that it is applied only to negative returns. In other words, it seeks to isolate the downside risk alone.

This leads directly to a measure of performance known as the Sortino Ratio. Like the more traditional Sharpe Ratio, the Sortino Ratio is a measure of risk-adjusted performance – the average return produced by an investment per unit of risk. But, whereas the Sharpe Ratio uses the standard deviation as the measure of risk, for the Sortino Ratio we use the semi-deviation. In other words, we are measuring the expected return per unit of downside risk.

There may be a great deal of variation in the upside returns of a strategy that would penalize the risk-adjusted returns, as measured by its Sharpe Ratio. But using the Sortino Ratio, we ignore the upside volatility entirely and focus exclusively on the volatility of negative returns (technically, the returns falling below a given threshold, such as the risk-free rate; here we use zero as our benchmark). This is, arguably, closer to the way most investors tend to think about their investment risk and return preferences.

In a scenario where, as an investor, you are particularly concerned about downside risk, it makes sense to use a performance measure that isolates it. It follows that, rather than aiming to maximize the Sharpe Ratio of your investment portfolio, you might do better to focus on the Sortino Ratio.
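The two ratios can be compared with a short Python sketch. It assumes one common convention for the downside deviation: squared shortfalls below the threshold are averaged over all observations. The article does not spell out its exact estimator, so treat the function names and this convention as assumptions. The ratios are per period, not annualized.

```python
import math
import statistics


def downside_deviation(returns, threshold=0.0):
    """Root mean square of the shortfalls below the threshold."""
    shortfalls = [min(r - threshold, 0.0) for r in returns]
    return math.sqrt(sum(s * s for s in shortfalls) / len(returns))


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return per unit of (sample) standard deviation."""
    excess = [r - risk_free for r in returns]
    return statistics.mean(excess) / statistics.stdev(excess)


def sortino_ratio(returns, threshold=0.0):
    """Mean return over the threshold per unit of downside deviation."""
    mean_excess = sum(r - threshold for r in returns) / len(returns)
    return mean_excess / downside_deviation(returns, threshold)
```

A strategy whose variability comes mostly from large gains will show a Sortino Ratio well above its Sharpe Ratio, because only the losses enter the denominator.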

Factor Risk and Correlation Risk

Another type of market risk that is often present in an investment portfolio is correlation risk. This is the risk that your investment portfolio correlates to some other asset or investment index. Such risks are often occluded – hidden from view – only to emerge when least wanted. For example, it might be supposed that a “dollar-neutral” portfolio, i.e. a portfolio comprising equity long and short positions of equal dollar value, might be uncorrelated with the broad equity market indices. It might well be. On the other hand, the portfolio might become correlated with such indices during times of market turbulence; or it might correlate positively with some sector indices and negatively with others; or with market volatility, as measured by the CBOE VIX index, for instance.

Where such dependencies are included by design, they are not a problem; but when they are unintended and latent in the investment portfolio, they often create difficulties. The key here is to test for such dependencies against a variety of risk factors that are likely to be of concern. These might include currency and interest rate risk factors, for example; sector indices; or commodity risk factors such as oil or gold (in a situation where, for example, you are investing in a portfolio of mining stocks). Once an unwanted correlation is identified, the next step is to adjust the portfolio holdings to try to eliminate it. Typically, this can only be done on average, meaning that, while there is no correlation bias over the long term, there may be periods of positive, negative, or alternating correlation over shorter time horizons. Either way, it’s important to know.

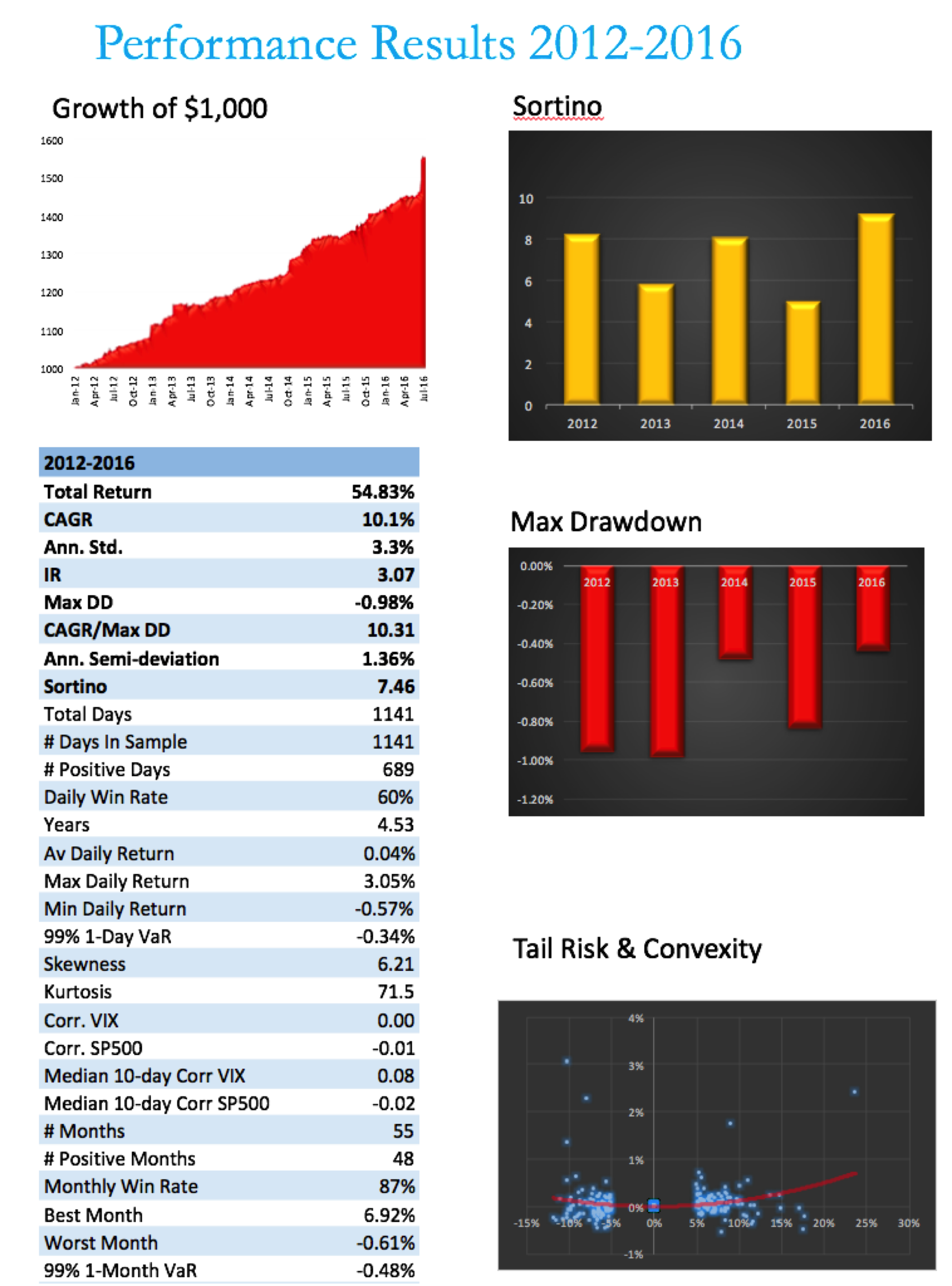

Using the Strategic Volatility Strategy as an example, we aim to maximize the Sortino Ratio, subject also to maintaining very low levels of correlation to the principal risk factors of concern to us, the S&P 500 and VIX indices. Our aim is to create a portfolio that is broadly impervious to changes in the level of the overall market, or in the level of market volatility.

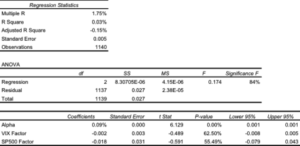

One method of quantifying such dependencies is with linear regression analysis. By way of illustration, in the table below are shown the results of regressing the daily returns from the Strategic Volatility Strategy against the returns in the VIX and S&P 500 indices. Both factor coefficients are statistically indistinguishable from zero, i.e. there is no significant (linear) dependency. However, the constant coefficient, referred to as the strategy alpha, is both positive and statistically significant. In simple terms, the strategy produces a return that is consistently positive, on average, and which is not dependent on changes in the level of the broad market, or its volatility. By contrast, for example, a commonplace volatility strategy that entails capturing the VIX futures roll would show a negative correlation to the VIX index and a positive dependency on the S&P 500 index.
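A factor regression of this kind can be sketched in plain Python by solving the normal equations. The function name `ols` is hypothetical. The sketch returns only point estimates (alpha followed by the factor betas); judging statistical significance, as in the table, would also require standard errors, which are omitted here.

```python
def ols(y, columns):
    """Least-squares fit of y on an intercept plus the given regressors.
    Returns [alpha, beta_1, ..., beta_k]."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(X[0])
    # Augmented matrix of the normal equations (X'X) b = X'y.
    A = [
        [sum(X[i][r] * X[i][c] for i in range(n)) for c in range(k)]
        + [sum(X[i][r] * y[i] for i in range(n))]
        for r in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[r][k] / A[r][r] for r in range(k)]
```

Called as `ols(strategy_returns, [vix_returns, spx_returns])`, the first element of the result is the estimated alpha and the remaining two are the factor loadings.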

Tail Risk

Ever since the publication of Nassim Taleb’s “The Black Swan”, investors have taken a much greater interest in the risk of extreme events. If the bursting of the tech bubble in 2000 was not painful enough, investors surely appear to have learned the lesson thoroughly after the financial crisis of 2008. But even if investors understand the concept, the question remains: what can one do about it?

The place to start is by looking at the fundamental characteristics of the portfolio returns. Here we are not so much concerned with risk, as measured by the second moment, the standard deviation. Instead, we now want to consider the third and fourth moments of the distribution, the skewness and kurtosis.

Comparing the two distributions below, we can see that the distribution on the left, with negative skew, has nonzero probability associated with events in the extreme left of the distribution, which in this context, we would associate with negative returns. The distribution on the right, with positive skew, is likewise “heavy-tailed”; but in this case the tail “risk” is associated with large, positive returns. That’s the kind of risk most investors can live with.

Source: Wikipedia

A more direct measure of tail risk is kurtosis, literally, “heavy tailed-ness”, indicating a propensity for extreme events to occur. Again, the shape of the distribution matters: a heavy tail in the right hand portion of the distribution is fine; a heavy tail on the left (indicating the likelihood of large, negative returns) is a no-no.
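Both moments are straightforward to estimate from a return series. The Python sketch below uses the simple population (biased) moment estimators; the function names are illustrative.

```python
def central_moment(x, order):
    """Population central moment of the given order."""
    mean = sum(x) / len(x)
    return sum((v - mean) ** order for v in x) / len(x)


def skewness(x):
    """Third standardized moment: negative values indicate a heavier left tail."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def excess_kurtosis(x):
    """Fourth standardized moment less 3, so a normal distribution scores zero."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2 - 3.0
```

Kurtosis on its own does not say which tail is heavy, so it is worth reading it alongside the sign of the skewness.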

Let’s take a look at the distribution of returns for the Strategic Volatility Strategy. As you can see, the distribution is very positively skewed, with a very heavy right hand tail. In other words, the strategy has a tendency to produce extremely positive returns. That’s the kind of tail risk investors prefer.

Another way to evaluate tail risk is to examine directly the performance of the strategy during extreme market conditions, when the market makes a major move up or down. Since we are using a volatility strategy as an example, let’s take a look at how it performs on days when the VIX index moves up or down by more than 5%. As you can see from the chart below, by and large the strategy returns on such days tend to be positive and, furthermore, occasionally the strategy produces exceptionally high returns.

The property of producing higher returns to the upside and lower losses to the downside (or, in this case, a tendency to produce positive returns in major market moves in either direction) is known as positive convexity.
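A simple way to run this kind of check on your own strategy is to split its daily returns according to the size of the VIX move on the same day. The sketch below is in Python; the function names are hypothetical and the 5% threshold follows the text.

```python
def returns_on_large_moves(strategy_returns, vix_changes, threshold=0.05):
    """Strategy returns on days when the VIX rose or fell by more than the threshold."""
    pairs = list(zip(strategy_returns, vix_changes))
    up = [s for s, v in pairs if v > threshold]
    down = [s for s, v in pairs if v < -threshold]
    return up, down


def summarize(returns):
    """Day count, mean return and share of positive days, or None if empty."""
    if not returns:
        return None
    return {
        "days": len(returns),
        "mean": sum(returns) / len(returns),
        "share_positive": sum(1 for r in returns if r > 0) / len(returns),
    }
```

A positively convex strategy should show a positive mean in both the up and the down bucket.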

Positive convexity, more typically found in fixed income portfolios, is a highly desirable feature, of course. How can it be achieved? Those familiar with options will recognize the convexity feature as being similar to the concept of option Gamma and indeed, one way to produce such a payoff is by adding options to the investment mix: put options to give positive convexity to the downside, call options to provide positive convexity to the upside (or using a combination of both, i.e. a straddle).

In this case we achieve positive convexity, not by incorporating options, but through a judicious choice of leveraged ETFs, both equity and volatility, for example, the ProShares UltraPro S&P500 ETF (NYSEArca:UPRO) and the ProShares Ultra VIX Short-Term Futures ETN (NYSEArca:UVXY).

Putting It All Together

While we have talked through the various concepts in creating a risk-protected portfolio one-at-a-time, in practice we use nonlinear optimization techniques to construct a portfolio that incorporates all of the desired characteristics simultaneously. This can be a lengthy and tedious procedure, involving lots of trial and error. And it cannot be emphasized enough how important the choice of the investment universe is from the outset. In this case, for instance, it would likely be pointless to target an overall positively convex portfolio without including one or more leveraged ETFs in the investment mix.

Let’s see how it turned out in the case of the Strategic Volatility Strategy.

Note that, while the portfolio Information Ratio is moderate (just above 3), the Sortino Ratio is consistently very high, averaging in excess of 7. In large part that is due to the exceptionally low downside risk, which at 1.36% is less than half the standard deviation (which is itself quite low at 3.3%). It is no surprise that the maximum drawdown over the period from 2012 amounts to less than 1%.

A critic might argue that a CAGR of only 10% is rather modest, especially since market conditions have generally been so benign. I would answer that criticism in two ways. Firstly, this is an investment that has the risk characteristics of a low-duration government bond; and yet it produces a yield many times that of a typical bond in the current low interest rate environment.

Secondly, I would point out that these results are based on use of standard 2:1 Reg-T leverage. In practice it is entirely feasible to increase the leverage up to 4:1, which would produce a CAGR of around 20%. Investors can choose where on the spectrum of risk-return they wish to locate the portfolio and the strategy leverage can be adjusted accordingly.

Conclusion

The current investment environment, characterized by low yields and growing downside risk, poses difficult challenges for investors. A way to address these concerns is to focus on metrics of downside risk in the construction of the investment portfolio, aiming for high Sortino Ratios, low correlation with market risk factors, and positive skewness and convexity in the portfolio returns process.

Such desirable characteristics can be achieved with modern portfolio construction techniques, provided the investment universe is chosen carefully; that universe need not include anything more exotic than a collection of commonplace ETF products.

Developing A Volatility Carry Strategy

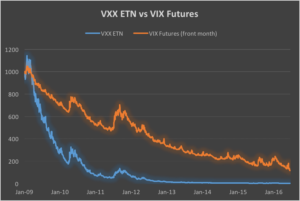

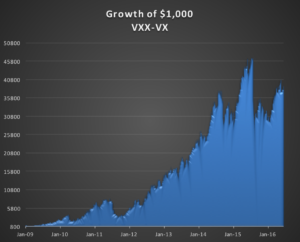

By way of introduction we begin by reviewing a well known characteristic of the iPath S&P 500 VIX ST Futures ETN (NYSEArca:VXX). In common with other long-volatility ETFs/ETNs, VXX has a tendency to decline in value due to the upward sloping shape of the forward volatility curve. The chart below illustrates the fall in value of the VXX, together with the front-month VIX futures contract, over the period from 2009.

This phenomenon gives rise to opportunities for “carry” strategies, wherein a long volatility product such as VXX is sold in expectation that it will decline in value over time. Such strategies work well during periods when volatility futures are in contango, i.e. when the longer dated futures contracts have higher prices than shorter dated futures contracts and the spot VIX Index, which is typically the case around 70% of the time. An analogous strategy in the fixed income world is known as “riding down the yield curve”. When yield curves are upward sloping, a fixed income investor can buy a higher-yielding bill or bond in the expectation that the yield will decline, and the price rise, as the security approaches maturity. Quantitative easing put paid to that widely utilized technique, but analogous strategies in currency and volatility markets continue to perform well.

The challenge for any carry strategy is what happens when the curve inverts, as futures move into backwardation, often giving rise to precipitous losses. A variety of hedging schemes have been devised that are designed to mitigate the risk. For example, one well-known carry strategy in VIX futures entails selling the front month contract and hedging with a short position in an appropriate number of E-Mini S&P 500 futures contracts. In this case the hedge is imperfect, leaving the investor the task of managing a significant basis risk.

The chart of the compounded value of the VXX and VIX futures contract suggests another approach. While both securities decline in value over time, the fall in the value of the VXX ETN is substantially greater than that of the front month futures contract. The basic idea, therefore, is a relative value trade, in which we purchase VIX futures, the better performing of the pair, while selling the underperforming VXX. Since the value of the VXX is determined by the value of the front two months VIX futures contracts, the hedge, while imperfect, is likely to entail less basis risk than is the case for the VIX-ES futures strategy.

Another way to think about the trade is this: by combining a short position in VXX with a long position in the front-month futures, we are in effect creating a residual exposure in the value of the second month VIX futures contract relative to the first. So this is a strategy in which we are looking to capture volatility carry, not at the front of the curve, but between the first and second month futures maturities. We are, in effect, riding down the belly of the volatility curve.
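The residual exposure can be made explicit with a little algebra. As a simplifying assumption, ignore fees and collateral interest and write the daily VXX return as a weighted average of the first and second month futures returns, with a weight $w_t$ between 0 and 1 on the front month. The symbols $w_t$, $h$, $r^{(1)}_t$ and $r^{(2)}_t$ are introduced here for illustration only.

$$
r^{VXX}_t \approx w_t \, r^{(1)}_t + (1 - w_t) \, r^{(2)}_t
$$

A portfolio that is short one unit of VXX and long $h$ units of the front-month contract then earns

$$
r^{P}_t = h \, r^{(1)}_t - r^{VXX}_t \approx (h - w_t) \, r^{(1)}_t - (1 - w_t) \, r^{(2)}_t ,
$$

and for $h = 1$ this reduces to

$$
r^{P}_t \approx (1 - w_t) \left( r^{(1)}_t - r^{(2)}_t \right).
$$

In other words, the portfolio profits when the second month contract falls by more than the first, which is the carry between the two maturities.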

The Relationship between VXX and VIX Futures

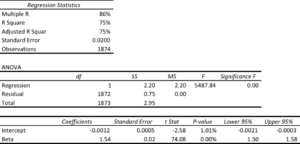

Let’s take a look at the relationship between the VXX and front month futures contract, which I will hereafter refer to simply as VX. A simple linear regression analysis of VXX against VX is summarized in the tables below, and confirms two features of their relationship.

Firstly, there is a strong, statistically significant relationship between the two (with an R-square of 75%) – indeed, given that the value of the VXX is in part determined by VX, how could there not be?

Secondly, the intercept of the regression is negative and statistically significant. We can therefore conclude that the underperformance of the VXX relative to the VX is not just a matter of optics, but is a statistically reliable phenomenon. So the basic idea of selling the VXX against VX is sound, at least in the statistical sense.
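A regression of this kind is easy to reproduce. The Python sketch below assumes that the regression is run on returns, with VX as the explanatory variable and VXX as the dependent variable; the function name is hypothetical.

```python
def simple_ols(x, y):
    """Regress y on x with an intercept.
    Returns (alpha, beta, r_squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    return alpha, beta, sxy * sxy / (sxx * syy)
```

A negative `alpha` from `simple_ols(vx_returns, vxx_returns)` corresponds to the negative intercept discussed above, although its statistical significance would still need to be checked with a t-test.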

Constructing the Initial Portfolio

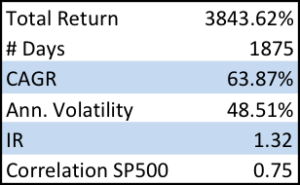

In constructing our theoretical portfolio, I am going to gloss over some important technical issues about how to construct the optimal hedge and simply assert that the best one can do is apply a beta of around 1.2, to produce the following outcome:

While broadly positive, with an information ratio of 1.32, the strategy performance is a little discouraging, on several levels. Firstly, the annual volatility, at over 48%, is uncomfortably high. Secondly, the strategy experiences very substantial drawdowns at times when the volatility curve inverts, such as in August 2015 and January 2016. Finally, the strategy is very highly correlated with the S&P 500 index, which may be an important consideration for investors looking for ways to diversify their stock portfolio risk.

Exploiting Calendar Effects

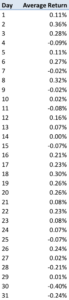

We will address these issues in short order. Firstly, however, I want to draw attention to an interesting calendar effect in the strategy (using a simple pivot table analysis).

As you can see from the table above, the strategy returns in the last few days of the calendar month tend to be significantly below zero.
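A pivot of this kind can be approximated in Python by grouping daily returns according to the number of trading days left in the month. The grouping key is an assumption, since the article does not say how its table was built, and the sample data are purely hypothetical. The function expects dates in ascending order.

```python
from collections import defaultdict
from datetime import date


def mean_return_by_days_to_month_end(dates, returns):
    """Average return keyed by trading days remaining in the month (0 = last day)."""
    by_month = defaultdict(list)
    for d, r in zip(dates, returns):
        by_month[(d.year, d.month)].append(r)
    buckets = defaultdict(list)
    for month_returns in by_month.values():
        n = len(month_returns)
        for i, r in enumerate(month_returns):
            buckets[n - 1 - i].append(r)
    return {k: sum(v) / len(v) for k, v in sorted(buckets.items())}


# Hypothetical sample: the last three trading days of two months.
sample_dates = [
    date(2016, 1, 27), date(2016, 1, 28), date(2016, 1, 29),
    date(2016, 2, 25), date(2016, 2, 26), date(2016, 2, 29),
]
sample_returns = [0.01, 0.00, -0.02, 0.02, 0.01, -0.04]
sample_table = mean_return_by_days_to_month_end(sample_dates, sample_returns)
```

Persistently negative averages in the buckets closest to zero would correspond to the month-end effect described here.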

The cause of the phenomenon has to do with the way the VXX is constructed, but the important point here is that, in principle, we can utilize this effect to our advantage, by reversing the portfolio holdings around the end of the month. This simple technique produces a significant improvement in strategy returns, while lowering the correlation:

Reducing Portfolio Risk and Correlation

We can now address the issue of the residual high level of strategy volatility, while simultaneously reducing the strategy correlation to a much lower level. We can do this in a straightforward way by adding a third asset, the SPDR S&P 500 ETF Trust (NYSEArca:SPY), in which we will hold a short position: a short SPY holding is negatively correlated with the original portfolio and so offsets its market exposure.

We then adjust the portfolio weights to maximize the risk-adjusted returns, subject to limits on the maximum portfolio volatility and correlation. For example, setting a limit of 10% for both volatility and correlation, we achieve the following result (with weights of -0.37, 0.27 and -0.65 for VXX, VX and SPY respectively):

Compared to the original portfolio, the new portfolio’s performance is much more benign during the critical period from Q2-2015 to Q1-2016. While several significant drawdown periods remain, notably in 2011, the strategy is now approaching an investable proposition, with an information ratio of 1.6, annual volatility of 9.96% and a correlation of 0.1 with the S&P 500.

Other configurations are possible, of course, and the risk-adjusted performance can be improved, depending on the investor’s risk preferences.
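The constrained weighting step can be illustrated with a minimal sketch that evaluates one candidate set of weights against the volatility and correlation limits. The function names are hypothetical, the correlation limit is applied to the absolute value (an assumption), and the return series are synthetic. An optimizer would search over weights for the feasible candidate with the highest information ratio.

```python
import math
import random
import statistics

def portfolio_returns(weights, asset_returns):
    """asset_returns is a list of per-period tuples aligned with weights."""
    return [sum(w * r for w, r in zip(weights, row)) for row in asset_returns]

def pearson(a, b):
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def evaluate(weights, asset_returns, benchmark,
             max_vol=0.10, max_corr=0.10, periods=252):
    """Score a weight vector and check it against the two limits."""
    p = portfolio_returns(weights, asset_returns)
    ann_vol = statistics.stdev(p) * math.sqrt(periods)
    ann_ret = statistics.fmean(p) * periods
    corr = pearson(p, benchmark)
    feasible = ann_vol <= max_vol and abs(corr) <= max_corr
    return {"ir": ann_ret / ann_vol, "vol": ann_vol,
            "corr": corr, "feasible": feasible}

# Synthetic daily returns for (VXX, VX, SPY); illustrative values only
random.seed(2)
spy = [random.gauss(0.0004, 0.01) for _ in range(1000)]
vx = [-4.0 * s + random.gauss(0.0, 0.02) for s in spy]
vxx = [-0.002 + 0.9 * v + random.gauss(0.0, 0.01) for v in vx]
rows = list(zip(vxx, vx, spy))

# Weights quoted in the text for VXX, VX and SPY
print(evaluate((-0.37, 0.27, -0.65), rows, spy))
```

Because the data here are synthetic, the printed statistics will not match the figures reported in the article.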

Portfolio Rebalancing

There is an element of curve-fitting in the research process as described so far, inasmuch as we are using all of the available data to July 2016 to construct a portfolio with the desired characteristics. In practice, of course, we will be required to rebalance the portfolio on a periodic basis, re-estimating the optimal portfolio weights as new data comes in. By way of illustration, the portfolio was re-estimated using in-sample data to the end of February 2016, producing out-of-sample results during the period from March to July 2016, as follows:

A detailed examination of the generic problem of how frequently to rebalance the portfolio is beyond the scope of this article and I leave it to interested analysts to perform the research for themselves.

Practical Considerations

Implementing the theoretical strategy described above requires attention to several important practical considerations.

- It is not immediately apparent how the weights should be applied to a portfolio comprising both ETNs and futures. In practice the best approach is to re-estimate the portfolio using a regression relationship expressed in $-value terms, rather than in percentages, in order to establish the quantity of VXX and SPY stock to be sold per single VX futures contract.

- Reversing the portfolio holdings in the last few days of the month will add significantly to transaction costs, especially for the position in VX futures, for which the minimum tick of 0.05 index points is worth $50 per contract. It is important to factor realistic estimates of transaction costs into the assessment of the strategy performance overall and specifically with respect to month-end reversals.

- The strategy assumes the availability of VXX and SPY to short, which occasionally can be a problem. It’s not such a big deal if you are maintaining a long-term short position, but flipping the position around over a few days at the end of the month might be problematic, from time to time.

- Also, we should take account of stock loan financing costs, which run to around 2.9% and 0.42% annually for VXX and SPY, respectively. These rates can vary with market conditions and stock availability, of course.

- It is highly likely that other ETFs/ETNs could profitably be added to the mix in order to further reduce strategy volatility and improve risk-adjusted returns. Likely candidates could include, for example, the Direxion Daily 20+ Yr Trsy Bull 3X ETF (NYSEArca:TMF).

- We have already mentioned the important issue of portfolio rebalancing. There is an argument for rebalancing more frequently to take advantage of the latest market data; on the other hand, too-frequent changes in the portfolio composition can undermine portfolio robustness, increase volatility and incur higher transaction costs. Determining the optimal rebalancing frequency requires further testing.
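To give a rough sense of scale for the cost items listed above, the following sketch works through the arithmetic. It assumes the weights quoted earlier are fractions of portfolio capital and that short positions are held all year, ignoring the month-end reversal days. It also assumes, hypothetically, one tick of slippage per VX contract traded, with each month-end involving two flips (reverse, then restore), each trading twice the held position.

```python
def annual_borrow_cost(weights, borrow_rates):
    """Annual stock loan cost as a fraction of capital, short positions only."""
    return sum(-w * borrow_rates.get(name, 0.0)
               for name, w in weights.items() if w < 0)

def annual_reversal_cost(contracts, tick_value=50.0,
                         ticks_per_trade=1.0, months=12):
    """Dollar slippage per year on VX futures from month-end reversals.

    Assumption: two flips per month, each trading 2x the held contracts,
    with ticks_per_trade ticks of slippage on every contract traded.
    """
    contracts_traded = months * 2 * 2 * contracts
    return contracts_traded * ticks_per_trade * tick_value

# Weights and loan rates as quoted in the text
weights = {"VXX": -0.37, "VX": 0.27, "SPY": -0.65}
borrow_rates = {"VXX": 0.029, "SPY": 0.0042}

borrow = annual_borrow_cost(weights, borrow_rates)
slippage = annual_reversal_cost(contracts=1)
print(f"stock loan drag: {borrow:.2%} of capital per year")
print(f"reversal slippage: ${slippage:,.0f} per VX contract per year")
```

On these assumptions the stock loan drag is about 1.35% of capital per year, and month-end reversals cost about $2,400 per year for each VX contract held. Commissions and slippage on the VXX and SPY legs would add to this.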

Conclusion

We have described the process of constructing a volatility carry strategy based on the relative value of the VXX ETN vs the front-month contract in VIX futures. By combining a portfolio comprising short positions in VXX and SPY with a long position in VIX futures, the investor can, in principle, achieve risk-adjusted returns corresponding to an information ratio of around 1.6, or more. It is likely that further improvements in portfolio performance can be achieved by adding other ETFs to the portfolio mix.