Quant Strategies – Performance Summary Sept. 2018

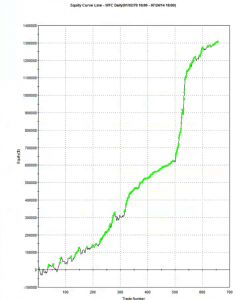

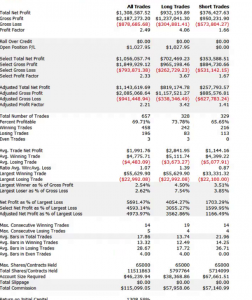

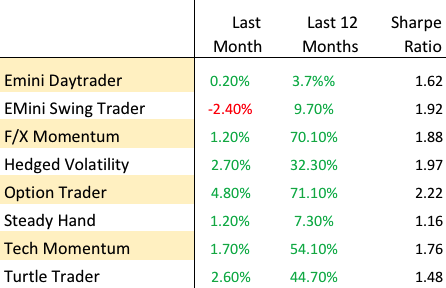

The end of Q3 seems like an appropriate time for an across-the-piste review of how systematic strategies are performing in 2018. I’m using the dozen or more strategies running on the Systematic Algotrading Platform as the basis for the performance review, although results will obviously vary according to the specifics of the strategy. All of the strategies are traded live and performance results are net of subscription fees, as well as slippage and brokerage commissions.

Volatility Strategies

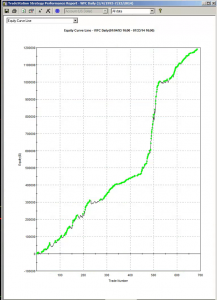

Those waiting for the hammer to fall on option premium collecting strategies will have been disappointed with the way things have turned out so far in 2018. Yes, February saw a long-awaited and rather spectacular explosion in volatility which completely destroyed several major volatility funds, including the VelocityShares Daily Inverse VIX Short-Term ETN (XIV) as well as Chicago-based hedge fund LJM Partners (“our goal is to preserve as much capital as possible”), which got caught on the wrong side of the popular VIX carry trade. But the lack of follow-through has given many volatility strategies time to recover. Indeed, some are positively thriving now that elevated levels in the VIX have finally lifted option premiums from the bargain basement levels they were languishing at prior to February’s carnage. The Option Trader strategy is a stand-out in this regard: not only did the strategy produce exceptional returns during the February meltdown (+27.1%), it has continued to outperform as the year has progressed, and YTD returns now total a little over 69%. Nor is the strategy itself exceptionally volatile: the Sharpe Ratio has remained consistently above 2 over several years.

Hedged Volatility Trading

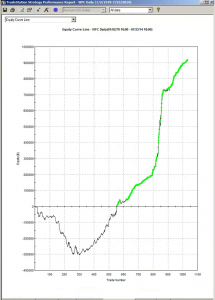

Investors’ chief concern with strategies that rely on collecting option premiums is that eventually they may blow up. For those looking for a more nuanced approach to managing tail risk, the Hedged Volatility strategy may be the way to go. Like many strategies in the volatility space, the strategy looks to generate alpha by trading VIX ETF products; but unlike the great majority of competitor offerings, this strategy also uses ETF options to hedge tail risk exposure. While hedging costs certainly act as a performance drag, the results over the last few years have been compelling: a CAGR of 52% with a Sharpe Ratio close to 2.
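For readers less familiar with the two headline metrics quoted throughout this review, here is a minimal sketch of how CAGR and an annualized Sharpe Ratio are commonly computed from a series of periodic returns. The function names, the monthly sampling frequency and the zero risk-free rate are assumptions made for illustration; this is not necessarily how the platform calculates its published figures.

```python
import math
import statistics


def cagr(returns, periods_per_year=12):
    """Compound annual growth rate from periodic simple returns.

    `returns` holds one simple return per period (0.02 means +2%).
    Monthly periods are an assumption; pass periods_per_year=1 for annual data.
    """
    growth = math.prod(1.0 + r for r in returns)  # total growth multiple
    years = len(returns) / periods_per_year
    return growth ** (1.0 / years) - 1.0


def sharpe_ratio(returns, risk_free_per_period=0.0, periods_per_year=12):
    """Annualized Sharpe Ratio: mean excess return over its sample standard
    deviation, scaled by the square root of the number of periods per year.

    The zero risk-free rate default is an illustrative assumption.
    """
    excess = [r - risk_free_per_period for r in returns]
    return (statistics.mean(excess) / statistics.stdev(excess)
            * math.sqrt(periods_per_year))


# Hypothetical monthly returns, invented purely for illustration.
monthly = [0.02, -0.01, 0.03, 0.01, 0.04, -0.02,
           0.02, 0.03, 0.00, 0.01, 0.02, 0.01]

print(f"CAGR:   {cagr(monthly):.1%}")
print(f"Sharpe: {sharpe_ratio(monthly):.2f}")
```

Note that the square-root-of-time scaling assumes returns are roughly independent from one period to the next, which is a simplification for many volatility strategies.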

F/X Strategies

One of the common concerns for investors is how to diversify their investment portfolios, especially since the great majority of assets (and strategies) tend to exhibit significant positive correlation to equity indices these days. One of the characteristics we most appreciate about F/X strategies in general, and the F/X Momentum strategy in particular, is that their correlation to the equity markets over the last several years has been negligible. Other attractive features of the strategy include the exceptionally high win rate – over 90% – and the profit factor of 5.4, which makes life very comfortable for investors. After a moderate performance in 2017, the strategy has rebounded this year and is up 56% YTD, with a CAGR of 64.5% and Sharpe Ratio of 1.89.
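To make the win rate and profit factor concrete, the sketch below computes both from a list of per-trade profits and losses, using the usual definitions: win rate is the fraction of trades that are profitable, and profit factor is gross profit divided by gross loss. The trade values are hypothetical, chosen only so that the output lands near the figures quoted above; they are not actual trades from the strategy.

```python
def win_rate(pnls):
    """Fraction of trades with a strictly positive profit."""
    wins = sum(1 for p in pnls if p > 0)
    return wins / len(pnls)


def profit_factor(pnls):
    """Gross profit divided by gross loss (both taken as positive numbers).

    Returns infinity when there are no losing trades.
    """
    gross_profit = sum(p for p in pnls if p > 0)
    gross_loss = -sum(p for p in pnls if p < 0)
    if gross_loss == 0:
        return float("inf")
    return gross_profit / gross_loss


# Hypothetical per-trade P&L in dollars: nine small winners, one larger loser.
trades = [120.0, 80.0, 95.0, 110.0, 60.0, 75.0, 130.0, 90.0, 50.0, -150.0]

print(f"Win rate:      {win_rate(trades):.0%}")
print(f"Profit factor: {profit_factor(trades):.1f}")
```

The example also shows why the two numbers should be read together: a 90% win rate on its own says nothing about the size of the occasional loss, whereas the profit factor captures it.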

Equity Long/Short

Thanks to the Fed’s accommodative stance, equity markets have been generally benign over the last decade, to the benefit of most equity long-only and long-short strategies, including our equity long/short Turtle Trader strategy, which is up 31% YTD. This follows a spectacular 2017 (+66%), and is in line with the 5-year CAGR of 39%. Notably, the correlation with the benchmark S&P 500 Index is relatively low (0.16), while the Sharpe Ratio is a respectable 1.47.
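The correlation figures quoted in this review are typically Pearson correlations between a strategy's periodic returns and those of the benchmark over the same dates. A minimal sketch follows, assuming two equal-length return series; the function name and the sample data are hypothetical and for illustration only.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical monthly returns, invented for illustration.
strategy = [0.04, 0.01, 0.03, -0.02, 0.05, 0.02]
benchmark = [0.01, -0.03, 0.02, 0.01, -0.01, 0.03]

print(f"Correlation: {pearson_correlation(strategy, benchmark):.2f}")
```

A value near zero indicates that the strategy's returns have moved largely independently of the benchmark over the sample, which is the property that makes a strategy useful as a diversifier.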

Equity ETFs – Market Timing/Swing Trading

One alternative to the traditional equity long/short products is the Tech Momentum strategy. This is a swing trading strategy that exploits short-term momentum signals to trade the ProShares UltraPro QQQ (TQQQ) and ProShares UltraPro Short QQQ (SQQQ) leveraged ETFs. The strategy is enjoying a banner year, up 57% YTD, with a four-year CAGR of 47.7% and Sharpe Ratio of 1.77. A standout feature of this equity strategy is its almost zero correlation with the S&P 500 Index. It is worth noting that this strategy also performed very well during the market decline in February, recording a gain of over 11% for the month.

Futures Strategies

It’s a little early to assess the performance of the various futures strategies in the Systematic Strategies portfolio, which were launched on the platform only a few months ago (despite being traded live for far longer). For what it is worth, both of the S&P 500 E-Mini strategies, the Daytrader and the Swing Trader, are now firmly in positive territory for 2018. Obviously we are keeping a watchful eye to see if the performance going forward remains in line with past results, but our experience of trading these strategies gives us cause for optimism.

Conclusion: Quant Strategies in 2018

There appear to be ample opportunities for investors in the quant sector across a wide range of asset classes. For investors with equity market exposure, we particularly like strategies with low market correlation that offer significant diversification benefits, such as the F/X Momentum strategy. For those investors seeking the highest risk-adjusted return, option selling strategies like the Option Trader strategy are the best choice, while for more cautious investors concerned about tail risk the Hedged Volatility strategy offers the security of downside protection. Finally, there are several new strategies in equities and futures coming down the pike, several of which are already showing considerable promise. We will review the performance of these newer strategies at the end of the year.

Go here for more information about the Systematic Algotrading Platform.