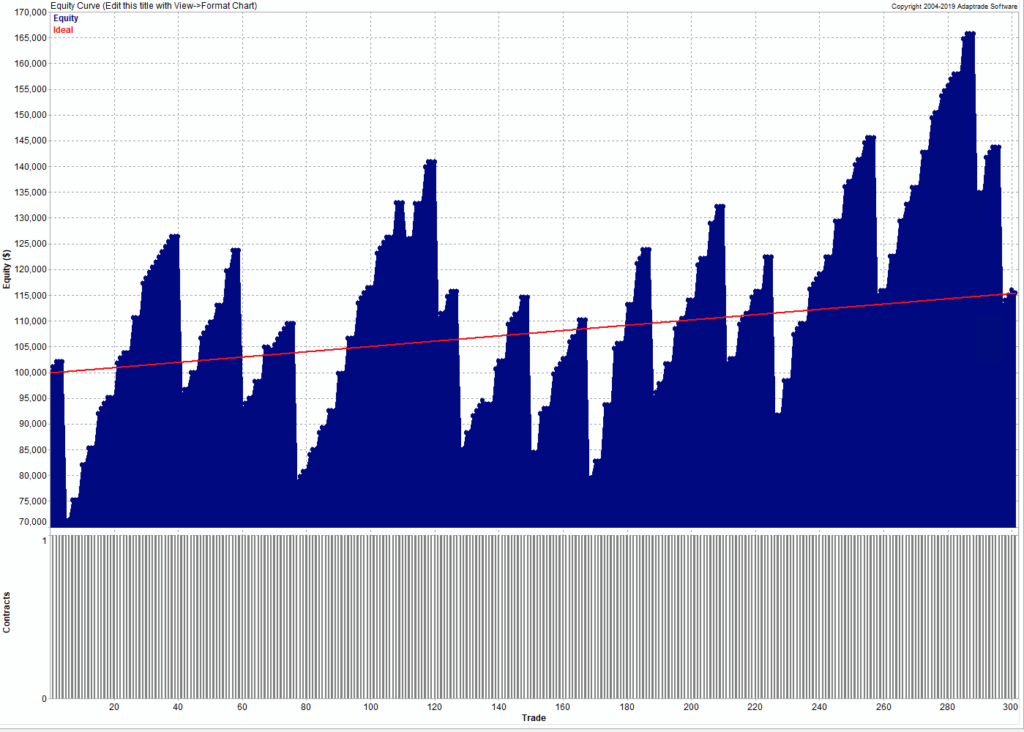

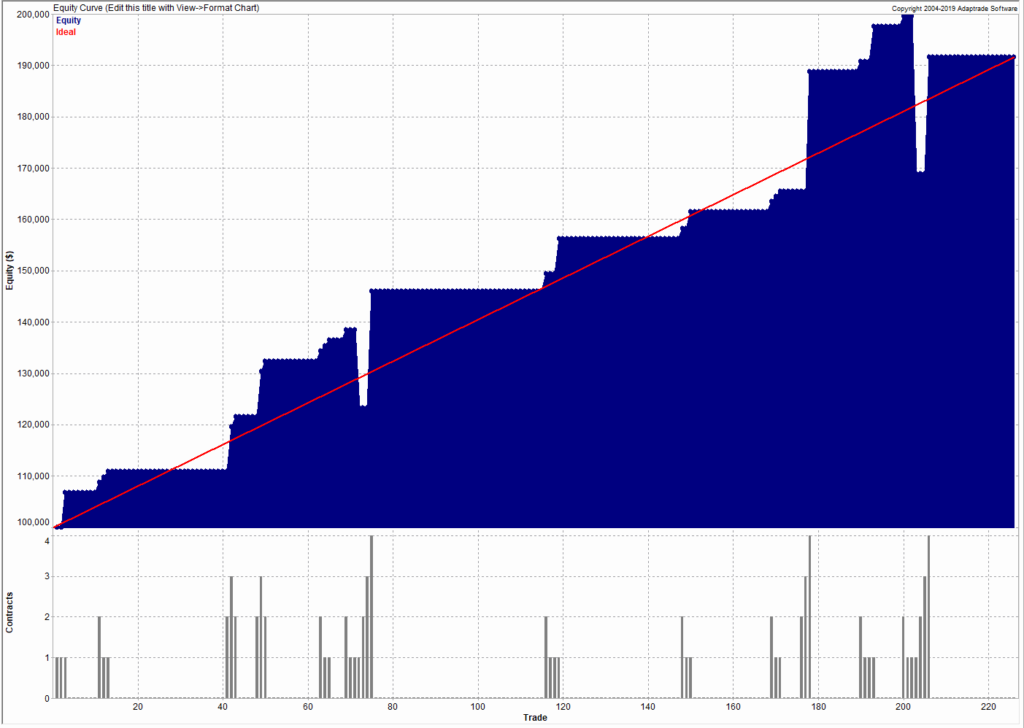

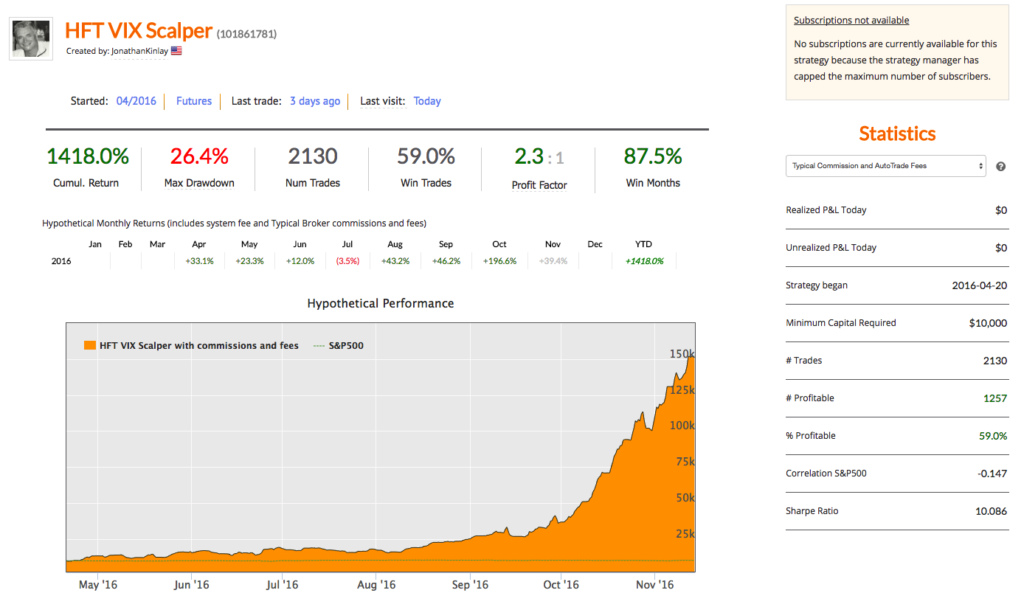

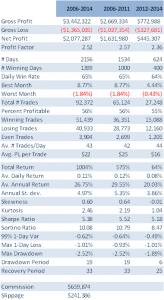

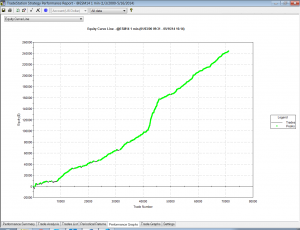

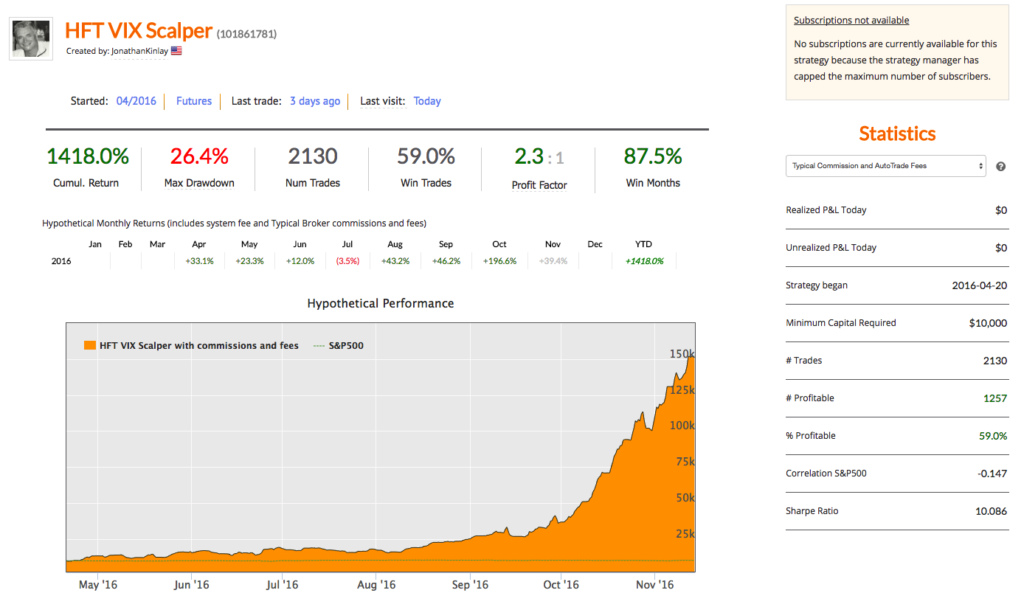

HFT scalping strategies enjoy several highly desirable characteristics, compared to low frequency strategies. A case in point is our scalping strategy in VIX futures, currently running on the Collective2 web site:

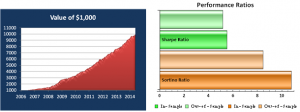

- The strategy is highly profitable, with a Sharpe Ratio in excess of 9 (net of transaction costs of $14 per round turn)

- Performance is consistent and reliable, being based on a large number of trades (10-20 per day)

- The strategy has low or negative correlation to the underlying equity and volatility indices

- There is no overnight risk

Background on HFT Scalping Strategies

The attractiveness of such strategies is undeniable. So how does one go about developing them?

It is important for the reader to familiarize himself with some of the background to high frequency trading in general and scalping strategies in particular. Specifically, I would recommend reading the following blog posts:

http://jonathankinlay.com/2015/05/high-frequency-trading-strategies/

http://jonathankinlay.com/2014/05/the-mathematics-of-scalping/

Execution vs Alpha Generation in HFT Strategies

The key to understanding HFT strategies is that execution is everything. With low frequency strategies a great deal of work goes into researching sources of alpha, often using highly sophisticated mathematical and statistical techniques to identify and separate the alpha signal from the background noise. Strategy alpha accounts for perhaps as much as 80% of the total return in a low frequency strategy, with execution making up the remaining 20%. It is not that execution is unimportant, but there are only so many basis points one can earn (or save) in a strategy with monthly turnover. By contrast, a high frequency strategy is highly dependent on trade execution, which may account for 80% or more of the total return. The algorithms that generate the strategy alpha are often very simple and may provide only the smallest of edges. However, that very small edge, scaled up over thousands of trades, is sufficient to produce a significant return. And since the risk is spread over a large number of very small time increments, the rate of return can become eye-wateringly high on a risk-adjusted basis: Sharpe Ratios of 10, or more, are commonly achieved with HFT strategies.

In many cases an HFT algorithm seeks to estimate the conditional probability of an uptick or downtick in the underlying, leaning on the bid or offer price accordingly. Provided orders can be positioned towards the front of the queue to ensure an adequate fill rate, the laws of probability will do the rest. So, in the HFT context, much effort is expended on mitigating latency and on developing techniques for establishing and maintaining priority in the limit order book. Another major concern is to monitor order book dynamics for signs that book pressure may be moving against any open orders, so that they can be cancelled in good time, avoiding adverse selection by informed traders, or a buildup of unwanted inventory.

In a high frequency scalping strategy one is typically looking to capture an average of between 1/2 and 1 tick per trade. For example, the VIX scalping strategy illustrated here averages around $23 per contract per trade, i.e. just under 1/2 a tick in the futures contract. Trade entry and exit are effected using limit orders, since there is no room to accommodate slippage in a trading system that generates less than a single tick per trade, on average. As with most HFT strategies the alpha algorithms are only moderately sophisticated, and the strategy is highly dependent on achieving an acceptable fill rate (the proportion of limit orders that are executed). The importance of achieving a high enough fill rate is clearly illustrated in the first of the two posts referenced above. So what is an acceptable fill rate for an HFT strategy?

Fill Rates

I’m going to address the issue of fill rates by focusing on a critical subset of the problem: fills that occur at the extreme of the bar, also known as “extreme hits”. These are limit orders whose prices coincide with the highest (in the case of a sell order) or lowest (in the case of a buy order) trade price in any bar of the price series. Limit orders at prices within the interior of the bar are necessarily filled and are therefore uncontroversial. But limit orders at the extremities of the bar may or may not be filled and it is therefore these orders that are the focus of attention.
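The definition of an extreme hit can be made concrete with a short sketch. The `Fill` record and both function names are hypothetical, chosen for illustration only; the logic simply checks whether a buy filled at the bar low or a sell filled at the bar high.

```python
from dataclasses import dataclass


@dataclass
class Fill:
    """One simulated limit-order fill plus the range of the bar it occurred in."""
    side: str        # "buy" or "sell"
    price: float     # limit price at which the fill was reported
    bar_high: float  # highest trade price in the bar
    bar_low: float   # lowest trade price in the bar


def is_extreme_hit(fill: Fill, tol: float = 1e-9) -> bool:
    """True when a buy fills at the bar low or a sell fills at the bar high."""
    if fill.side == "buy":
        return abs(fill.price - fill.bar_low) <= tol
    if fill.side == "sell":
        return abs(fill.price - fill.bar_high) <= tol
    raise ValueError(f"unknown side: {fill.side!r}")


def extreme_hit_rate(fills) -> float:
    """Fraction of fills that are extreme hits (0.0 for an empty record)."""
    fills = list(fills)
    if not fills:
        return 0.0
    return sum(is_extreme_hit(f) for f in fills) / len(fills)
```

Running `extreme_hit_rate` over a backtest's trade list gives the proportion of fills whose outcome depends on queue position rather than on the price simply trading through the order.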

By default, most retail platform backtest simulators assume that all limit orders, including extreme hits, are filled if the underlying trades there. In other words, these systems typically assume a 100% fill rate on extreme hits. This is highly unrealistic: in many cases the high or low of a bar forms a turning point that the price series visits only fleetingly before reversing its recent trend, and does not revisit for a considerable time. The first few orders at the front of the queue will be filled, but many, perhaps the majority of, orders further down the priority order will be disappointed. If the trader is using a retail trading system rather than an HFT platform to execute his trades, his limit orders are almost guaranteed to rest towards the back of the queue, due to the relatively high latency of his system. As a result, a great many of his limit orders – in particular, the extreme hits – will not be filled.

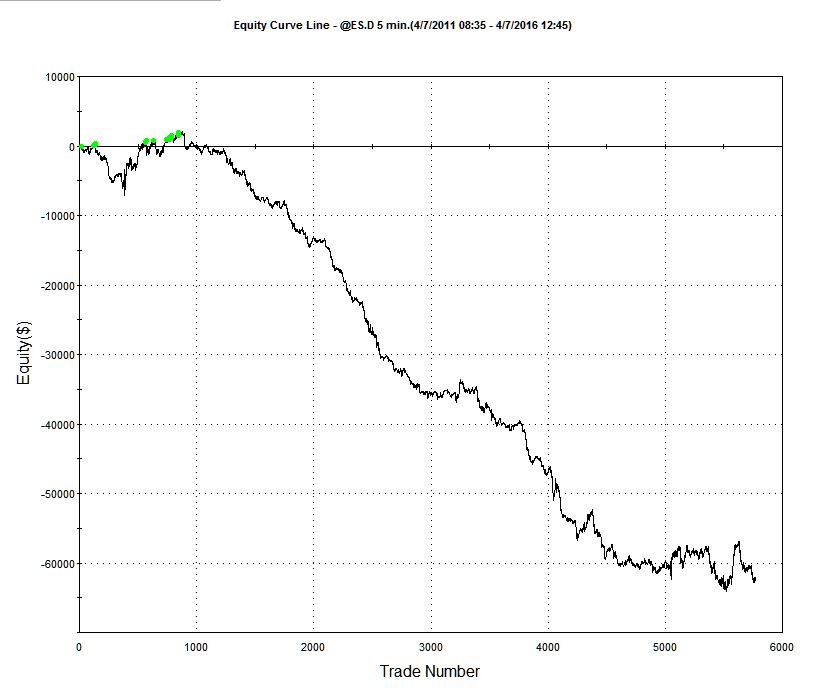

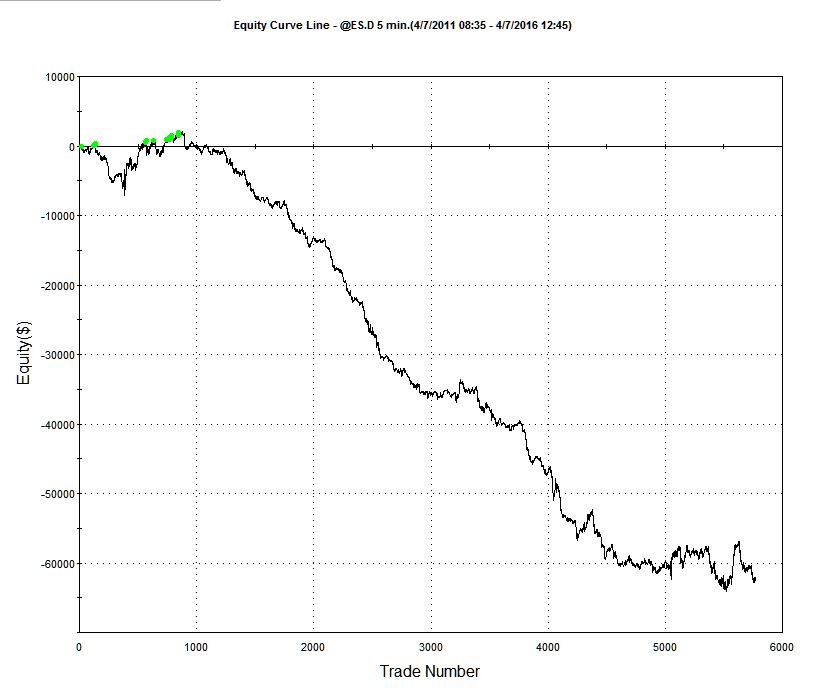

The consequences of missing a large number of trades due to unfilled limit orders are likely to be catastrophic for any HFT strategy. A simple test that is readily available in most backtest systems is to change the underlying assumption with regard to the fill rate on extreme hits: instead of assuming that 100% of such orders are filled, the system tests the outcome if limit orders are filled only when the price series subsequently trades through the limit price. The outcome produced under this alternative scenario is typically extremely adverse, as illustrated in the first blog post referenced previously.
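The two fill assumptions differ only in whether a touch of the limit price counts as a fill. The sketch below is not any particular platform's API; `limit_filled` and its `require_trade_through` flag are hypothetical names for the two rules.

```python
def limit_filled(side: str, limit: float, bar_low: float, bar_high: float,
                 require_trade_through: bool = False) -> bool:
    """Decide whether a resting limit order is treated as filled within a bar.

    require_trade_through=False: optimistic rule, a touch of the limit fills it.
    require_trade_through=True:  pessimistic rule, the price must trade beyond it.
    """
    if side == "buy":
        return bar_low < limit if require_trade_through else bar_low <= limit
    if side == "sell":
        return bar_high > limit if require_trade_through else bar_high >= limit
    raise ValueError(f"unknown side: {side!r}")
```

Under the pessimistic rule every extreme hit is treated as unfilled, which corresponds to the 0% fill-rate scenario; orders priced inside the bar are filled under both rules.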

In reality, of course, neither assumption is reasonable: it is unlikely that either 100% or 0% of a strategy’s extreme hits will be filled – the actual fill rate will likely lie somewhere between these two outcomes. And this is the critical issue: at some level of fill rate the strategy will move from profitability into unprofitability. The key to implementing an HFT scalping strategy successfully is to ensure that the execution falls on the right side of that dividing line.
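A crude way to estimate where that dividing line sits is to interpolate between the two backtest scenarios. The sketch below assumes that net profit varies linearly with the fill rate on extreme hits. That is a simplifying assumption for illustration, not a property of any real strategy, and the function name and inputs are hypothetical.

```python
def breakeven_fill_rate(pnl_all_filled: float, pnl_none_filled: float):
    """Extreme-hit fill rate at which net P&L crosses zero.

    Assumes P&L is linear in the fill rate f between the two scenarios:
        pnl(f) = pnl_none_filled + f * (pnl_all_filled - pnl_none_filled)
    Returns None if the strategy loses money even with every extreme hit filled.
    """
    if pnl_all_filled <= 0:
        return None   # unprofitable even at a 100% fill rate
    if pnl_none_filled >= 0:
        return 0.0    # profitable even if no extreme hit is filled
    return -pnl_none_filled / (pnl_all_filled - pnl_none_filled)
```

For example, with a purely hypothetical profit of 100,000 when all extreme hits fill and a loss of 50,000 when none do, the function returns one third: under the linearity assumption, at least a third of the extreme hits must fill for the strategy to break even.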

Implementing HFT Scalping Strategies in Practice

One solution to the fill rate problem is to spend millions of dollars building HFT infrastructure. But for the purposes of this post let’s assume that the trader is confined to using a retail trading platform like Tradestation or Interactive Brokers. Are HFT scalping systems still feasible in such an environment? The answer, surprisingly, is a qualified yes – by using a technique that took me many years to discover.

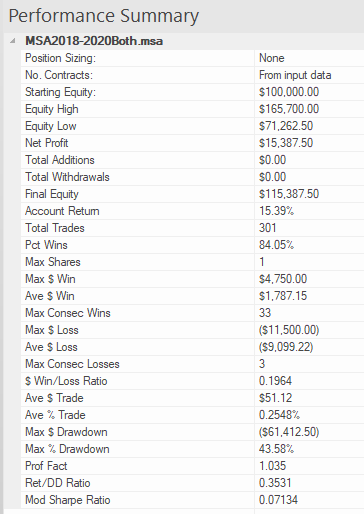

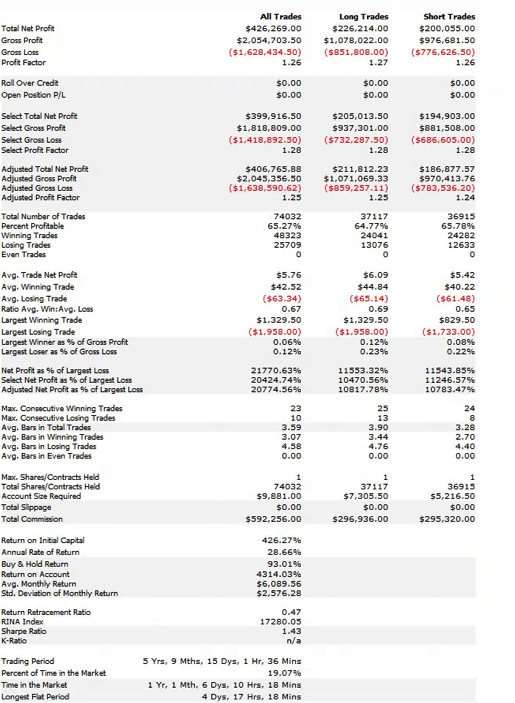

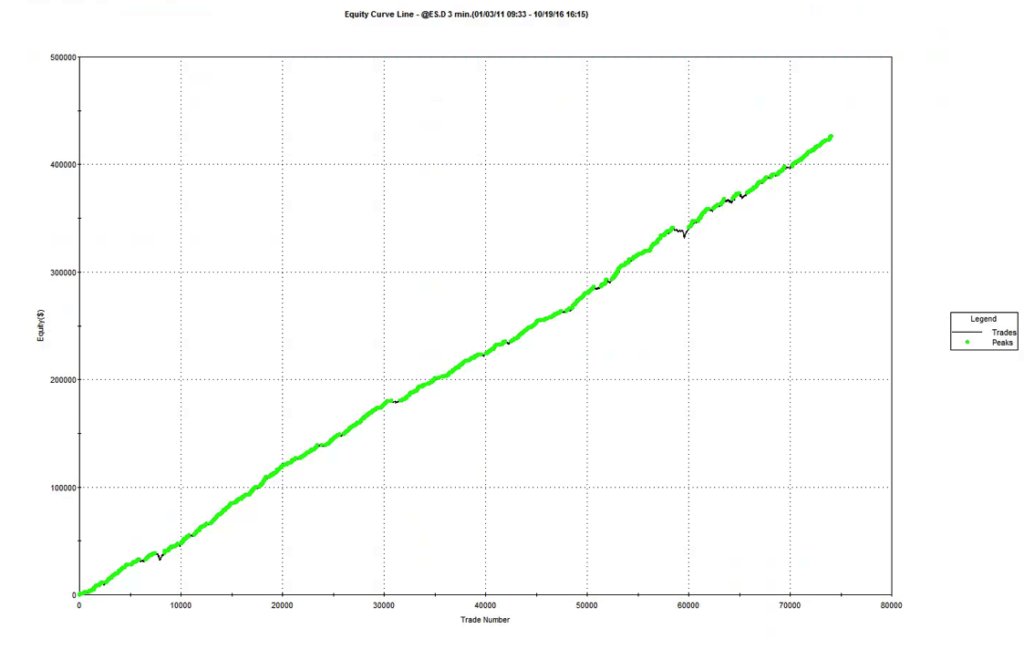

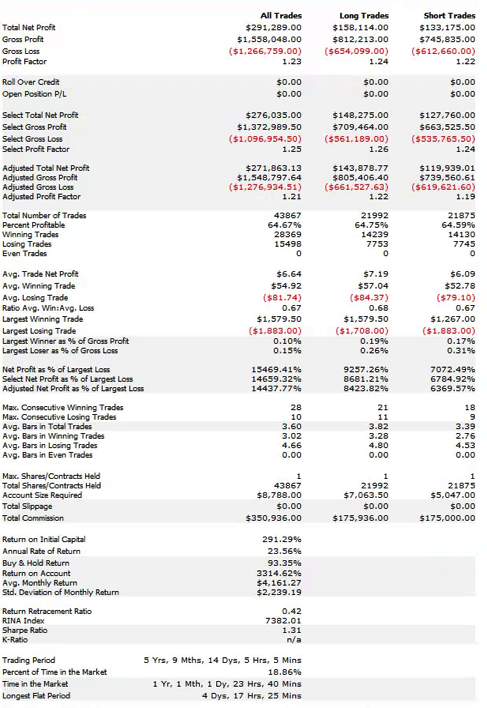

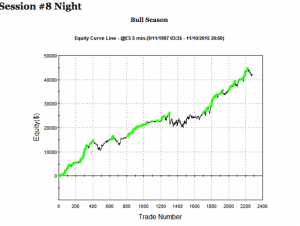

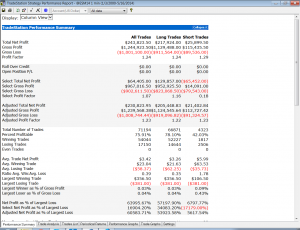

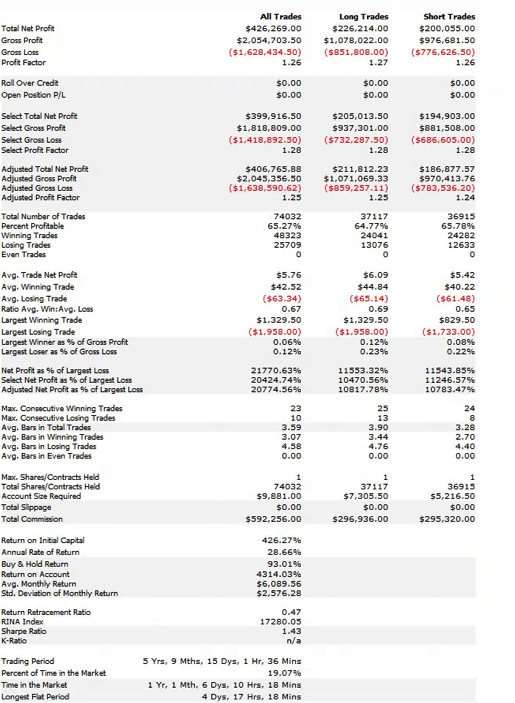

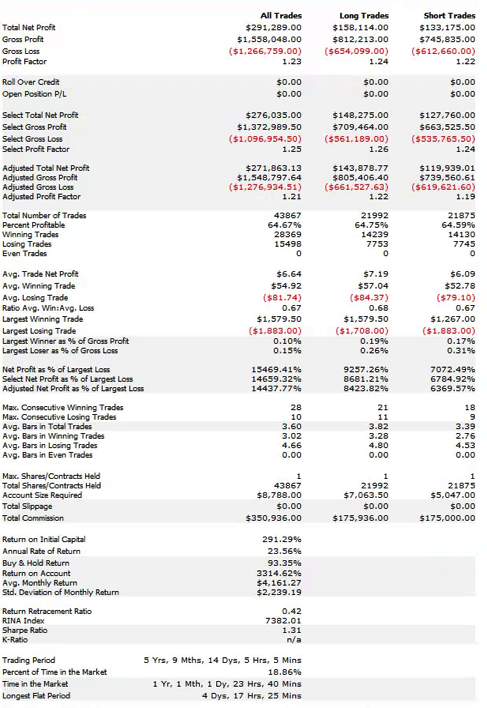

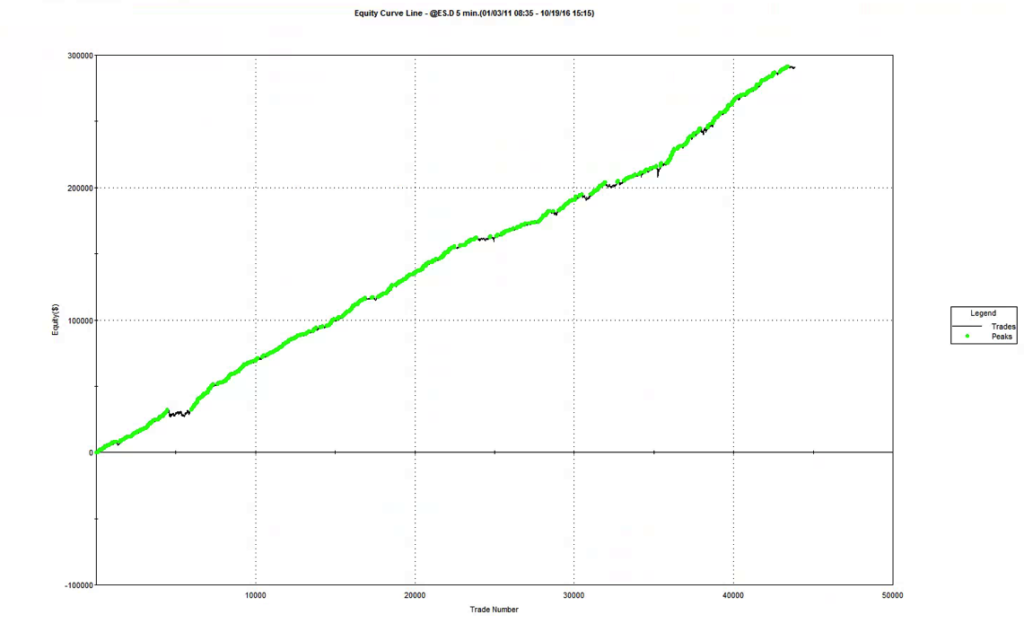

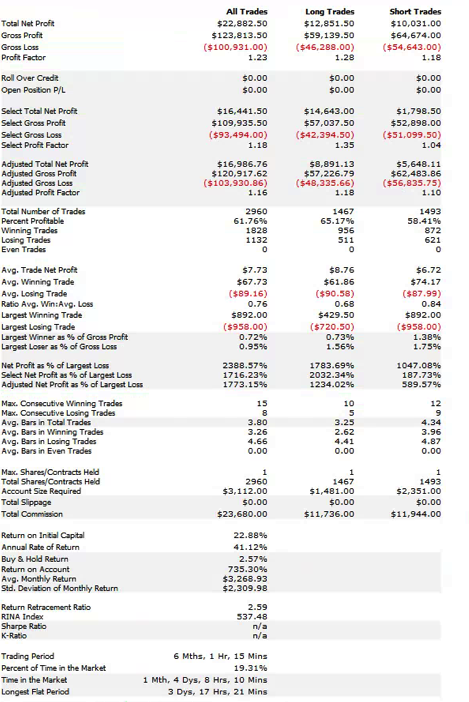

To illustrate the method I will use the following HFT scalping system in the E-Mini S&P500 futures contract. The system trades the E-Mini futures on 3-minute bars, with an average hold time of 15 minutes. The average trade is very low – around $6, net of commissions of $8 per round turn. But the strategy appears to be highly profitable, due to the large number of trades – around 50 to 60 per day, on average.

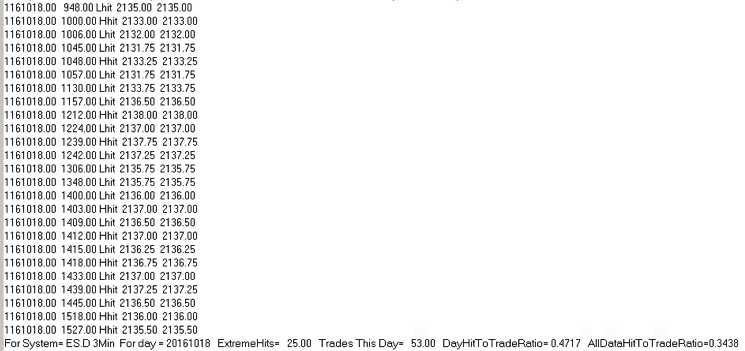

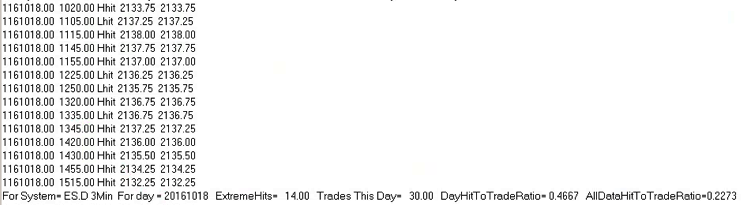

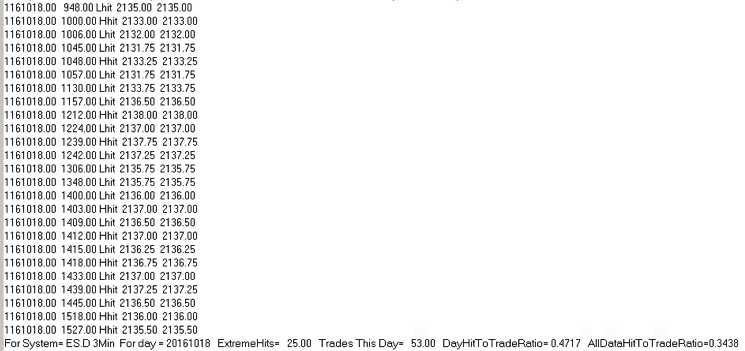

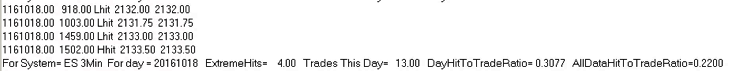

So far so good. But the critical issue is the very large number of extreme hits produced by the strategy. Take the trading activity on 10/18 as an example (see below). Of 53 trades that day, 25 (47%) were extreme hits, occurring at the high or low price of the 3-minute bar in which the trade took place.

Overall, the strategy’s extreme hit rate runs at 34%, which is extremely high. In reality, perhaps only 1/4 or 1/3 of these orders will actually execute – meaning that the remainder, amounting to roughly 23% to 26% of the total number of orders, will fail. An HFT scalping strategy cannot hope to survive such an outcome. Strategy profitability will be decimated by a combination of missed, profitable trades and losses on trades that escalate after an exit order fails to execute.
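The arithmetic behind the failed-order estimate can be checked directly. The helper name is hypothetical; the inputs are the 34% extreme hit rate and the 1/4 to 1/3 execution range quoted above.

```python
def failed_order_fraction(extreme_hit_rate: float, executed_fraction: float) -> float:
    """Share of ALL orders that fail, given the share of extreme hits that execute."""
    return extreme_hit_rate * (1.0 - executed_fraction)


# 34% of orders are extreme hits; between 1/4 and 1/3 of those actually execute.
for executed in (1 / 4, 1 / 3):
    share = failed_order_fraction(0.34, executed)
    print(f"executed {executed:.2f} of extreme hits -> {share:.1%} of all orders fail")
```

This assumes that every order priced inside the bar is filled, so that only extreme hits can fail.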

So what can be done in such a situation?

Manual Override, MIT and Other Interventions

One approach that will not work is to assume naively that some kind of manual oversight will be sufficient to correct the problem. Let’s say the trader runs two versions of the system side by side, one in simulation and the other in production. When a limit order executes on the simulation system, but fails to execute in production, the trader might step in, manually override the system and execute the trade by crossing the spread. In so doing the trader might prevent losses that would have occurred had the trade not been executed, or force the entry into a trade that later turns out to be profitable. Equally, however, the trader might force the exit of a trade that later turns around and moves from loss into profit, or enter a trade that turns out to be a loser. There is no way for the trader to know, ex-ante, which of those scenarios might play out. And the trader will have to face the same decision perhaps as many as twenty times a day. If the trader is really that good at picking winners and cutting losers he should scrap his trading system and trade manually!

An alternative approach would be to have the trading system handle the problem. For example, one could program the system to convert limit orders to market orders if a trade occurs at the limit price (MIT), or x seconds after the limit price is touched. Again, however, there is no way to know in advance whether such action will produce a positive outcome, or an even worse outcome compared to leaving the limit order in place.

In reality, intervention, whether manual or automated, is unlikely to improve the trading performance of the system. What is certain, however, is that by forcing the entry and exit of trades that occur around the extreme of a price bar, the trader will incur additional costs by crossing the spread. Incurring that cost for perhaps as many as 1/3 of all trades, in a system that is producing, on average, less than half a tick per trade, is certain to destroy its profitability.
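A back-of-the-envelope sketch shows the scale of that cost. It assumes a one-tick spread and that each forced trade crosses it once; both are simplifying assumptions, and the function name is hypothetical. The E-Mini S&P 500 tick is 0.25 index points, worth $12.50 per contract.

```python
def expected_crossing_cost(forced_fraction: float, ticks_crossed: float,
                           tick_value: float) -> float:
    """Average extra cost per trade, spread across ALL trades, from forced executions."""
    return forced_fraction * ticks_crossed * tick_value


ES_TICK_VALUE = 12.50  # dollars per tick per contract (0.25 points x $50)

# Assumption: 1/3 of trades are forced, each paying one tick to cross the spread.
cost = expected_crossing_cost(1 / 3, 1.0, ES_TICK_VALUE)
print(f"average added cost per trade: ${cost:.2f}")
```

Under these assumptions the added cost comes to a little over $4 per trade, most of the roughly $6 average trade in the E-Mini example. That is before counting any forced trade that crosses the spread on both entry and exit, or that turns out to be a loser.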

Successfully Implementing HFT Strategies on a Retail Platform

For many years I assumed that the only solution to the fill rate problem was to implement scalping strategies on HFT infrastructure. One day, I found myself asking the question: what would happen if we slowed the strategy down? Specifically, suppose we took the 3-minute E-Mini strategy and ran it on 5-minute bars?

My first realization was that the relative simplicity of alpha-generation algorithms in HFT strategies is an advantage here. In a low frequency context, the complexity of the alpha extraction process limits its ability to generalize to other assets or time-frames. But HFT algorithms are, by and large, simple and generic: what works on 3-minute bars for the E-Mini futures might work on 5-minute bars in E-Minis, or even in SPY. For instance, if the essence of the algorithm is something as simple as: “buy when the price falls by more than x% below its y-bar moving average”, that approach might work on 3-minute, 5-minute, 60-minute, or even daily bars.
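The quoted rule is simple enough to sketch in a few lines. This is an illustration of that generic rule only, not the E-Mini strategy itself. It assumes a simple moving average that includes the current bar's close, and the function name is hypothetical.

```python
from collections import deque


def dip_buy_signals(closes, y: int, x_pct: float):
    """Per-bar buy flags: True when the close is more than x_pct percent
    below the y-bar simple moving average (current bar included)."""
    window = deque(maxlen=y)
    total = 0.0
    signals = []
    for close in closes:
        if len(window) == y:
            total -= window[0]       # value about to drop out of the window
        window.append(close)
        total += close
        if len(window) < y:
            signals.append(False)    # not enough history yet
            continue
        sma = total / y
        signals.append(close < sma * (1.0 - x_pct / 100.0))
    return signals
```

Nothing in the function refers to the bar interval, so the same code runs unchanged on 3-minute, 5-minute or daily closes. Whether it remains profitable there is an empirical question.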

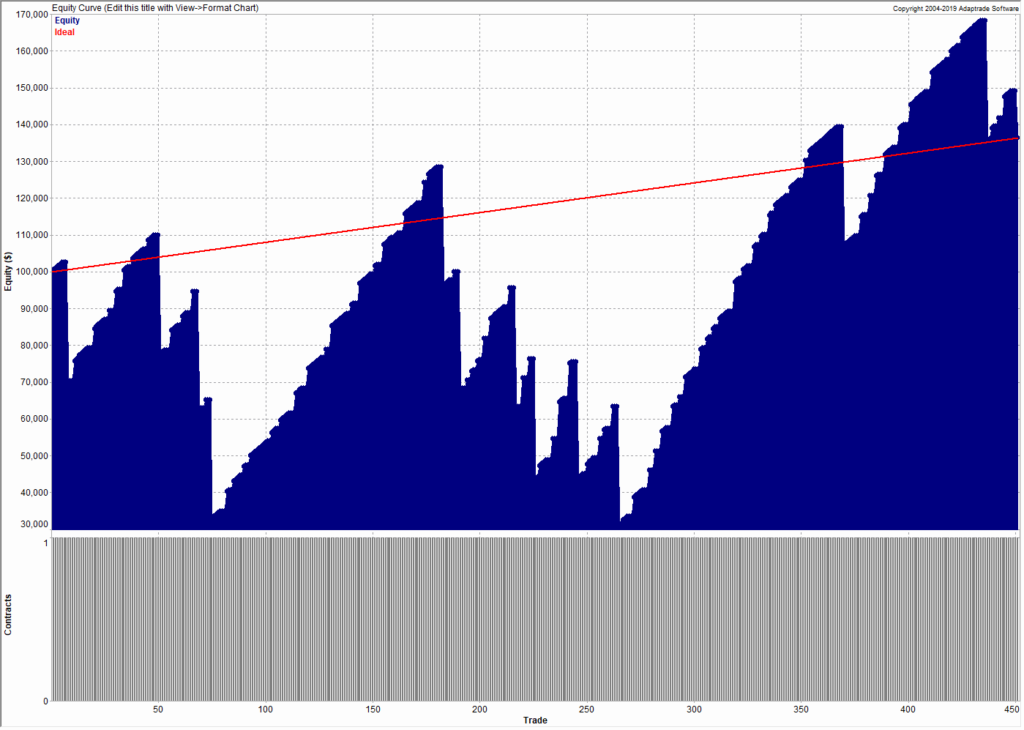

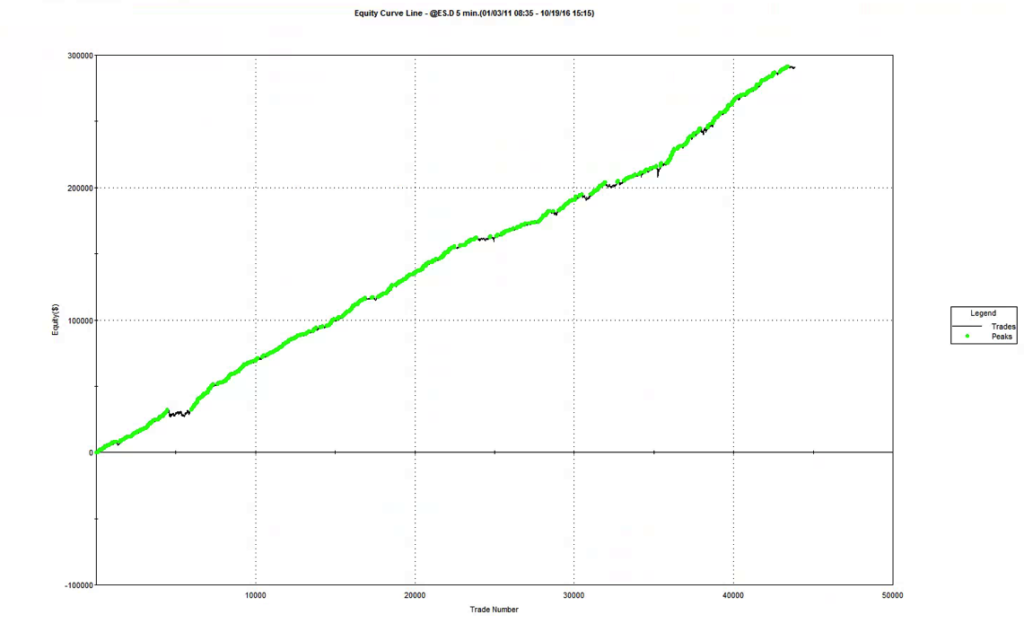

So what happens if we run the E-mini scalping system on 5-minute bars instead of 3-minute bars?

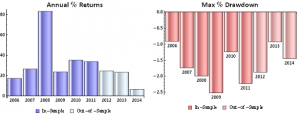

Obviously the overall profitability of the strategy is reduced, in line with the lower number of trades on this slower time-scale. But note that the average trade has increased and the strategy remains very profitable overall.

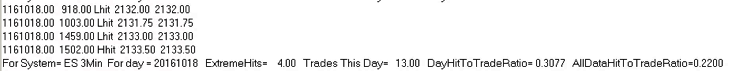

More importantly, the average extreme hit rate has fallen from 34% to 22%.

Hence, not only do we get fewer, slightly more profitable trades, but a much lower proportion of them occur at the extreme of the 5-minute bars. Consequently the fill-rate issue is less critical on this time frame.

Of course, one can continue this process. What about 10-minute bars, or 30-minute bars? What one tends to find from such experiments is that there is a time frame that optimizes the trade-off between strategy profitability and fill rate dependency.

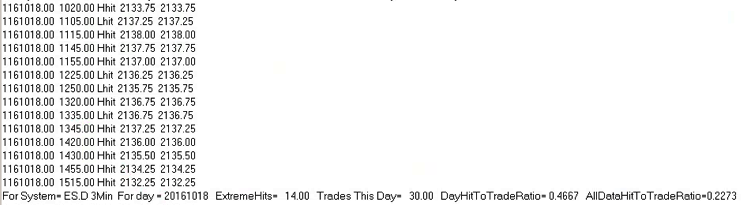

However, there is another important factor we need to elucidate. If you examine the trading record from the system you will see substantial variation in the extreme hit rate from day to day (for example, it is as high as 46% on 10/18, compared to the overall average of 22%). In fact, there are significant variations in the extreme hit rate during the course of each trading day, with rates rising during slower market intervals such as from 12 to 2pm. The important realization that eventually occurred to me is that, of course, what matters is not clock time (or “wall time” in HFT parlance) but trade time: i.e. the rate at which trades occur.

Wall Time vs Trade Time

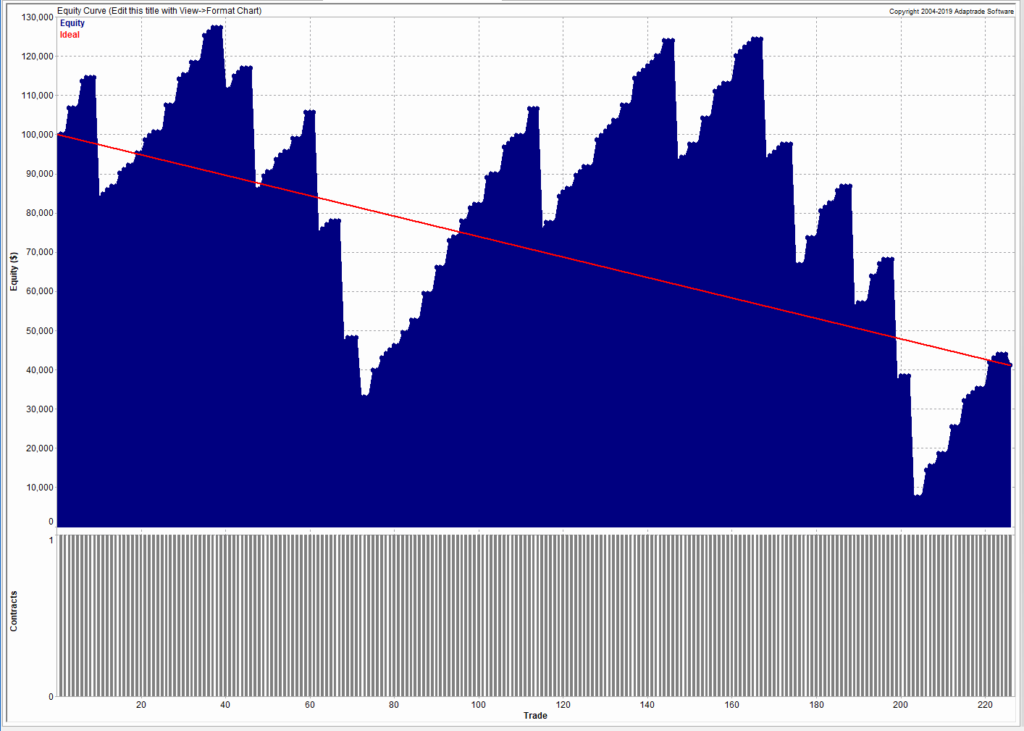

What we need to do is reconfigure our chart to show bars comprising a specified number of trades, rather than a specific number of minutes. In this scheme, we do not care whether the elapsed time in a given bar is 3 minutes, 5 minutes or any other time interval: all we require is that the bar comprises the same amount of trading activity as any other bar. During high volume periods, such as around market open or close, trade time bars will be shorter, comprising perhaps just a few seconds. During slower periods in the middle of the day, it will take much longer for the same number of trades to execute. But each bar represents the same level of trading activity, regardless of how long a period it may encompass.
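Constructing such bars from a tick stream might look like the following sketch. It measures activity in contracts traded (volume bars) and assumes ticks arrive as `(timestamp, price, size)` tuples. The function name and bar layout are hypothetical, and a large print is split across bars so that every completed bar holds exactly the same volume.

```python
def volume_bars(ticks, contracts_per_bar: int):
    """Aggregate (timestamp, price, size) ticks into bars of equal traded volume.

    A trade larger than the space left in the current bar is split across bars.
    The final, incomplete bar is discarded.
    """
    bars = []
    bar = None
    for ts, price, size in ticks:
        remaining = size
        while remaining > 0:
            if bar is None:
                bar = {"start": ts, "end": ts, "open": price, "high": price,
                       "low": price, "close": price, "volume": 0}
            take = min(remaining, contracts_per_bar - bar["volume"])
            bar["high"] = max(bar["high"], price)
            bar["low"] = min(bar["low"], price)
            bar["close"] = price
            bar["end"] = ts
            bar["volume"] += take
            remaining -= take
            if bar["volume"] == contracts_per_bar:
                bars.append(bar)   # bar is full: emit it and start afresh
                bar = None
    return bars
```

The `start` and `end` timestamps show how much wall time each bar spanned. A real implementation would also have to decide how to treat session boundaries, which this sketch ignores.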

How do you decide how many trades per bar you want in the chart?

As a rule of thumb, a strategy will tolerate an extreme hit rate of between 15% and 25%, depending on the daily trade rate. Suppose that in its original implementation the strategy has an unacceptably high hit rate of 50%. And let’s say for illustrative purposes that each time-bar produces an average of 1,000 contracts. Since volatility scales approximately with the square root of time, if we want to reduce the extreme hit rate by a factor of 2, i.e. from 50% to 25%, we need to increase the average volume per bar by a factor of 2^2, i.e. 4. So in this illustration we would need volume bars comprising 4,000 contracts per bar. Of course, this is just a rule of thumb – in practice one would want to implement the strategy on a variety of volume bar sizes in a range from perhaps 3,000 to 6,000 contracts per bar, and evaluate the trade-off between performance and fill rate in each case.
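The square-root rule of thumb reduces to a one-line calculation. The helper name is hypothetical, and the result is only a starting point for the range of bar sizes to test.

```python
def scaled_bar_size(current_size: float, current_hit_rate: float,
                    target_hit_rate: float) -> float:
    """Bar size needed to move the extreme hit rate from current to target,
    assuming the hit rate falls with the square root of volume per bar."""
    factor = (current_hit_rate / target_hit_rate) ** 2
    return current_size * factor


# Worked example from the text: 1,000 contracts per bar at a 50% hit rate,
# targeting a 25% hit rate.
print(scaled_bar_size(1_000, 0.50, 0.25))  # 4000.0
```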

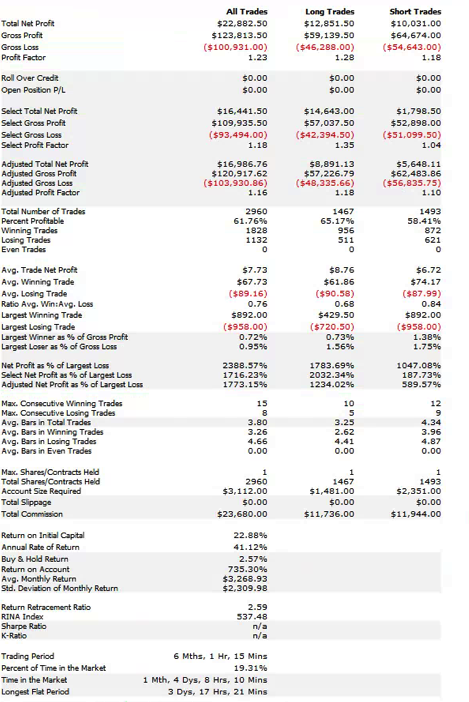

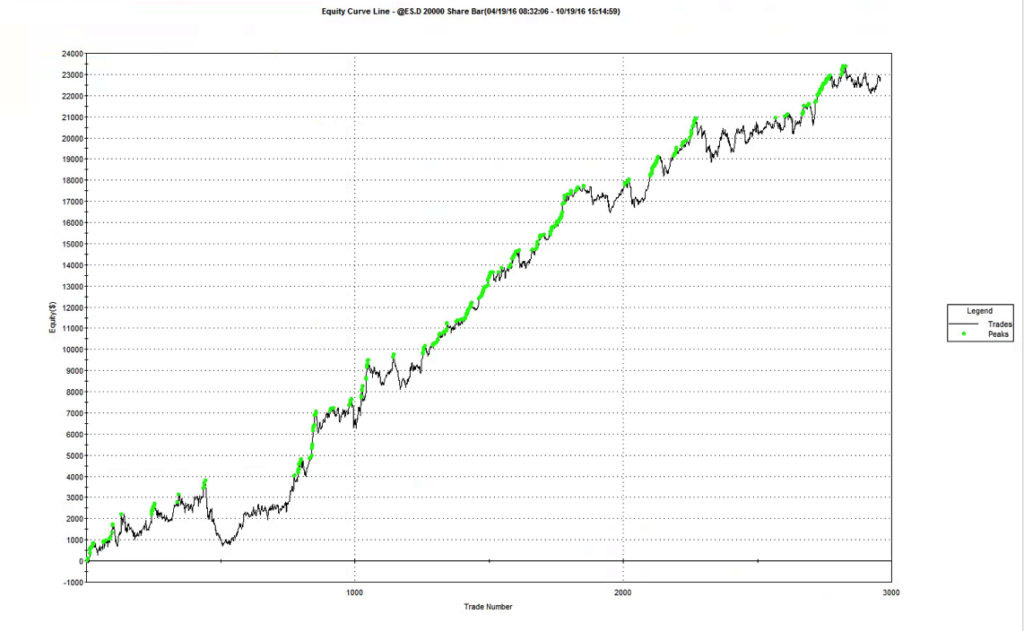

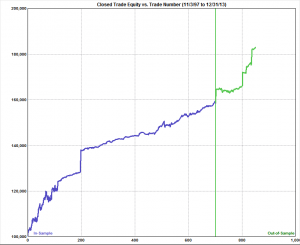

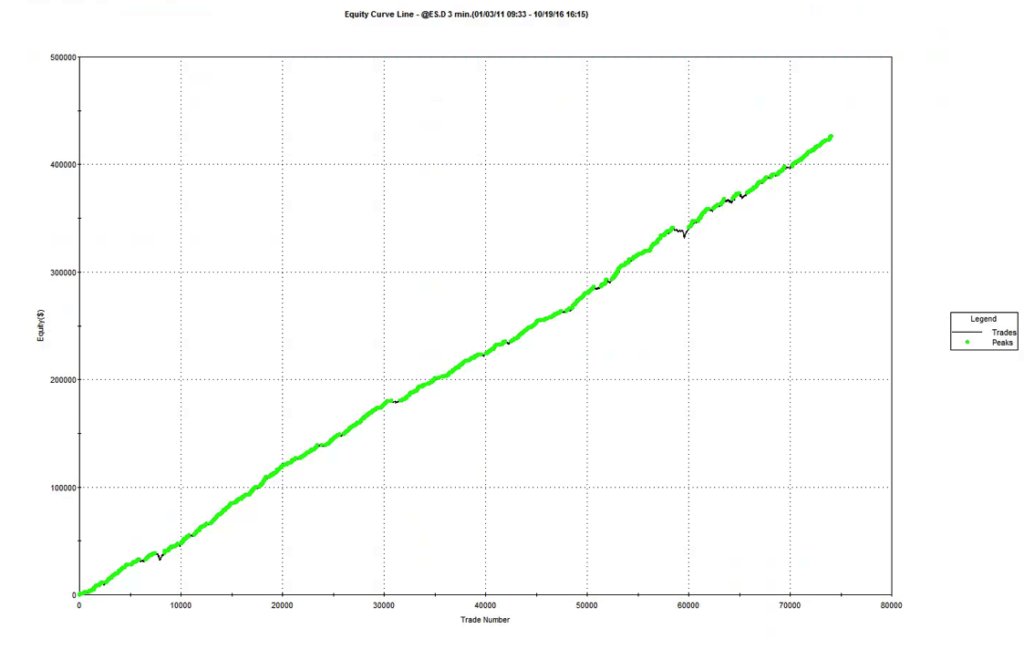

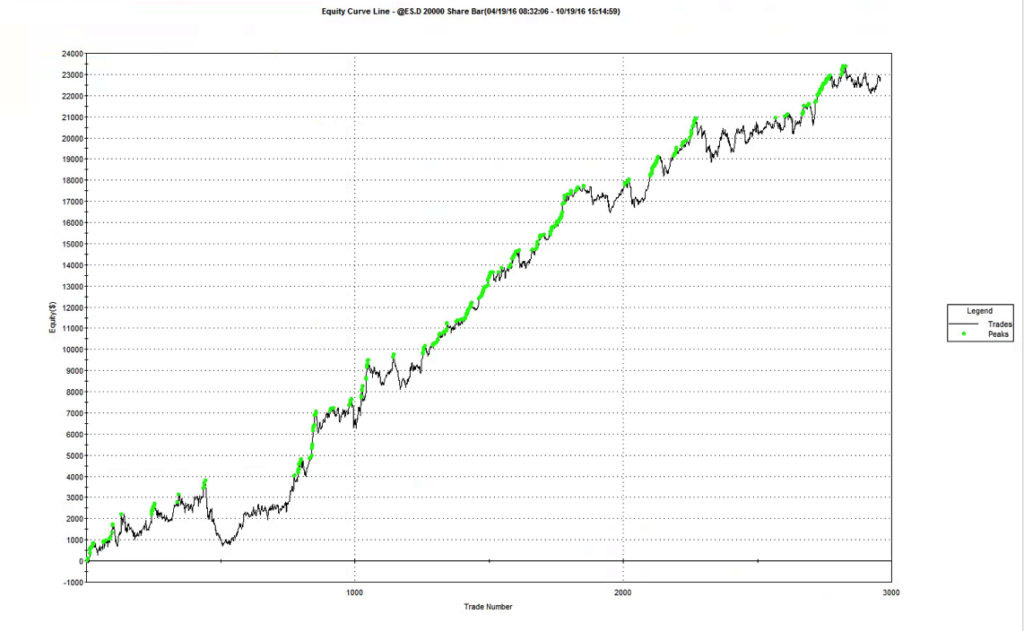

Using this approach, we arrive at a volume bar configuration for the E-Mini scalping strategy of 20,000 contracts per bar. On this “time”-frame, trading activity is reduced to around 20-25 trades per day, but with higher win rate and average trade size. More importantly, the extreme hit rate runs at a much lower average of 22%, which means that the trader has to worry about maybe only 4 or 5 trades per day that occur at the extreme of the volume bar. In this scenario manual intervention is likely to have a much less deleterious effect on trading performance and the strategy is probably viable, even on a retail trading platform.

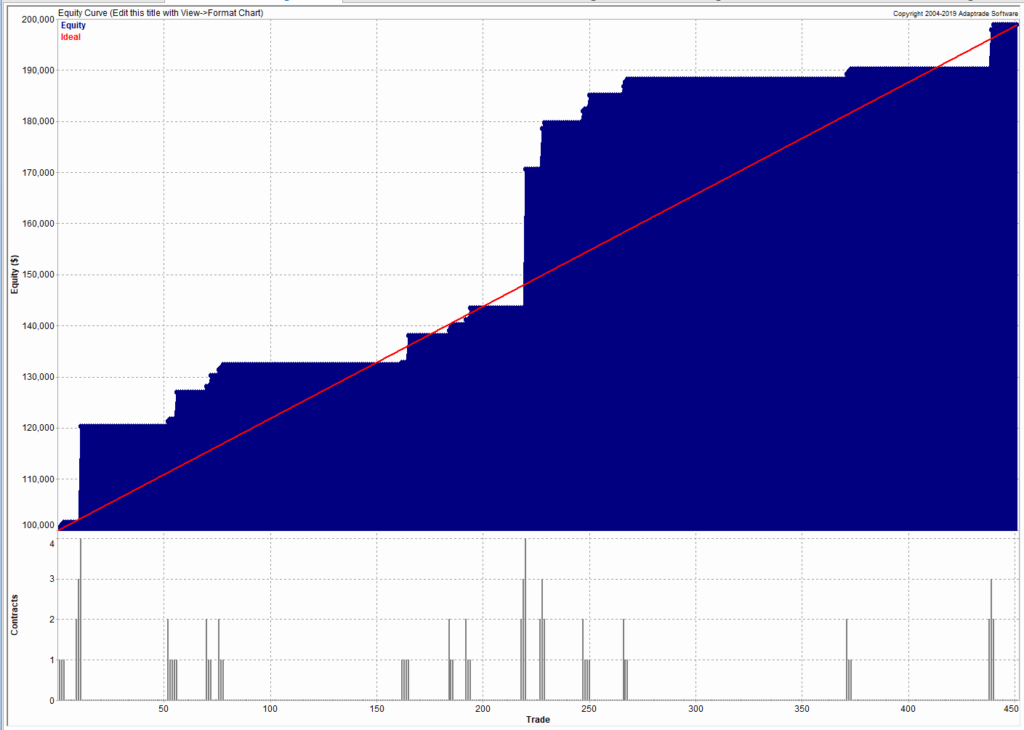

(Note: the results below summarize the strategy performance only over the last six months, the time period for which volume bars are available).

Concluding Remarks

We have seen that it is feasible in principle to implement an HFT scalping strategy on a retail platform by slowing it down, i.e. by implementing the strategy on bars of lower frequency. The simplicity of many HFT alpha generation algorithms often makes them robust to generalization across time frames (and sometimes even across assets). An even better approach is to use volume bars, or trade-time, to implement the strategy. You can estimate the appropriate bar size using the square root of time rule to adjust the bar volume to produce the requisite fill rate. An extreme hit rate of up to 25% may be acceptable, depending on the daily trade rate, although a hit rate in the range of 10% to 15% would typically be ideal.

Finally, a word about data. While necessary compromises can be made with regard to the trading platform and connectivity, the same is not true for market data, which must be of the highest quality, both in terms of timeliness and completeness. The reason is self-evident, especially if one is attempting to implement a strategy in trade time, where the integrity and latency of market data are crucial. In this context, using the data feed from, say, Interactive Brokers simply will not do – data delivered in 500ms packets is entirely unsuited to the task. The trader must seek to use the highest quality market data feed that he can reasonably afford.

That caveat aside, one can conclude that it is certainly feasible to implement high volume scalping strategies, even on a retail trading platform, provided sufficient care is taken with the modeling and implementation of the system.