In a previous post I discussed modelling stock price processes as Geometric Brownian Motion (GBM) processes:

Understanding Stock Price Range Forecasts

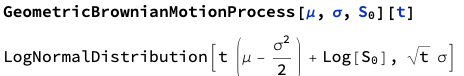

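To recap briefly, we assume a process of the form:

$$dS_t = \mu S_t\,dt + \sigma S_t\,dW_t$$

where $\mu$ is the drift, $\sigma$ is the volatility, $W_t$ is a standard Brownian motion, and $S_0$ is the initial stock price at time $t = 0$.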

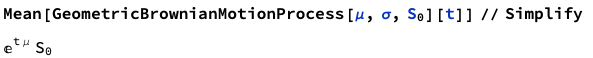

The mean of such a process is:

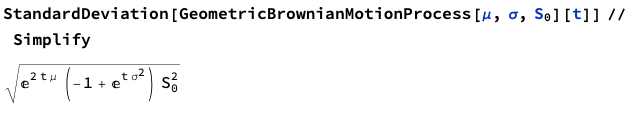

and standard deviation:

In that post I showed how to estimate such a process from daily stock prices and use it to forecast a range of prices over a one-month horizon. This can be useful, for example, when choosing strikes for an option hedge.
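As a rough illustration of the range forecast, here is a minimal Python sketch (not the Mathematica code used in the post) that evaluates the GBM mean and standard deviation at a horizon t. It assumes the parameterization dS = μS dt + σS dW, daily estimates of μ and σ, and roughly 21 trading days in a month. `gbm_moments` is a hypothetical helper name and the inputs are made-up numbers.

```python
import math


def gbm_moments(s0: float, mu: float, sigma: float, t: float) -> tuple[float, float]:
    """Return (mean, standard deviation) of S_t for a GBM started at s0.

    Hypothetical helper. Assumes dS = mu*S dt + sigma*S dW, so that
      E[S_t]  = s0 * exp(mu * t)
      SD[S_t] = s0 * exp(mu * t) * sqrt(exp(sigma**2 * t) - 1)
    mu and sigma must be per unit of t (here: per trading day).
    """
    mean = s0 * math.exp(mu * t)
    sd = mean * math.sqrt(math.exp(sigma ** 2 * t) - 1.0)
    return mean, sd


# Made-up daily estimates, projected over ~21 trading days (about one month).
mean, sd = gbm_moments(s0=100.0, mu=0.0004, sigma=0.012, t=21)
print(f"mean {mean:.2f}, +/- 1 sd range {mean - sd:.2f} to {mean + sd:.2f}")
```

The same function works at any timescale, provided μ, σ and t share a time unit.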

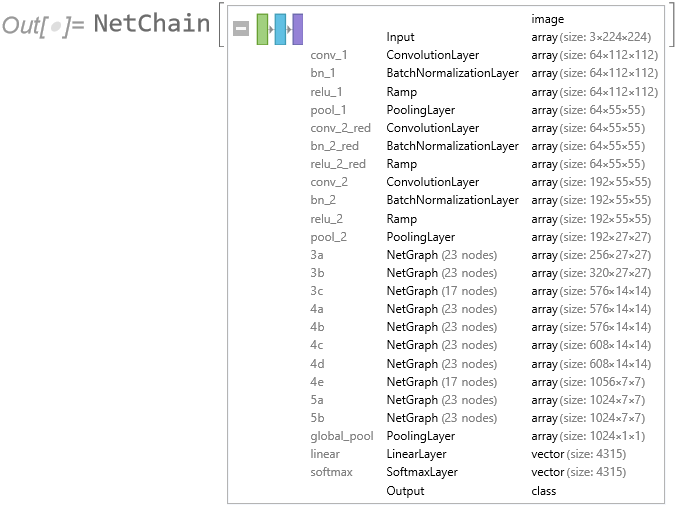

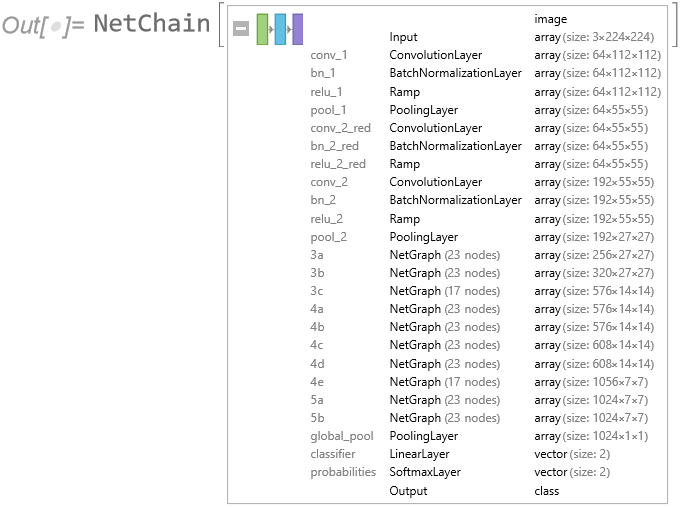

Of course, there is nothing to prevent you from using the same technique over different timescales. Here I use the MATH-TWS package to connect Mathematica to the IB TWS platform via the C++ API and extract intraday prices for the S&P 500 Index at 1-minute intervals. These are used to estimate a short-term GBM process, which provides forecasts of the mean and variance of the index at the 4 PM close.

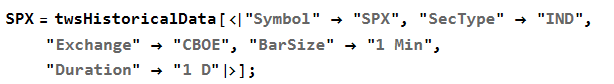

We capture the data using:

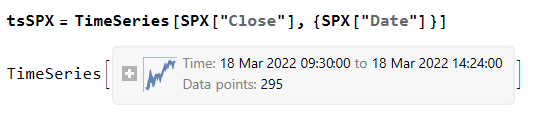

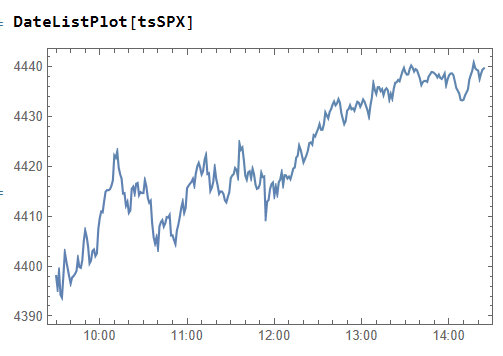

then create a time series of the intraday prices and plot them:

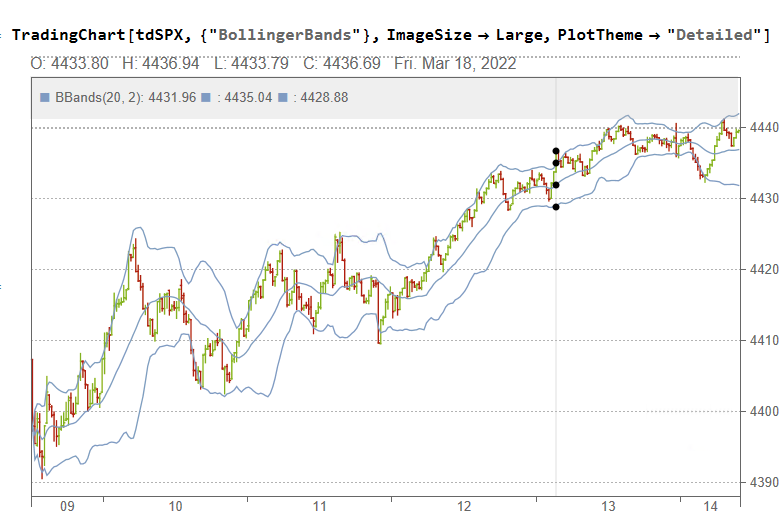

If we want something a little fancier, we can create a trading chart that includes technical indicators of our choice.

The charts can be updated in real time from IB using MATH-TWS.

From there we estimate a GBM process using 1-minute close prices:

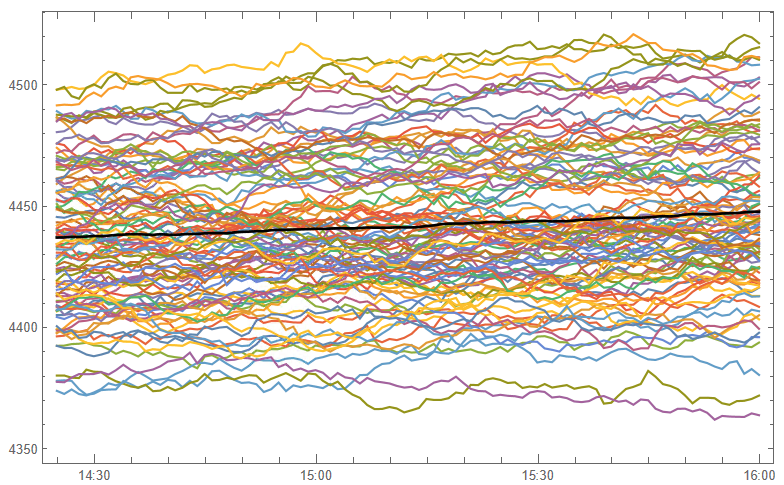
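The estimation step can be sketched in Python with the log-return method, assuming equally spaced 1-minute closes with no gaps. This is one standard estimator, not necessarily what Mathematica does internally: for a GBM the log returns are i.i.d. normal with mean (μ - σ²/2)Δt and variance σ²Δt, so both parameters can be recovered from the sample mean and variance. `estimate_gbm` and the price list are hypothetical.

```python
import math
import statistics


def estimate_gbm(closes: list[float], dt: float = 1.0) -> tuple[float, float]:
    """Estimate GBM drift mu and volatility sigma from equally spaced closes.

    Hypothetical helper. For a GBM, the log returns ln(S_i / S_{i-1}) are
    i.i.d. normal with mean (mu - sigma**2 / 2) * dt and variance sigma**2 * dt.
    With dt = 1 and 1-minute bars, mu and sigma come out per minute.
    """
    if len(closes) < 3:
        raise ValueError("need at least three prices to estimate a variance")
    log_returns = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    m = statistics.fmean(log_returns)
    v = statistics.variance(log_returns)  # sample variance, n - 1 denominator
    sigma = math.sqrt(v / dt)
    mu = m / dt + 0.5 * sigma ** 2  # add back the Ito correction
    return mu, sigma


# Hypothetical 1-minute closes, for illustration only.
closes = [100.00, 100.03, 99.98, 100.05, 100.07, 100.04, 100.10]
mu_min, sigma_min = estimate_gbm(closes)
print(f"per-minute drift {mu_min:.6f}, per-minute volatility {sigma_min:.6f}")
```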

and then simulate a number of price paths towards the 4 PM close (the mean price path is shown in black):
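A path simulation of this kind might look like the following Python sketch. It uses the exact log-normal update for each 1-minute step rather than an Euler approximation, so simulated prices stay positive, and it averages across paths at each step to get the mean path. The function names, parameter values and the 90-minute horizon are hypothetical.

```python
import math
import random


def simulate_gbm_paths(s0, mu, sigma, n_steps, n_paths, dt=1.0, seed=None):
    """Simulate GBM price paths with the exact log-normal step
    S_{k+1} = S_k * exp((mu - sigma**2 / 2) * dt + sigma * sqrt(dt) * Z), Z ~ N(0, 1).

    Hypothetical helper; returns a list of paths, each of length n_steps + 1.
    """
    rng = random.Random(seed)
    drift = (mu - 0.5 * sigma ** 2) * dt
    vol = sigma * math.sqrt(dt)
    paths = []
    for _ in range(n_paths):
        s = s0
        path = [s]
        for _ in range(n_steps):
            s *= math.exp(drift + vol * rng.gauss(0.0, 1.0))
            path.append(s)
        paths.append(path)
    return paths


def mean_path(paths):
    """Cross-sectional average of the simulated paths at each step."""
    return [sum(prices) / len(prices) for prices in zip(*paths)]


# Made-up per-minute parameters, 90 minutes left to the close, 500 paths.
paths = simulate_gbm_paths(s0=100.0, mu=1e-5, sigma=5e-4, n_steps=90, n_paths=500, seed=42)
avg = mean_path(paths)
print(f"simulated mean at close {avg[-1]:.3f}, analytic {100.0 * math.exp(1e-5 * 90):.3f}")
```

With enough paths, the simulated mean at the last step should sit close to the analytic value S_0·e^{μT}, which is a quick sanity check on the simulation.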

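This indicates that the expected value of the SPX index at the close will be around 4450, which we could also estimate directly from:

$$E[S_T] = S_0 e^{\mu T}$$

where $S_0$ is the current index level, $T$ is the time remaining until the close, and $\mu$ is the estimated drift of the GBM process.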
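To apply the expected-close formula E[S_T] = S_0·e^{μT} intraday, T has to be measured in the same units as μ. The Python sketch below assumes μ is a per-minute drift (as estimated from 1-minute bars) and that `now` is given in exchange-local (US Eastern) time. `minutes_to_close` and `expected_close` are hypothetical helpers, and the inputs are made up.

```python
import math
from datetime import datetime, time


def minutes_to_close(now: datetime, close: time = time(16, 0)) -> float:
    """Minutes from `now` until the close on the same day (0 if already past).

    Hypothetical helper; assumes `now` is in exchange-local (US Eastern) time.
    """
    close_dt = datetime.combine(now.date(), close, tzinfo=now.tzinfo)
    return max((close_dt - now).total_seconds() / 60.0, 0.0)


def expected_close(s_now: float, mu_per_min: float, now: datetime) -> float:
    """GBM expected value at the close: E[S_T] = s_now * exp(mu * T), T in minutes."""
    return s_now * math.exp(mu_per_min * minutes_to_close(now))


# Made-up inputs: 2:30 PM, index at 100, per-minute drift of 2e-5.
print(expected_close(100.0, 2e-5, datetime(2024, 1, 2, 14, 30)))
```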

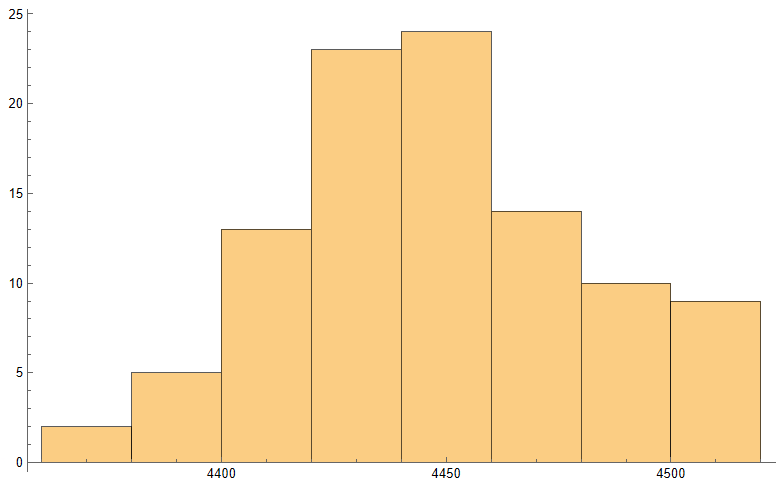

Similarly, we can look at the projected terminal distribution of the index at 4 PM to get a sense of the likely range of closing prices, which may help in deciding whether to open or close certain option (hedge) positions.
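Because S_T is log-normal under a GBM, the terminal distribution can also be summarized analytically instead of from simulated paths: ln S_T is normal with mean ln S_0 + (μ - σ²/2)T and variance σ²T. The Python sketch below turns that into percentile bounds for the close using the standard library's `statistics.NormalDist` (Python 3.8+). The 5%/95% levels, the parameter values and the function name are illustrative choices, not taken from the post.

```python
import math
from statistics import NormalDist


def gbm_terminal_quantiles(s0, mu, sigma, t, probs=(0.05, 0.5, 0.95)):
    """Quantiles of S_t for a GBM started at s0 (hypothetical helper).

    ln S_t ~ Normal(ln s0 + (mu - sigma**2 / 2) * t, sigma * sqrt(t)),
    and exp() is monotone, so quantiles of S_t are exp() of log-quantiles.
    """
    if t <= 0 or sigma <= 0:
        raise ValueError("t and sigma must be positive")
    log_st = NormalDist(mu=math.log(s0) + (mu - 0.5 * sigma ** 2) * t,
                        sigma=sigma * math.sqrt(t))
    return {p: math.exp(log_st.inv_cdf(p)) for p in probs}


# Made-up per-minute parameters with 90 minutes left to the close.
band = gbm_terminal_quantiles(s0=100.0, mu=1e-5, sigma=5e-4, t=90)
print(f"5%: {band[0.05]:.2f}  median: {band[0.5]:.2f}  95%: {band[0.95]:.2f}")
```

A 5% to 95% band then reads as "if the current drift and volatility persist, the close should fall in this range about 90% of the time". Note that the median, S_0·e^{(μ - σ²/2)T}, sits slightly below the mean.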

Of course, all this is predicated on the underlying process continuing on its current trajectory, with drift and standard deviation close to those seen in the preceding time interval. But trends change, as do volatilities, so our forecasts may be inaccurate. Furthermore, at short horizons the drift of an asset price process tends to be dominated by its volatility: the drift contribution grows in proportion to the horizon, while the volatility contribution grows only with its square root.

So the best way to think of this is as a conditional expectation, i.e. “If the stock price continues on its current trajectory, then our expectation is that the closing price will be in the following range…”.

For more on MATH-TWS see:

MATH-TWS: Connecting Wolfram Mathematica to IB TWS