Building a deep learning neural network from scratch is a complicated and time-consuming task. For some problems it can be easier to repurpose an existing deep learning model, making minor adjustments to a small number of layers to achieve the desired architecture.

In his new book Introduction to Machine Learning, Etienne Bernard gives a nice example of the technique.

We begin with a dataset comprising images of two types of mushroom, with the aim of building a specialized image classifier.

Using a Neural Network for Feature Extraction

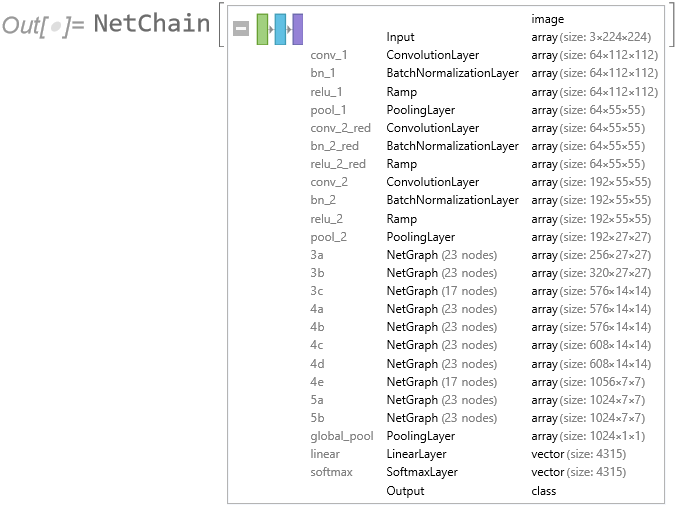

We start from the Wolfram ImageIdentify net, which is a 24-layer convolutional neural network trained on around 4000 image types.

net=NetModel["Wolfram ImageIdentify Net V1"]

In its current form the net is too generalized for our task:

Mushroom

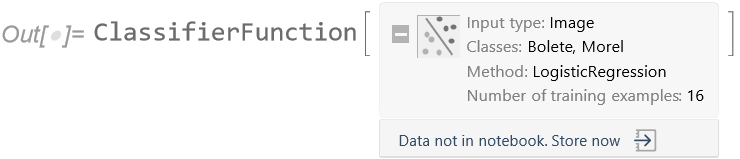

So instead of using the net in raw form, we use the first 22 layers as a feature extractor. This will preprocess each image to obtain features that are semantically richer than simple pixel values. We can then train a classifier on top of these new features, producing a logistic regression model:

c=Classify[dsTrain,FeatureExtractor->NetTake[net,22]]
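To make the feature-extraction step concrete, the following minimal sketch applies the truncated net to a single image and inspects the result. The names `extractor`, `img` and `features` are illustrative, and the random image is only a stand-in for a real mushroom photo.

```wolfram
(* Load the pretrained net and keep its first 22 layers as a feature extractor *)
net = NetModel["Wolfram ImageIdentify Net V1"];
extractor = NetTake[net, 22];

(* Stand-in input (assumption): a random RGB image; any image can be used here *)
img = RandomImage[1, {224, 224}, ColorSpace -> "RGB"];

(* The truncated net returns a numeric feature array instead of a class label *)
features = extractor[img];
Dimensions[features]
```

These features, rather than the raw pixels, are what the classifier is trained on.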

The classifier can now distinguish between the two types of mushroom.

The classifier achieves around 88% accuracy on a test set constructed from a web image search:

dsTest = AssociationMap[WebImageSearch[#, "Thumbnails", 10] &, {"Morel", "Bolete"}] /. $Failed -> Nothing // Quiet;

ClassifierMeasurements[c, dsTest, "Accuracy", ComputeUncertainty -> True]

0.88 +/- 0.09

This is much better than training directly on the underlying pixel values, which would result in an accuracy of around 44%, worse than random guessing.
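The text does not say how the pixel baseline was computed. One plausible way to reproduce it, assuming the built-in "PixelVector" feature extractor is an acceptable stand-in for training directly on pixel values, is sketched here. The name `cPixels` is hypothetical; `dsTrain` and `dsTest` are the datasets used above.

```wolfram
(* Assumption: "PixelVector" stands in for training directly on pixel values *)
cPixels = Classify[dsTrain, FeatureExtractor -> "PixelVector"];

(* Score against the same web-search test set used for the first classifier *)
ClassifierMeasurements[cPixels, dsTest, "Accuracy", ComputeUncertainty -> True]
```

Because the test images come from a live web search, the measured accuracy will vary from run to run.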

Neural Network Transfer Learning

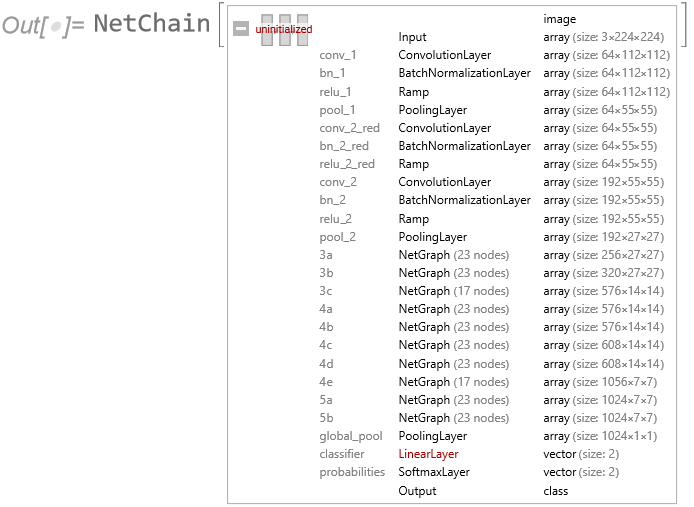

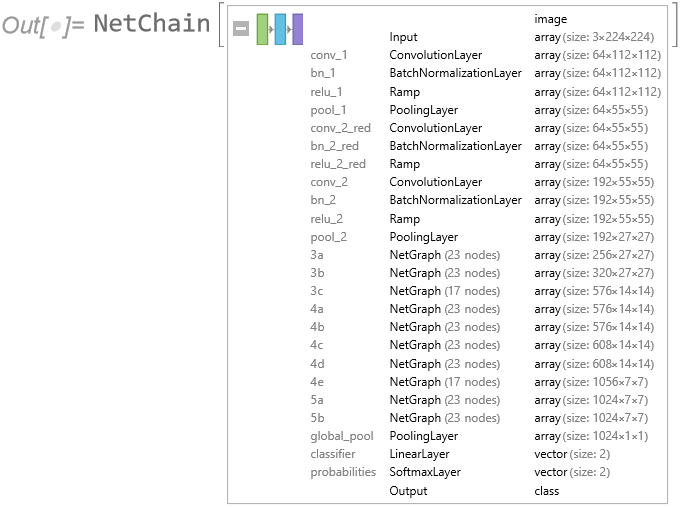

Moving on from Etienne’s feature extraction approach, we next try creating a new neural network by re-using the first 22 layers of the ImageIdentify network, adding two new layers (a LinearLayer and SoftmaxLayer) for mushroom classification:

changedNet=Drop[net,-2];

newNet=NetJoin[changedNet,NetChain[Association["classifier"->LinearLayer[],"probabilities"->SoftmaxLayer[]],"Output"->NetDecoder[{"Class",{"Bolete","Morel"}}]]]

We re-train the new network using the mushroom training dataset, holding the weights for the first 22 layers at their existing values (i.e. only training the last two, new layers):

trainedNet=NetTrain[newNet,Normal@dsTrain,LearningRateMultipliers->{"classifier"->1,_->0},BatchSize->16,TargetDevice->"GPU"]

Next, create a new test dataset and test the new neural network’s accuracy:

dsTest=AssociationMap[WebImageSearch[#,"Thumbnails",10] &,{"Morel","Bolete"}] /. $Failed->Missing[] //Quiet;

The new net achieves 100% accuracy on the test dataset:

trainedNet[DeleteMissing@dsTest["Bolete"]]

{"Bolete", "Bolete", "Bolete", "Bolete", "Bolete", "Bolete", "Bolete", "Bolete", "Bolete"}

trainedNet[DeleteMissing@dsTest["Morel"]]

{"Morel", "Morel", "Morel", "Morel", "Morel", "Morel", "Morel", "Morel"}
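Rather than reading the accuracy off the printed lists, it can be computed directly. The following is a minimal sketch, assuming `trainedNet` and `dsTest` are defined as above; the names `boletes`, `morels`, `predictions` and `truth` are illustrative.

```wolfram
(* Collect the test images for each class, dropping any failed downloads *)
boletes = DeleteMissing@dsTest["Bolete"];
morels = DeleteMissing@dsTest["Morel"];

(* Predicted labels from the retrained net, and the matching true labels *)
predictions = Join[trainedNet[boletes], trainedNet[morels]];
truth = Join[ConstantArray["Bolete", Length[boletes]], ConstantArray["Morel", Length[morels]]];

(* Fraction of test images whose predicted label matches the true label *)
N[Count[MapThread[SameQ, {predictions, truth}], True]/Length[truth]]
```

With only a handful of test images per class, this estimate carries considerable uncertainty, and the result depends on what the web search returns.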